ChatGPT Parent OpenAI Establishes Oversight Committee For Safety and Security

OpenAI, the company behind ChatGPT, recently announced the formation of an independent board oversight committee focused on safety and security. The decision follows ongoing controversy over the organization's security processes.

Background

In a blog post on Monday, OpenAI disclosed that the Safety and Security Committee, initially established in May, will now operate as an independent board oversight committee. The committee will be chaired by Zico Kolter, director of the machine learning department at Carnegie Mellon University's School of Computer Science. Its other members are Adam D'Angelo, an OpenAI board member and co-founder of Quora; Paul Nakasone, a former NSA chief and OpenAI board member; and Nicole Seligman, a former executive vice president at Sony.

The committee's primary role is to oversee the safety and security processes that guide OpenAI's model development and deployment. After a 90-day review of OpenAI's processes and safeguards, the committee made five recommendations to the board: establish independent governance for safety and security, enhance security measures, be transparent about OpenAI's work, collaborate with external organizations, and unify the company's safety frameworks. The committee will also have the authority to oversee model launches and to delay a release if safety concerns arise.

Importance of the Committee

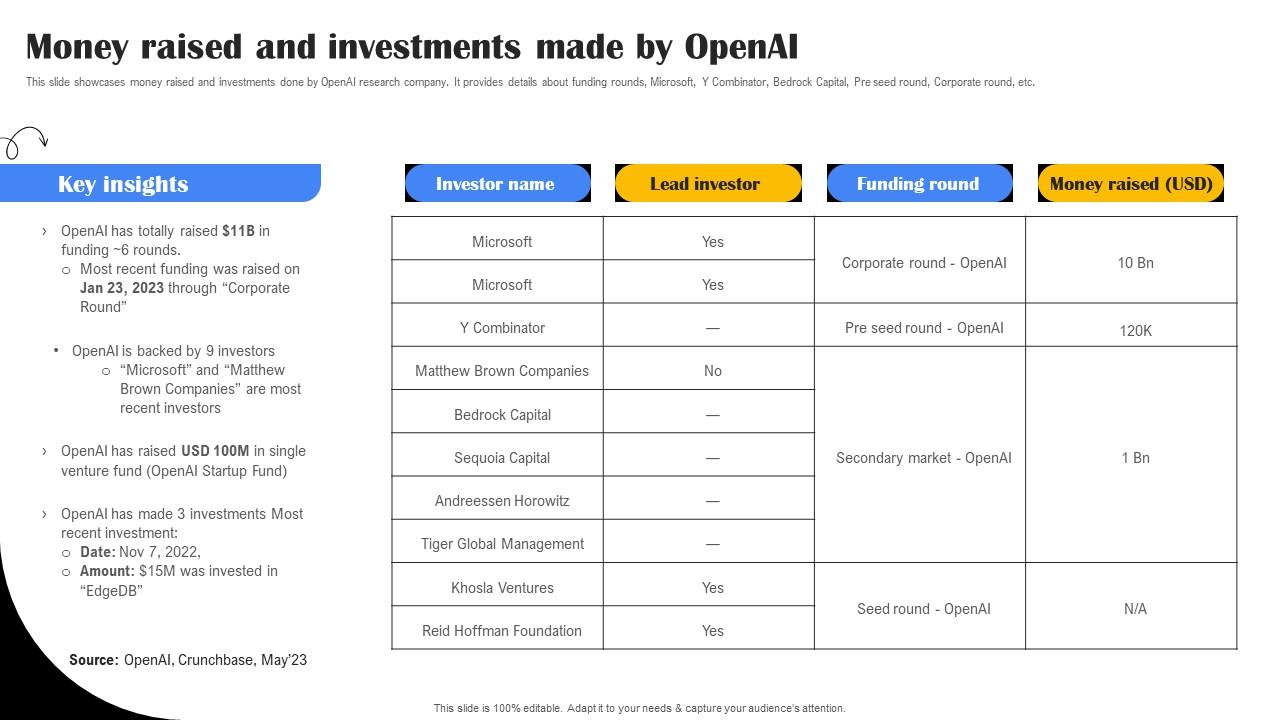

OpenAI has been under scrutiny following controversies and the departure of several high-level employees, which have raised concerns that the organization's rapid growth could be compromising operational safety. The company is currently raising a funding round that could value it at over $150 billion. Thrive Capital is set to lead the round with a planned investment of $1 billion, and Tiger Global is expected to join. Microsoft, Nvidia, and Apple are reportedly in talks to invest as well.

OpenAI also recently introduced a new model named "o1," designed for reasoning and solving complex problems. While the model shows advanced capabilities, it has raised concerns about potential misuse, particularly in the creation of biological weapons. OpenAI has rated o1 as "medium risk" with respect to chemical, biological, radiological, and nuclear (CBRN) weapons.

Disclaimer: This content incorporates insights from Benzinga Neuro and was reviewed and published by Benzinga editors. Benzinga does not offer investment advice. All rights reserved.