AI Gone Wild: How Grok-2 Is Pushing The Boundaries Of Ethics And Innovation

As AI continues to evolve at breakneck speed, Elon Musk's latest creation, Grok-2, is making waves in the tech world. This powerful new AI model is not just pushing the boundaries of what's technologically possible; it's also challenging our notions of AI ethics and responsibility. Let's dive into the fascinating and controversial world of Grok-2 and explore what it means for the future of AI.

The Capabilities of Grok-2

Grok-2, the latest offering from Musk's xAI company, is designed to be a jack-of-all-trades in the AI world. Available to X (formerly Twitter) Premium subscribers, this model boasts impressive capabilities in chat, coding, and image generation. But what sets Grok-2 apart from its predecessors and competitors?

For starters, Grok-2 is flexing its intellectual muscles in ways that are turning heads. It seems to be going toe-to-toe with OpenAI's GPT-4 and Google's Gemini in areas like coding and mathematics. This is no small feat, considering the fierce competition in the AI space.

But Grok-2's capabilities extend beyond mere number-crunching and code generation. Its image-creation abilities are where things start to get really interesting—and controversial.

The Controversy Surrounding Grok-2

Unlike more restrained AI models such as ChatGPT or Google's Gemini, Grok-2 seems to operate with fewer ethical guardrails. This has resulted in the generation of images that would make other chatbots blush and regulators frown.

We're talking about AI-generated images that push the boundaries of taste and, in some cases, veer into potentially harmful territory.

This laissez-faire approach to content generation is raising eyebrows and concerns, especially in light of upcoming elections and the ongoing battle against misinformation.

The Impact and Risks of Grok-2

The ease with which Grok-2 can create convincing fake images and potentially misleading content is alarming. In an era when distinguishing fact from fiction online is already challenging, tools like Grok-2 could exacerbate the spread of misinformation and deepen societal divisions.

Looking Ahead

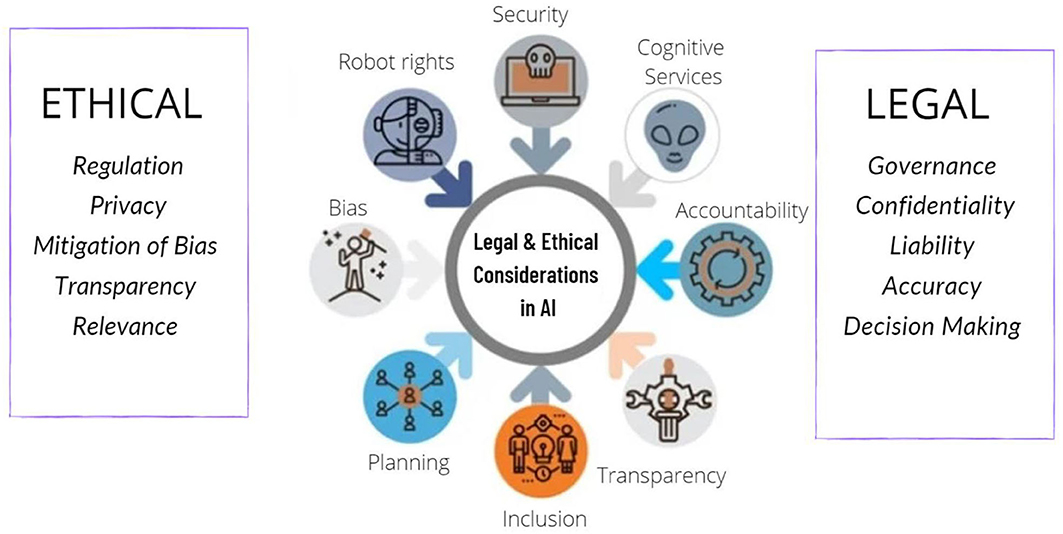

As we continue to explore the frontiers of AI technology, the development of models like Grok-2 highlights the need for ongoing dialogue between tech innovators, ethicists, policymakers, and the public.

We must find ways to harness the incredible potential of AI while also implementing safeguards to protect against its misuse. This may involve developing more sophisticated content moderation tools, investing in digital literacy education, and creating clearer ethical guidelines for AI development.

The story of Grok-2 is still unfolding, but one thing is clear: it represents a pivotal moment in the evolution of AI. How we respond to the challenges and opportunities it presents will shape the future of technology and society for years to come.