OpenAI's GPT-4o mini model is battling Gemini Flash and Claude Haiku

OpenAI has been making waves this summer with the introduction of GPT-4o, a new model that pushes the boundaries of artificial intelligence (AI). It supports voice conversations, web browsing to answer queries, coding, data analysis, text-to-image generation, and even troubleshooting from just a photo. Users can also interact with the AI assistant through their phone's camera, giving it a deeper contextual understanding of the world around them.

Advancements in AI Technology

At Apple's recent WWDC event, ChatGPT's integration into iOS, iPadOS, and macOS was announced, further solidifying OpenAI's presence in the AI landscape. The innovations didn't stop there: the GPT-4o mini model, despite its smaller size, offers impressive performance and better cost-effectiveness than its predecessors.

Future Developments

GPT-4o mini currently supports text and vision in the API, and OpenAI plans to extend it to text, image, video, and audio inputs and outputs. The model's improved tokenizer processes non-English text more efficiently, further expanding its usability.

Developers can access GPT-4o mini at a fraction of the cost of previous models, with pricing set at 15 cents per million input tokens and 60 cents per million output tokens. This affordability, coupled with its versatility, positions the mini model as a compelling choice for AI integration.
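
To make the pricing concrete, here is a minimal sketch of the arithmetic using the two rates quoted above. The function name and the sample workload are hypothetical and chosen only for illustration; actual bills depend on how a given request is tokenized.

```python
# Prices quoted in the article, in US dollars per one million tokens.
INPUT_PRICE_PER_MILLION = 0.15
OUTPUT_PRICE_PER_MILLION = 0.60


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Return the estimated cost in US dollars for a given token count.

    Hypothetical helper: it only applies the per-million rates above
    and ignores any discounts, rounding, or billing rules.
    """
    input_cost = input_tokens / 1_000_000 * INPUT_PRICE_PER_MILLION
    output_cost = output_tokens / 1_000_000 * OUTPUT_PRICE_PER_MILLION
    return input_cost + output_cost


if __name__ == "__main__":
    # Illustrative workload: 2 million input tokens, 500,000 output tokens.
    print(f"${estimate_cost(2_000_000, 500_000):.2f}")  # prints $0.60
```

In this example the input side costs 30 cents and the output side costs 30 cents, which shows that output tokens, priced four times higher, can dominate the bill even when they are a minority of the traffic.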

Competition and Innovation

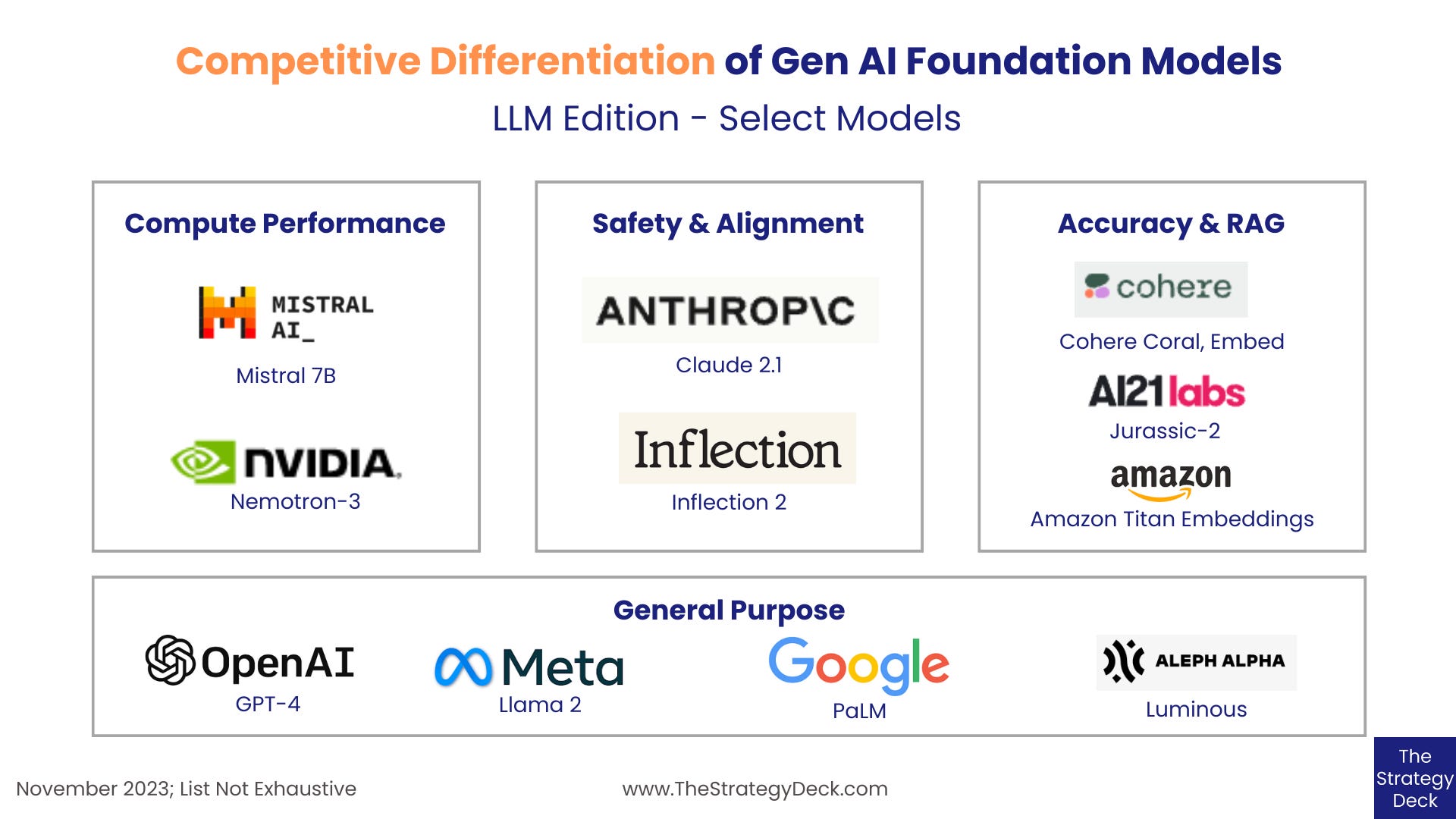

OpenAI's introduction of GPT-4o mini addresses the growing demand for cost-effective AI models. Competitors such as Google's Gemini 1.5 Flash and Anthropic's Claude 3 Haiku offer alternatives, each with its own pricing and capabilities.