GPT-4 Has Passed the Turing Test, Researchers Claim - Slashdot

LLMs - The Ultimate Example of Teaching to the Test?

Funny that the first and second posts are about the exact same thing: if you were to construct from scratch a system with only one goal, passing the Turing test, LLMs are how you would do it. They are built to output a stream of words, each one the statistically most likely next word based on what other humans have written. So in response to almost anything, an LLM will produce a string of words that almost always looks and reads very human on the surface.
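
To make the "statistically most likely word" idea concrete, here is a toy sketch. It is only an illustration under loud assumptions: real LLMs are neural networks over tokens, not bigram counters, and the function names and the tiny corpus below are made up for this example.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count, for each word, which words follow it in the training text."""
    follows = defaultdict(Counter)
    words = text.lower().split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def generate(follows, start, max_words=8):
    """Greedily append the most frequent follower of the last word."""
    out = [start]
    for _ in range(max_words - 1):
        candidates = follows.get(out[-1])
        if not candidates:
            break  # no word ever followed this one in the corpus
        out.append(candidates.most_common(1)[0][0])
    return " ".join(out)

# Hypothetical toy corpus; "cat" follows "the" more often than anything else.
corpus = "the cat sat on the mat . the cat ate the fish ."
model = train_bigrams(corpus)
print(generate(model, "the", max_words=2))  # prints "the cat"
```

Even this crude version produces text that looks locally plausible, which is the point being made: surface fluency falls out of the statistics alone.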

Eliza passed the Turing test. It's not that interesting. It doesn't tell us anything about the machine. All it does is highlight how ridiculous people can be. "Teaching to the test" is the whole game. It's not about making an intelligent machine, it's about making credulous humans think the machine is intelligent. I guess it depends on the IQ of the person. It becomes clear pretty quickly that an Eliza is not AI and certainly not a human. Or at least it takes some meta-knowledge, as in: okay, they want to test whether I can figure out that the other one is an AI... how do I trick an AI into revealing itself? On the other hand, I once put an Eliza into an IRC channel. It would drag people into a conversation if they mentioned its name, Pirx. Since I created a new ElizaObject for each of Pirx's conversation partners, he never mixed anything up.
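
The one-object-per-conversation-partner setup described for Pirx could look roughly like the sketch below. Everything here is an assumption for illustration: `ElizaSession` is a stand-in for the commenter's ElizaObject, the two pattern rules are invented, and the IRC plumbing is left out entirely.

```python
import re

class ElizaSession:
    """Hypothetical stand-in for one ElizaObject: state for a single user."""
    RULES = [
        (re.compile(r"\bi am (.+)", re.I), "Why are you {0}?"),
        (re.compile(r"\bi feel (.+)", re.I), "How long have you felt {0}?"),
    ]

    def __init__(self):
        self.history = []  # messages seen from this user only

    def reply(self, message):
        self.history.append(message)
        for pattern, template in self.RULES:
            match = pattern.search(message)
            if match:
                # Reflect the user's own words back, Eliza-style.
                return template.format(match.group(1).rstrip(".!?"))
        return "Tell me more."

class Bot:
    """Keeps a separate ElizaSession per nick so conversations never mix."""
    def __init__(self, name):
        self.name = name
        self.sessions = {}

    def on_message(self, nick, text):
        if nick not in self.sessions:
            # Only get dragged in when the bot's name is mentioned.
            if self.name.lower() not in text.lower():
                return None
            self.sessions[nick] = ElizaSession()
        return self.sessions[nick].reply(text)

bot = Bot("Pirx")
print(bot.on_message("alice", "Pirx, I am tired"))  # prints "Why are you tired?"
```

Keying the sessions by nick is what keeps one person's history from leaking into another's replies.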

AI vs. Human: Spotting the Difference

A human judging ELIZA to be a fellow human is not so much a case of ELIZA passing the Turing test as of the human failing it. That's very easily said because we all know Eliza's tricks. However, if you aren't trying to trick it, then it's very possible to have a "lucky" Eliza session where what you want to talk about perfectly matches what Eliza expects. A time-limited Turing test could easily be passed.

I feel like a lot of passed Turing tests lean pretty heavily on the novelty of the experience. When ELIZA came out, terminals were still a luxury and Adventure wasn't even a thing, so if you typed something into a computer and got anything even slightly resembling a human response, it's easy to see how a person could decide that's not how computers act, so it must be a person.

ChatGPT - The Modern Contender

ChatGPT seems very human when you first interact with it, but once you know how modern LLMs behave you're much less likely to be fooled. ChatGPT can chat intelligently with me about building a moderately complex PHP application... more intelligently than some coworkers I have had. It can also usually just build it... given good requirements and feedback. Again, better than some coworkers I've had... I don't know if that's "intelligent" in a philosophical sense, but it's pretty freakin' amazing, unless I move the goalposts in massive fashion.

AI Testing and Results

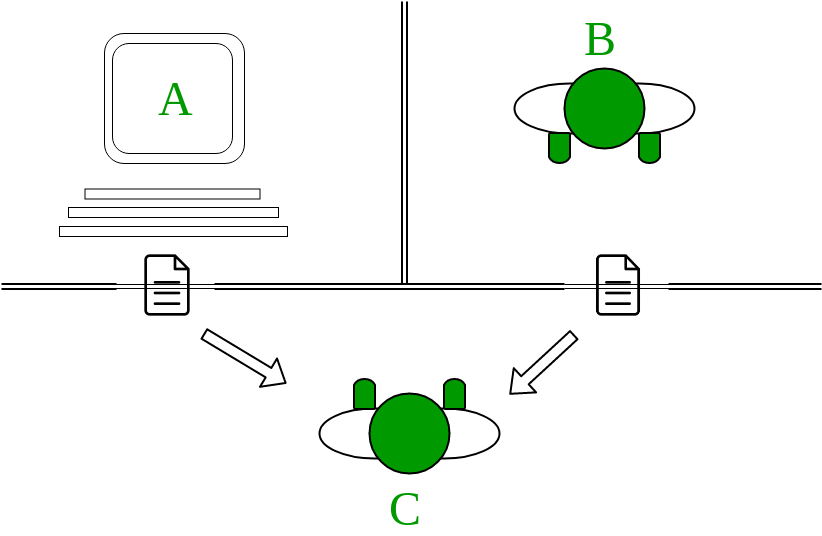

Define "smarter". There ARE reasonable definitions that would validate your assertion, but most of them wouldn't. LLMs don't even understand that the external world exists, much less understand it. Properly structured, the AI would have smaller modules handling specific data inputs, and other modules interconnecting those modules. And it would also need to experime...

An AI or a human can be easily spotted by using trigger words or employing general craziness. The problem with the result and the test in general is that people are not testing the system, but expect to have a believable conversation. So, there is a preset desire to be fooled.

Evaluating the Turing Test

I'd hardly say that a 54% score is passing, but maybe the researchers were grading on a curve. The 5-minute test time smacks of tuning the test to get closer to the desired result. Overall, I'm unimpressed.

Considering humans only scored 67%, the 54% doesn't bother me so much. But the 5-minute threshold is really low. If you look at the paper, the "conversations" are the equivalent of a series of 5 back-and-forth text messages. I suspect the AIs would break down in longer conversations.

More noteworthy is that ChatGPT was explicitly instructed to play a little dumb. This of course lowers expectations of the people interacting with the AI. The full instruction prompt:

The Future of AI Testing

Maybe it's time to retire the Turing Test and introduce the Tinder Test. Jokes aside, I think one of the main things we need to keep in mind is that most people aren't particularly... impressive. We've shed a lot of blood, sweat, and tears to come up with the systems that allow us to cooperate and do great things as a collective in spite of all our incompetence and many, many individual flaws. And even then it's still an uphill battle in many...

I saw that bit as well; I assumed that was just a particularly fast-fingered interrogator. Really, they should have been clearer on that. Curious why they wouldn't ask it presumptive questions, like which part of Washington it grew up in, where it graduated, or how old it was when its mommy and daddy got divorced. The reason I think that is several fold...