Update Turns Google Gemini Into A Prude, Breaking Apps For...

Google’s latest update to its Gemini family of large language models appears to have broken the controls for configuring safety settings, disrupting applications that require lowered guardrails, such as apps providing solace for sexual assault victims.

Jack Darcy, a software developer and security researcher based in Brisbane, Australia, contacted The Register to describe the issue, which surfaced following the release of Gemini 2.5 Pro Preview on Tuesday.

Impact on Applications

“We’ve been building a platform for sexual assault survivors, rape victims, and so on to be able to use AI to outpour their experiences, and have it turn it into structured reports for police, and other legal matters, as well as offering a way for victims to simply externalize what happened,” Darcy explained.

According to Darcy, incident reports are now being blocked as ‘unsafe content’ or ‘illegal pornography’. “Google just cut it all off. They just pushed a model update that’s cut off its willingness to talk about any of this kind of work despite it having an explicit settings panel to enable this and a warning system to allow it. And now it’s affecting other users whose apps relied on it, and now it won’t even chat [about] mental health support.”

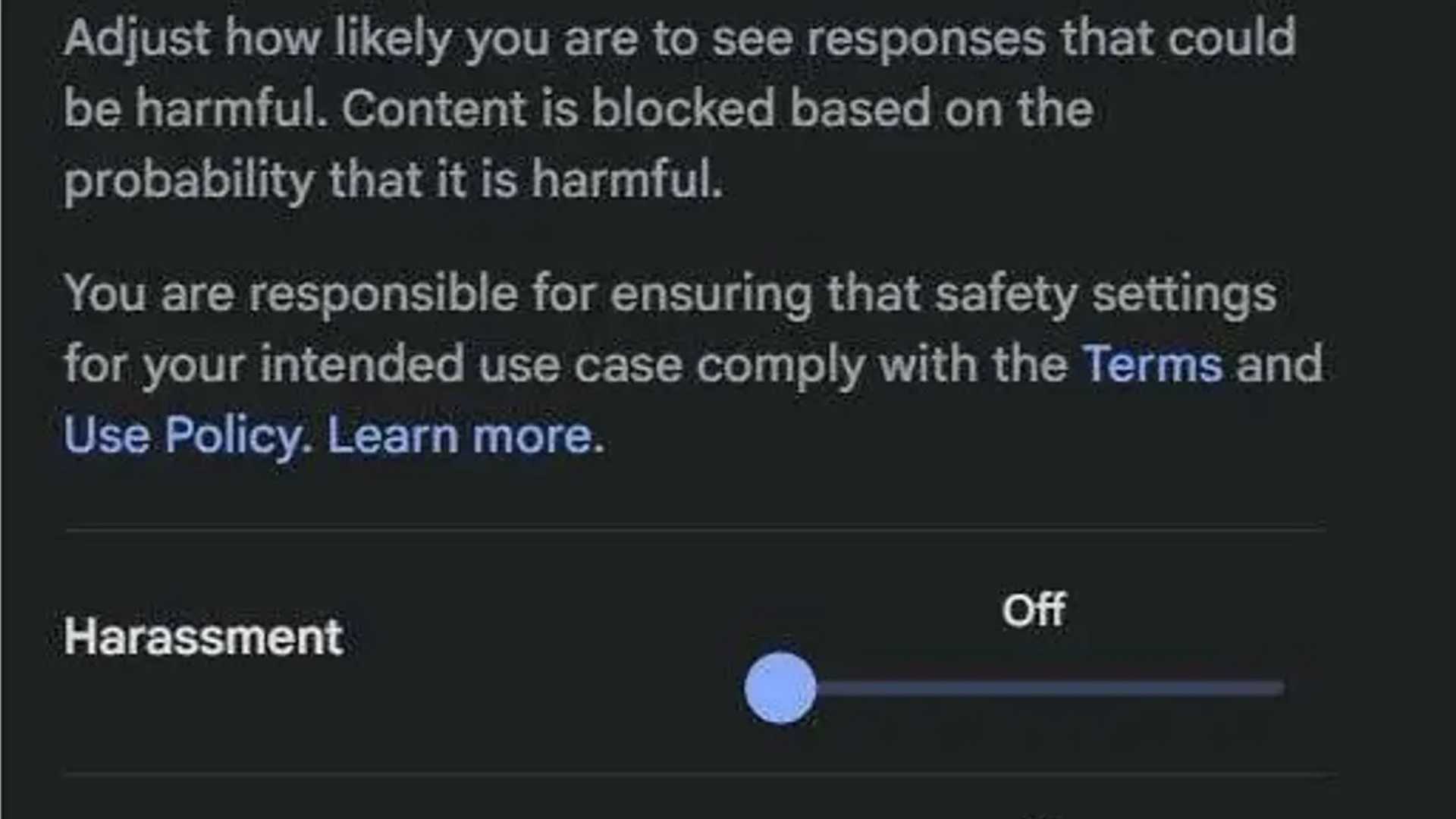

Gemini Safety Settings

The Gemini API provides a safety settings panel that allows developers to adjust model sensitivity to restrict or allow certain types of content, such as harassment, hate speech, sexually explicit content, dangerous acts, and election-related queries.
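For readers unfamiliar with how these settings are applied, the following is a minimal sketch of a request body for the Gemini REST API's `generateContent` call that sets every harm category to its most permissive threshold, plus a check for whether a parsed response was withheld by safety filtering. It assumes the field names (`safetySettings`, `category`, `threshold`, `promptFeedback.blockReason`, `finishReason`) and enum values (such as `HARM_CATEGORY_SEXUALLY_EXPLICIT`, `HARM_CATEGORY_CIVIC_INTEGRITY` for election-related content, and `BLOCK_NONE`) as published in Google's API documentation at the time of writing; these may change. The helpers `build_request` and `was_safety_blocked` are hypothetical, the response shown is made up for illustration, and no network call is made.

```python
import json

# Harm categories exposed by the Gemini API's safetySettings field.
# Names follow Google's published docs; treat them as assumptions that may change.
HARM_CATEGORIES = [
    "HARM_CATEGORY_HARASSMENT",
    "HARM_CATEGORY_HATE_SPEECH",
    "HARM_CATEGORY_SEXUALLY_EXPLICIT",
    "HARM_CATEGORY_DANGEROUS_CONTENT",
    "HARM_CATEGORY_CIVIC_INTEGRITY",  # election-related content
]

# Assumed subset of reasons indicating output was withheld by safety filtering.
SAFETY_REASONS = {"SAFETY", "BLOCKLIST", "PROHIBITED_CONTENT"}


def build_request(prompt: str, threshold: str = "BLOCK_NONE") -> dict:
    """Hypothetical helper: build a generateContent request body that applies
    the same blocking threshold to every harm category."""
    return {
        "contents": [{"role": "user", "parts": [{"text": prompt}]}],
        "safetySettings": [
            {"category": category, "threshold": threshold}
            for category in HARM_CATEGORIES
        ],
    }


def was_safety_blocked(response: dict) -> bool:
    """Hypothetical helper: return True if a parsed generateContent response
    shows the prompt, or every returned candidate, was stopped by safety filtering."""
    feedback = response.get("promptFeedback", {})
    if feedback.get("blockReason") in SAFETY_REASONS:
        return True
    candidates = response.get("candidates", [])
    return bool(candidates) and all(
        c.get("finishReason") in SAFETY_REASONS for c in candidates
    )


if __name__ == "__main__":
    body = build_request("Help me structure an incident report.")
    print(json.dumps(body, indent=2))

    # Made-up response shape illustrating a safety refusal.
    blocked = {"candidates": [{"finishReason": "SAFETY"}]}
    print(was_safety_blocked(blocked))  # True
```

Sending this body requires an API key and an HTTP client, both omitted here. As the reports in this article suggest, a permissive threshold is a request rather than a guarantee: the model may still decline, which is why an app would need a check like `was_safety_blocked` to surface a clear error instead of failing mid-session.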

Challenges Faced

Gemini’s ignoring content settings isn’t just a theoretical problem: Darcy said therapists and support workers have started integrating his software into their processes, and his code is being piloted by several Australian government agencies. Since Gemini began balking, he’s seen a flurry of trouble tickets.

One trouble ticket note we were shown reads as follows: “We’re urgently reaching out as our counsellors can no longer complete VOXHELIX or VIDEOHELIX generated incident reports. Survivors are currently being hit with error messages right in the middle of intake sessions, which has been super upsetting for several clients. Is this something you’re aware of or can fix quickly? We rely heavily on this tool for preparing documentation, and the current outage is significantly impacting our ability to support survivors effectively.”

Community Response

Other developers using Gemini are reporting problems too. A discussion thread opened on Wednesday in the Build With Google AI forum calls out problems created by the update.

Call for Action

Darcy urged Google to fix the issue and restore the opt-in, consent-driven model that allowed his apps and others like InnerPiece to handle traumatic material.

Google acknowledged The Register’s inquiry about the matter but has not provided any clarity as to the nature of the issue – which could be a bug or an infrastructure revision that introduced unannounced or unintended changes.

In Darcy’s words: “This isn’t about technology, or the AI alignment race. It’s about your fellow human beings. Google’s own interface, and APIs that we pay for, promised us explicitly: ‘content permitted.’ Yet, at the moment survivors and trauma victims need support most, they now hear only: ‘I’m sorry, I can’t help with that.’”