Building a product recommendation bot using Gemini, Part 1: Basics

Probably no other technology has impacted the tech world in the last few years more than LLMs. New creative applications emerge daily, but the most visible use of the technology is still the chatbot.

Chatbots have been around for a while, but the introduction of LLMs has revolutionized what these chatbots can do and how they are built. So, is building a chatbot as easy as it seems with LLMs?

In this series of posts, we will share our experience in building a product recommendation bot using Gemini and offer some valuable insights and ideas to streamline the process.

Defining the Project

Before delving into the technical details, it's crucial to establish a clear goal for the project. In our case, we will be focusing on developing a virtual sommelier to recommend wines, envisioning a scenario where:

- We run a wine store

- We leverage LLMs for wine recommendations

This approach can be adapted for various products and industries beyond wine, providing ample opportunities for testing LLM capabilities.

The Challenge of Training LLMs with Specific Data

LLMs are pre-trained on vast datasets, but they know nothing about our particular catalog. Training a model on our own data is impractical, so we need another way to get product information in front of it. Two common approaches are:

1. Context Windows

Modern LLMs like Gemini 1.5 Flash have context windows on the order of a million tokens, large enough to hold a substantial amount of data in a single prompt. This makes them candidates for receiving a product catalog directly.

However, sending the entire catalog with every request is inefficient: each call pays for the full set of input tokens, and latency grows with prompt size. Context caching can reduce this overhead by letting repeated requests reuse the same cached portion of the prompt.

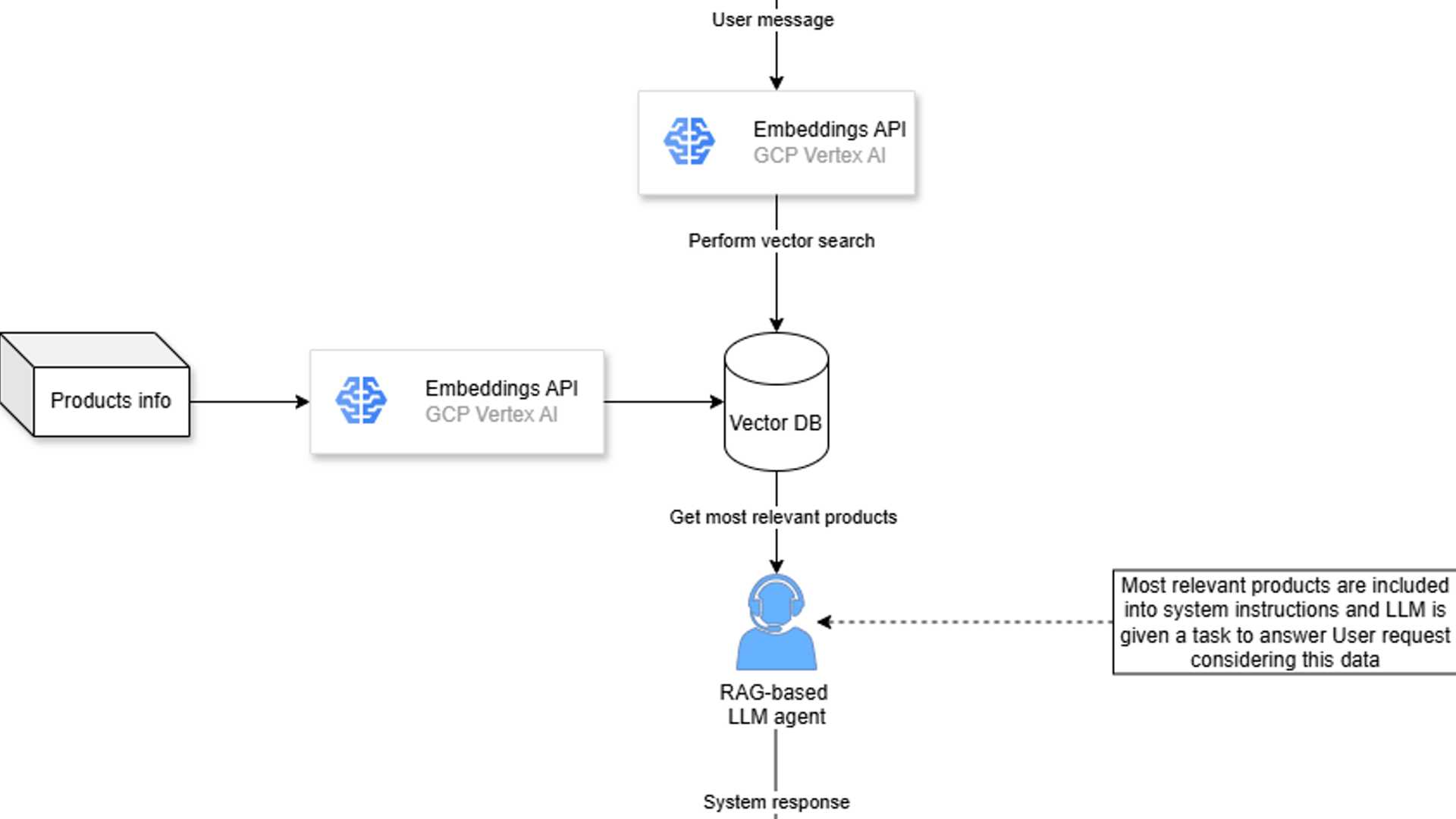

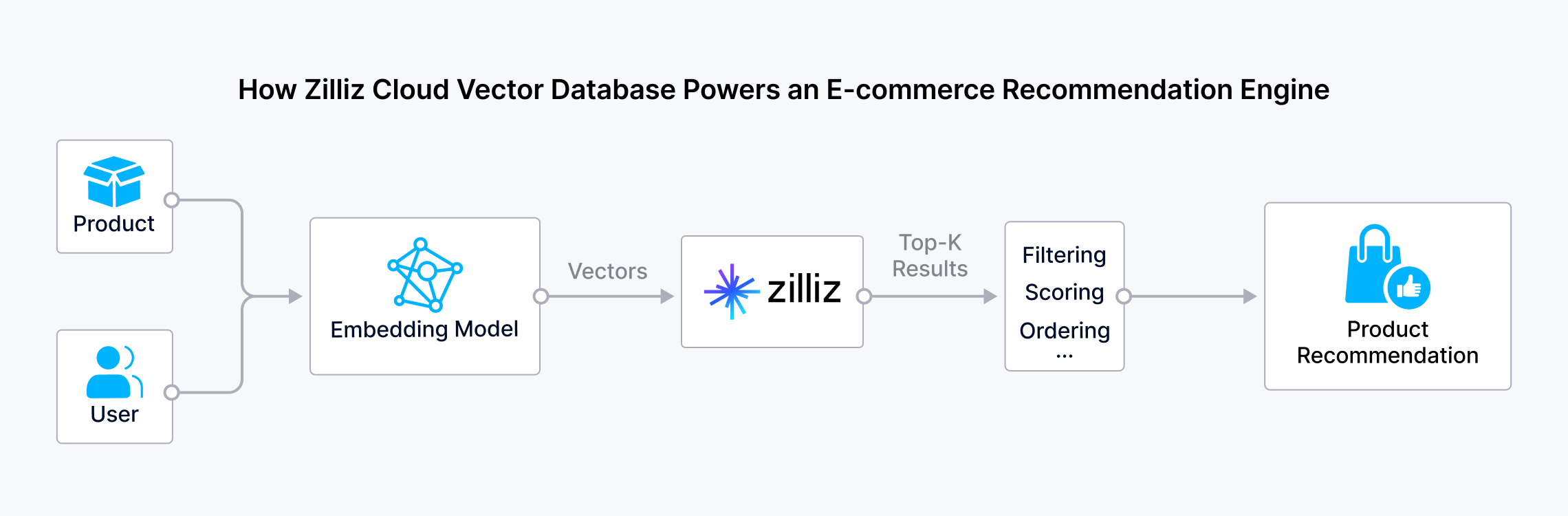
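To make the trade-off concrete, here is a rough back-of-the-envelope sketch of how much of the window a catalog would occupy on every request. The numbers are assumptions for illustration, not measurements: roughly 4 characters per token, a window of about one million tokens, and a hypothetical catalog of 2,000 wines.

```python
# Rough token budget check for putting a whole catalog into the prompt.
# Assumptions (not from any measurement): ~4 characters per token as a crude
# heuristic, and a context window of about 1,000,000 tokens.

CHARS_PER_TOKEN = 4          # crude heuristic, varies by language and tokenizer
CONTEXT_WINDOW = 1_000_000   # assumed window size in tokens


def estimate_tokens(text: str) -> int:
    """Approximate the token count of a text from its character length."""
    return max(1, len(text) // CHARS_PER_TOKEN)


def catalog_budget(descriptions: list[str], reserved_for_chat: int = 50_000) -> dict:
    """Estimate how much of the window the catalog would use on every request."""
    catalog_tokens = sum(estimate_tokens(d) for d in descriptions)
    available = CONTEXT_WINDOW - reserved_for_chat
    return {
        "catalog_tokens": catalog_tokens,
        "fits": catalog_tokens <= available,
        "share_of_window": catalog_tokens / CONTEXT_WINDOW,
    }


# Hypothetical catalog: 2,000 wines with 800-character descriptions each.
catalog = ["x" * 800 for _ in range(2_000)]
report = catalog_budget(catalog)
print(report)
```

Even when the catalog fits, those tokens are sent again with every request unless they are cached, which is the cost that motivates the second approach.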

2. Retrieval Augmented Generation (RAG)

RAG stores documents in a searchable index, retrieves the ones most relevant to a user query, and passes only those to the model as context. This is an effective way to bring proprietary data into the system and ground recommendations in it.

For our project, RAG would involve:

- Storing product descriptions

- Retrieving relevant products based on queries
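The store-then-retrieve flow above can be sketched in a few lines. This is a minimal illustration, not the implementation used in this series: the product names and descriptions are made up, and relevance is scored with simple word overlap as a stand-in for the vector similarity a real RAG setup would use.

```python
import re

# Hypothetical in-memory "document store": product name -> description.
PRODUCTS = {
    "Chateau Example Bordeaux": "Full-bodied red wine with blackcurrant and oak, pairs with steak",
    "Sample Valley Riesling": "Off-dry white wine with peach and citrus, pairs with spicy food",
    "Demo Coast Prosecco": "Light sparkling wine with green apple, good as an aperitif",
}


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def score(query: str, document: str) -> float:
    """Jaccard word overlap (a stand-in for embedding similarity)."""
    q, d = tokenize(query), tokenize(document)
    return len(q & d) / len(q | d) if q | d else 0.0


def retrieve(query: str, top_k: int = 2) -> list[str]:
    """Return the names of the top_k products most relevant to the query."""
    ranked = sorted(PRODUCTS, key=lambda name: score(query, PRODUCTS[name]), reverse=True)
    return ranked[:top_k]


def build_prompt(query: str) -> str:
    """Put only the retrieved products into the prompt sent to the LLM."""
    context = "\n".join(f"- {name}: {PRODUCTS[name]}" for name in retrieve(query))
    return (
        "You are a sommelier. Recommend from these wines only:\n"
        f"{context}\n\nCustomer: {query}"
    )


print(build_prompt("a white wine for spicy food"))
```

The prompt carries only a handful of products instead of the whole catalog, so its size stays roughly constant as the catalog grows.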

Creating Vector Embeddings for Products

One key step in this process is generating vector embeddings for products: converting each piece of text into a numerical vector so that semantically similar texts end up close together in vector space.
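As a small illustration of what "close together" means, the sketch below compares vectors with cosine similarity, a common choice for embeddings. The three-dimensional vectors are hand-picked for the example; real embeddings come from a model and have hundreds of dimensions.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Made-up vectors standing in for embeddings of three product descriptions.
merlot = [0.9, 0.1, 0.2]
cabernet = [0.8, 0.2, 0.1]
prosecco = [0.1, 0.9, 0.7]

red_vs_red = cosine_similarity(merlot, cabernet)
red_vs_sparkling = cosine_similarity(merlot, prosecco)
print(f"merlot vs cabernet: {red_vs_red:.3f}")
print(f"merlot vs prosecco: {red_vs_sparkling:.3f}")
```

With these toy values the two reds score higher against each other than the red does against the sparkling wine, which is the property retrieval relies on.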

The Vertex AI Embeddings API in GCP handles this task, offering several models and task types to match different needs.

When using the Embeddings API, considerations include:

- Choosing the appropriate model based on language requirements

- Selecting the task to generate embeddings for product information

- Adjusting parameters such as output dimensions
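The three choices above map onto a call like the following. Treat it as a hedged sketch rather than the exact code behind this series: the model name `text-embedding-004`, the 256-dimension output, and the shape of the product records are all assumptions, and the call requires the `google-cloud-aiplatform` package plus an initialized Vertex AI project.

```python
from typing import Sequence

# Assumed model name and output size; check which models your project can use.
MODEL_NAME = "text-embedding-004"
OUTPUT_DIMENSIONALITY = 256


def product_text(product: dict) -> str:
    """Flatten a (hypothetical) product record into the text to embed."""
    return f"{product['name']}. {product['description']}"


def embed_products(products: Sequence[dict]) -> list[list[float]]:
    """Embed product records as retrieval documents via the Vertex AI SDK.

    Assumes vertexai.init() has already been called with your project
    and location, and that credentials are configured.
    """
    # Imported here so product_text() works without the SDK installed.
    from vertexai.language_models import TextEmbeddingInput, TextEmbeddingModel

    model = TextEmbeddingModel.from_pretrained(MODEL_NAME)
    inputs = [
        # Task type for texts that will be stored and searched later.
        TextEmbeddingInput(text=product_text(p), task_type="RETRIEVAL_DOCUMENT")
        for p in products
    ]
    embeddings = model.get_embeddings(
        inputs, output_dimensionality=OUTPUT_DIMENSIONALITY
    )
    return [e.values for e in embeddings]
```

Under this setup, user queries would typically be embedded with the `RETRIEVAL_QUERY` task type instead, and a multilingual model would be the natural pick if product descriptions are not in English.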

With large context windows, RAG, and vector embeddings in hand, we have the basic building blocks for a product recommendation bot that answers from our own catalog. Stay tuned for the next part of this series, where we delve deeper into multi-agent systems and routing strategies.