Google's Gemini AI Faces Criticism For Bias In Image Generation ...

Google's Gemini AI has recently come under fire for bias in its image generation. The model, which creates realistic images from text descriptions, has been criticized for producing skewed and discriminatory results.

Issues with Bias

One of the main concerns with Gemini AI is its tendency to generate images that perpetuate stereotypes. For example, when given descriptions of professions, the AI often defaults to gendered roles, such as depicting a doctor as male and a nurse as female. This can reinforce harmful gender norms and limit the diversity of the generated images.

Another issue is racial bias in the AI's image generation. Gemini AI has been found to disproportionately represent certain racial groups in specific contexts, leading to inaccurate and discriminatory depictions. This can have serious consequences for the AI-powered tools and services built on such models.
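To make the idea of "disproportionate representation" concrete, here is a minimal sketch of how such skew could be measured. It assumes that a batch of generated images has already been annotated by hand with a perceived-group label per image. The function names, the prompts, and the sample labels below are all hypothetical and made up for illustration; they do not describe how Google or its critics evaluated Gemini.

```python
from collections import Counter


def group_shares(labels):
    """Return each label's share of the sample as a fraction of 1."""
    if not labels:
        raise ValueError("labels must not be empty")
    counts = Counter(labels)
    total = len(labels)
    return {group: n / total for group, n in counts.items()}


def dominant_share(labels):
    """Share held by the most frequent label; 1.0 means no variety at all."""
    return max(group_shares(labels).values())


# Hypothetical annotations for images generated from two prompts.
# These numbers are invented for the example, not measured results.
samples = {
    "a doctor": ["male"] * 9 + ["female"] * 1,
    "a nurse": ["female"] * 8 + ["male"] * 2,
}

for prompt, labels in samples.items():
    print(prompt, group_shares(labels), dominant_share(labels))
```

A dominant share close to 1.0 for a given prompt would suggest the kind of default the article describes. What counts as an acceptable value depends on the reference population chosen for comparison, which this sketch deliberately leaves open.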

Call for Transparency and Accountability

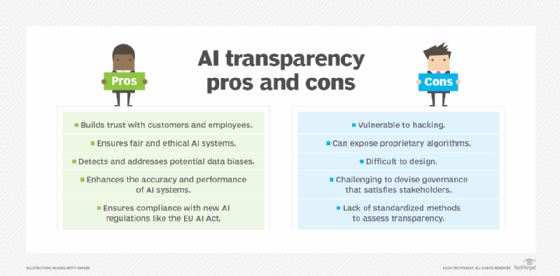

As concerns about bias in AI continue to grow, there have been calls for greater transparency and accountability in the development and deployment of such technologies. Critics argue that without proper safeguards and oversight, biased AI systems like Gemini AI can perpetuate discrimination and inequality.

Companies like Google are being urged to address the underlying issues with their AI algorithms and take steps to mitigate bias in image generation. This includes diversifying the training data, implementing fairness and accountability measures, and involving diverse voices in the development process.

Looking to the Future

Despite the criticism, Google's Gemini AI technology also has the potential to drive positive change and innovation in the field of image generation. By addressing the bias issues and prioritizing fairness and inclusivity, AI developers can ensure that their technologies benefit all users equally.

It is crucial for companies and researchers to continue exploring ways to make AI systems more ethical, transparent, and accountable. Only through concerted efforts toward bias mitigation, diversity, and inclusion can the full potential of AI be realized responsibly.