Fine-tuning GPT-2 for Curriculum Content | Sep ...

Imagine an AI model running on your laptop, trained on a curriculum document. You could ask it to generate lesson plans, classroom activities, and teacher resources. I recently set out to build exactly this (with partial success) by fine-tuning GPT-2 for curriculum content generation. A secondary goal was to learn more about Hugging Face and how to fine-tune its open models. This article details my experience, from setup to results, including the challenges I faced and the lessons I learned.

Key Technologies

Hugging Face is a company that developed a popular library called Transformers. The library provides pre-trained models for NLP tasks such as text generation, translation, and summarization. It runs on top of PyTorch or TensorFlow (and JAX for many models), which makes state-of-the-art NLP models much easier for developers to use.

GPT-2 (Generative Pre-trained Transformer 2) is a language model developed by OpenAI. It was trained on a broad corpus of internet text and can generate coherent paragraphs. Larger versions exist (up to 1.5B parameters), but I chose to work with the smallest version (124M parameters) because of computational constraints. Note that while GPT-2 was developed by OpenAI, the version I used is distributed through Hugging Face and is free to use.
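To make the "124M" figure concrete, the sketch below recomputes it from the standard GPT-2 small configuration (50,257-token vocabulary, 1,024-token context, 768-dimensional embeddings, 12 transformer blocks). The function name `gpt2_param_count` is illustrative, and it assumes, as in the released model, that the output layer shares its weights with the token embeddings.

```python
def gpt2_param_count(vocab_size=50257, n_positions=1024, n_embd=768, n_layer=12):
    """Count trainable parameters of a GPT-2 model with tied input/output embeddings."""
    token_embeddings = vocab_size * n_embd        # wte: one vector per vocabulary entry
    position_embeddings = n_positions * n_embd    # wpe: one vector per context position
    layer_norm = 2 * n_embd                       # scale (gamma) + shift (beta)

    # Attention: fused query/key/value projection plus an output projection, each with a bias.
    attn_qkv = n_embd * 3 * n_embd + 3 * n_embd
    attn_out = n_embd * n_embd + n_embd
    # Feed-forward: expand to 4x the width, then project back, each with a bias.
    mlp_in = n_embd * 4 * n_embd + 4 * n_embd
    mlp_out = 4 * n_embd * n_embd + n_embd

    per_block = 2 * layer_norm + attn_qkv + attn_out + mlp_in + mlp_out
    final_layer_norm = layer_norm
    # The language-model head reuses the token-embedding matrix, so it adds no parameters.
    return token_embeddings + position_embeddings + n_layer * per_block + final_layer_norm


print(f"{gpt2_param_count():,}")  # 124,439,808
```

Loading the real checkpoint with `GPT2LMHeadModel.from_pretrained("gpt2")` and summing `p.numel()` over `model.parameters()` should report the same total. Roughly 31% of those parameters sit in the token-embedding table alone.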

PyTorch is an open-source machine learning library developed by Facebook’s AI Research lab. It’s known for its ease of use and dynamic computational graphs, making it popular for research and development in deep learning.

Project Overview

Since starting my new role as AI product lead at Cambridge, I’ve been intrigued by AI’s potential to assist in creating educational content, similar to how a publisher would commission an author to write a textbook. The idea of fine-tuning GPT-2 for curriculum content generation stemmed from a desire to explore how modern NLP models could be adapted to produce grade-specific, subject-oriented material. My goal was to create a tool that could assist teachers in generating lesson plans, study guides, and other educational resources, potentially saving time and offering fresh approaches to curriculum delivery.

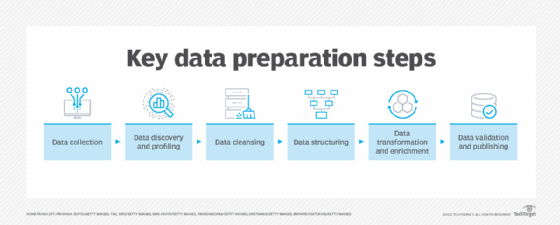

Data Preparation

The data preparation stage was both more time-consuming and more important than I had anticipated. I started with a curriculum document in PDF format (a scanned document, which was a nightmare!), and it presented several challenges:

- Challenge 1

- Challenge 2

- Challenge 3

The entire data preparation process took approximately 3 hours (for a relatively small dataset), largely due to the iterative nature of cleaning and formatting the text data.
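The specific challenges aren't listed above, so the following is a hypothetical sketch of the kind of clean-up that OCR text from a scanned PDF typically needs: stripping page-number lines, re-joining words hyphenated across line breaks, and collapsing hard line wraps into paragraphs. It assumes the text has already been extracted from the PDF, and the function name and rules are illustrative assumptions rather than the exact steps used for this project.

```python
import re


def clean_ocr_text(raw: str) -> str:
    """Tidy text extracted from a scanned PDF (illustrative rules, not exhaustive)."""
    text = raw.replace("\r\n", "\n")
    # Remove lines holding only a page number, such as "12" or "Page 12".
    text = re.sub(r"(?im)^[ \t]*(?:page[ \t]+)?\d+[ \t]*(?:\n|$)", "", text)
    # Re-join words hyphenated across a line break: "curri-\nculum" -> "curriculum".
    text = re.sub(r"(\w)-\n(\w)", r"\1\2", text)
    # Blank lines mark paragraph breaks; any other line break is just OCR wrapping.
    paragraphs = re.split(r"\n\s*\n", text)
    paragraphs = [" ".join(p.split()) for p in paragraphs]
    return "\n\n".join(p for p in paragraphs if p)


print(clean_ocr_text("The curri-\nculum covers\nfractions.\n12\nNext topic"))
# The curriculum covers fractions. Next topic
```

The hyphen rule is deliberately blunt: it will also merge genuine compounds that happen to break at a line end (for example "grade-specific"), which is one reason this stage tends to need several rounds of inspection.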

Fine-tuning GPT-2 Model

With the data prepared, I moved on to fine-tuning the GPT-2 model. This involved several steps:

- Step 1

- Step 2

- Step 3
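The individual steps aren't spelled out above. A common shape for this stage is to tokenize the cleaned text into fixed-length blocks of token IDs, load the pretrained `gpt2` checkpoint, and train it with a causal language-modelling loss. Below is a minimal sketch of only the training part, written as a plain PyTorch loop; Hugging Face's `Trainer` class is the higher-level alternative. The `fine_tune` helper and its default hyperparameters are assumptions for illustration.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset


def fine_tune(model, input_ids: torch.Tensor, epochs: int = 1, batch_size: int = 2,
              lr: float = 5e-5, device: str = "cpu") -> list:
    """Minimal causal-language-model fine-tuning loop; returns the loss at each step."""
    model.to(device)
    model.train()
    optimizer = torch.optim.AdamW(model.parameters(), lr=lr)
    # Each row of input_ids is one fixed-length block of token IDs.
    loader = DataLoader(TensorDataset(input_ids), batch_size=batch_size, shuffle=True)
    losses = []
    for _ in range(epochs):
        for (batch,) in loader:
            batch = batch.to(device)
            # For GPT-2, labels=input_ids is enough: the model shifts the labels
            # internally so each position is trained to predict the next token.
            loss = model(input_ids=batch, labels=batch).loss
            loss.backward()
            optimizer.step()
            optimizer.zero_grad()
            losses.append(loss.item())
    return losses
```

For a real run, `model` would be `GPT2LMHeadModel.from_pretrained("gpt2")` and `input_ids` a 2-D tensor of equal-length rows produced by `GPT2TokenizerFast.from_pretrained("gpt2")`. Watching the returned losses fall is a quick sanity check before judging the generated text itself.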

During this process, I encountered memory errors due to the limited RAM on my MacBook Air. I resolved this by implementing the following strategies:

- Strategy 1

- Strategy 2

- Strategy 3
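The strategies themselves aren't listed, but a back-of-the-envelope estimate shows why a laptop struggles. Assuming full fine-tuning in 32-bit floats with the AdamW optimizer, every parameter needs storage for its weight, its gradient, and two optimizer statistics, before any activations are counted. The helper names below are hypothetical, and the figures cover only the model's static footprint.

```python
def training_memory_gib(n_params: int, bytes_per_value: int = 4) -> dict:
    """Rough static memory (GiB) for full fine-tuning with AdamW; activations excluded."""
    gib = 1024 ** 3
    weights = n_params * bytes_per_value        # the model itself
    gradients = n_params * bytes_per_value      # one gradient per parameter
    # AdamW keeps two running statistics (first and second moments) per parameter.
    optimizer_state = 2 * n_params * bytes_per_value
    parts = {
        "weights": weights,
        "gradients": gradients,
        "optimizer": optimizer_state,
        "total": weights + gradients + optimizer_state,
    }
    return {name: round(size / gib, 2) for name, size in parts.items()}


def effective_batch_size(micro_batch: int, accumulation_steps: int) -> int:
    """With gradient accumulation, several small batches feed one optimizer step."""
    return micro_batch * accumulation_steps


print(training_memory_gib(124_439_808))
# {'weights': 0.46, 'gradients': 0.46, 'optimizer': 0.93, 'total': 1.85}
print(effective_batch_size(micro_batch=1, accumulation_steps=8))  # 8
```

Activation memory comes on top of this and grows with both batch size and sequence length. That is why the usual remedies for out-of-memory errors are a smaller per-step batch (with gradient accumulation to preserve the effective batch size), shorter training sequences, and gradient checkpointing; these are common options in general, not necessarily the three used here.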

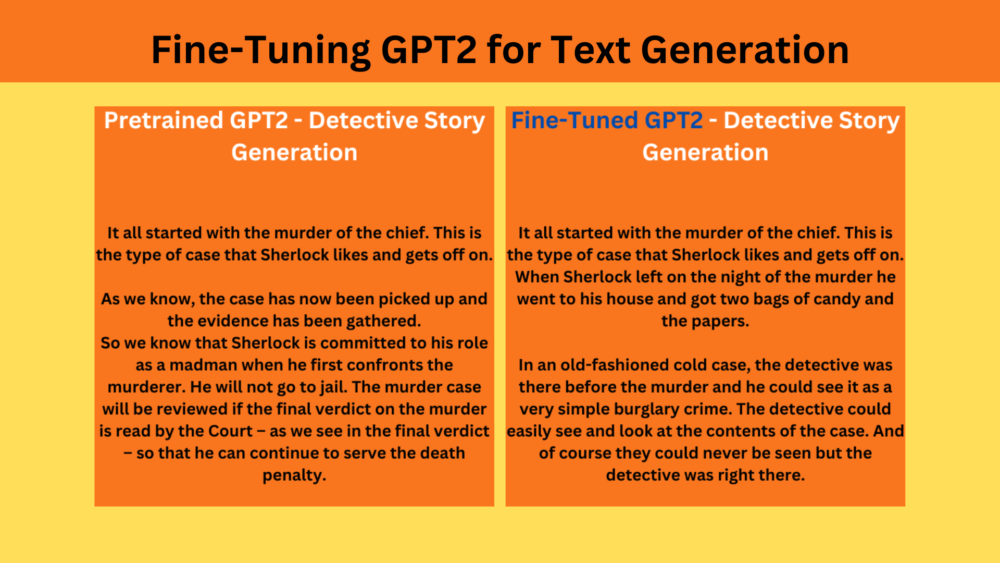

Results and Improvements

The initial results were disappointing. The model often produced irrelevant or nonsensical content. To improve this, I implemented several strategies:

- Strategy 1

- Strategy 2

- Strategy 3
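The improvement strategies aren't listed, so this covers only one common lever for irrelevant or rambling output: the decoding settings. Hugging Face's `generate` method accepts `temperature`, `top_k`, and `top_p` arguments (together with `do_sample=True`). The sketch below re-implements that style of filtering in plain Python to show roughly what those knobs do; the helper names are hypothetical and this is not the library's own code.

```python
import math
import random
from typing import Optional


def filter_top_k_top_p(logits, temperature=0.8, top_k=50, top_p=0.9):
    """Return {token_id: probability} after temperature, top-k and nucleus (top-p) filtering."""
    scaled = [x / temperature for x in logits]
    # Softmax, subtracting the maximum for numerical stability.
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    ranked = sorted(((i, e / total) for i, e in enumerate(exps)),
                    key=lambda pair: pair[1], reverse=True)
    ranked = ranked[:top_k]  # keep only the k most likely tokens
    kept, cumulative = [], 0.0
    for token_id, p in ranked:
        kept.append((token_id, p))
        cumulative += p
        if cumulative >= top_p:  # smallest set whose probability mass reaches top_p
            break
    mass = sum(p for _, p in kept)
    return {token_id: p / mass for token_id, p in kept}  # renormalise the survivors


def sample_next_token(logits, rng: Optional[random.Random] = None, **kwargs) -> int:
    """Draw one token ID from the filtered distribution."""
    dist = filter_top_k_top_p(logits, **kwargs)
    rng = rng or random.Random()
    return rng.choices(list(dist), weights=list(dist.values()), k=1)[0]
```

Lower temperatures and a smaller `top_p` make the output more conservative; higher values make it more varied but also more prone to drifting off-topic.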

For future attempts, I plan to:

- Plan 1

- Plan 2

- Plan 3

Conclusion

While my first foray into fine-tuning GPT-2 didn’t produce the curriculum-generation wizard I’d hoped for, it was an invaluable learning experience. It highlighted the complexities of working with language models and the importance of high-quality, relevant training data. For those considering similar projects, start small, be patient, and be prepared to iterate. These models’ potential is enormous, but harnessing it effectively requires persistence, careful planning, and a willingness to learn from failures.

For those interested in scaling up similar projects, here are some cloud computing options to consider:

- Option 1

- Option 2

- Option 3

When choosing a platform, consider factors like cost, ease of use, available compute resources, and integration with your existing workflow.

Here’s my project code on GitHub: github.com