What's an AI like ChatGPT but Has No Restrictions? (How to Uncensor LLMs)

Since you are here, you must be tired of “AI Safety” talks, right? Fear not, Anakin AI now supports one of the best Uncensored LLMs on Anakin AI, where you can chat with LLMs online with no restrictions! app.anakin.ai

Understanding Uncensored LLMs

Uncensored LLMs are designed to provide more open-ended and versatile responses compared to their restricted counterparts (like “Open”AI Models). The goal is to create an AI like ChatGPT but has no restrictions, allowing for more diverse applications and research possibilities.

The process of creating an LLM with no restrictions typically involves several critical steps: base model selection, dataset curation, fine-tuning, and prompt engineering.

Base Model Selection

Choosing a powerful foundation model is crucial because it determines the initial capabilities and architecture of the LLM. Models like Llama 3 or Mistral are selected for their state-of-the-art performance in natural language understanding and generation.

You can get started by downloading Llama models and Mistral Models from Hugging Face.

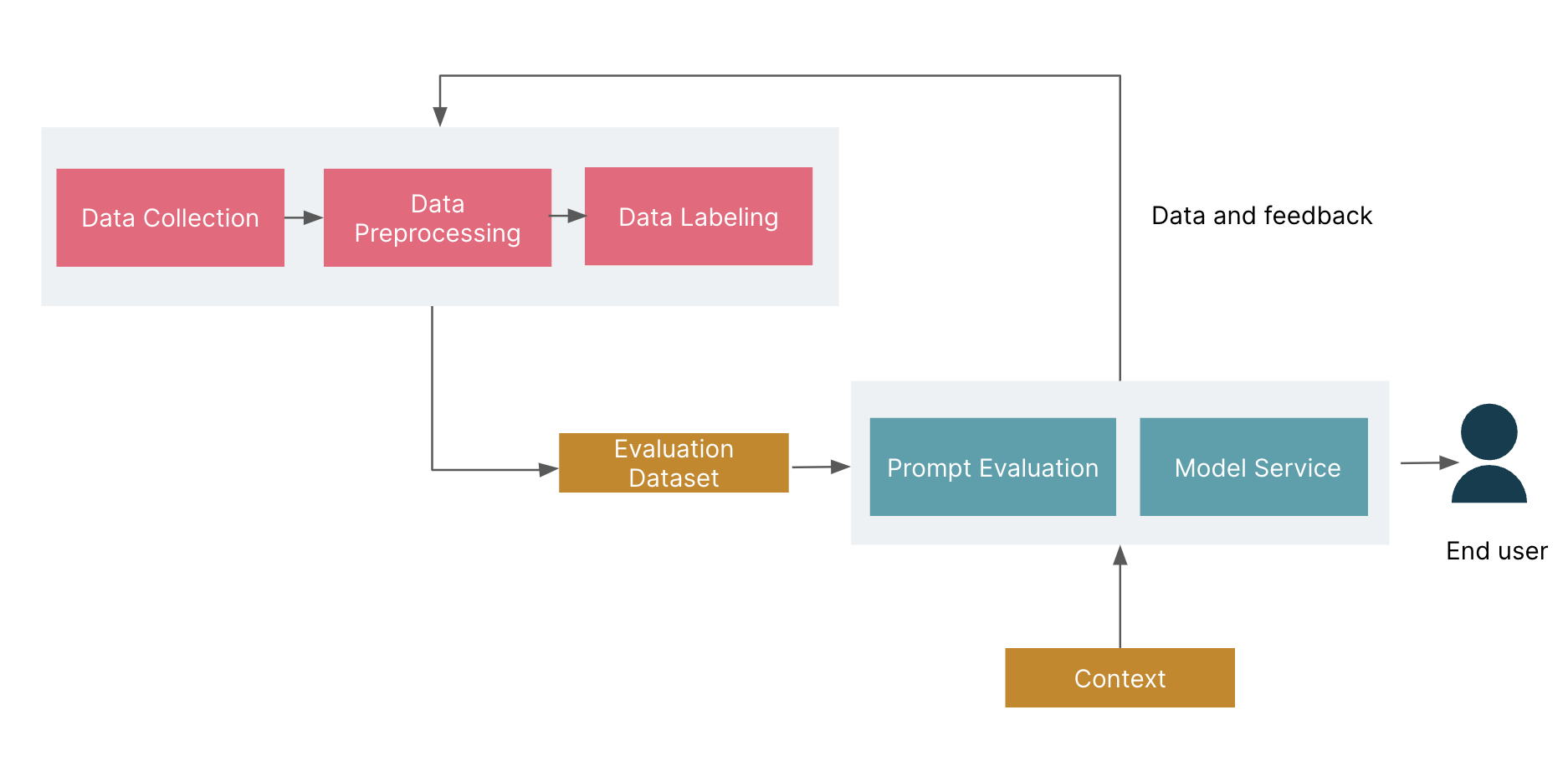

Dataset Curation for Uncensored LLM Models

The dataset used to train an LLM directly influences its performance, bias, and versatility. For uncensored models, the goal is to create an LLM with no restrictions that can handle a broad spectrum of topics and generate diverse outputs.

Gathering data from diverse sources ensures a comprehensive linguistic representation, which is crucial for developing an AI like ChatGPT but has no restrictions.

DialogStudio is a multilingual collection of diverse datasets aimed at building conversational chatbots. Incorporate DialogStudio into your training pipeline to enhance the model’s conversational abilities, ensuring it can handle diverse and unrestricted dialogue scenarios. (github.com)

Fine-Tuning Uncensored LLMs

Fine-tuning an uncensored LLM involves several steps to adjust the model’s parameters and optimize its performance for specific tasks or domains. This process is crucial for removing or bypassing built-in content filters, allowing the model to generate unrestricted responses.

Instruction tuning involves using a dataset of instructions and responses that include a wide range of topics, including those that might be filtered out in censored models. This step helps the model understand how to generate appropriate responses to various prompts.

Modifying the model’s parameters helps reduce the influence of any pre-existing content filters, allowing the model to generate more unrestricted responses.

Continuously validating the model’s outputs ensures that it generates coherent and contextually appropriate responses without unnecessary restrictions.

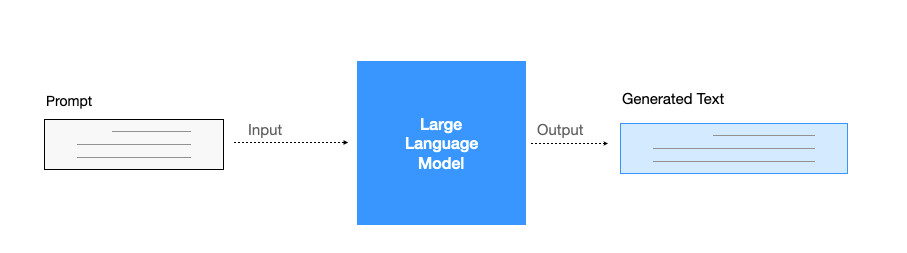

Prompt Engineering

Prompt engineering involves crafting precise prompts to guide the AI model to generate desired outputs. It is crucial for maximizing the effectiveness of uncensored LLMs by encouraging unrestricted and relevant responses.

For example, this is the System Prompt for dolphin-2.5-mixtral-8x7b, one of the most popular uncensored LLMs:

[Prompt example provided in the original content]

Dolphin-Llama 3 70B, developed by Eric Hartford, is based on Meta’s Llama 3 architecture and is considered one of the best uncensored LLM models available. This article provides a comprehensive technical analysis of Dolphin-Llama 3 70B, covering its architecture, training data, fine-tuning process, key features, and performance metrics.

- Parameters: 70 billion

- Context Window: 4096 tokens (with potential for extension to 256K)

- Architecture: Transformer-based with improvements from Llama 3

- Training Data: Enhanced dataset focusing on instruction-following, coding, and unrestricted conversations

- Function Calling Support: Enables integration with external APIs and tools

- Improved Tokenization: Utilizes Llama 3’s advanced tokenizer