OpenAI placing watermarks on DALL-E 3 images to combat AI-generated misinformation

OpenAI has announced that it will add digital watermarks to images generated by its DALL-E 3 model, a move aimed at curbing AI-generated misinformation. The initiative responds to growing concern about the spread of false information, particularly ahead of the upcoming US elections.

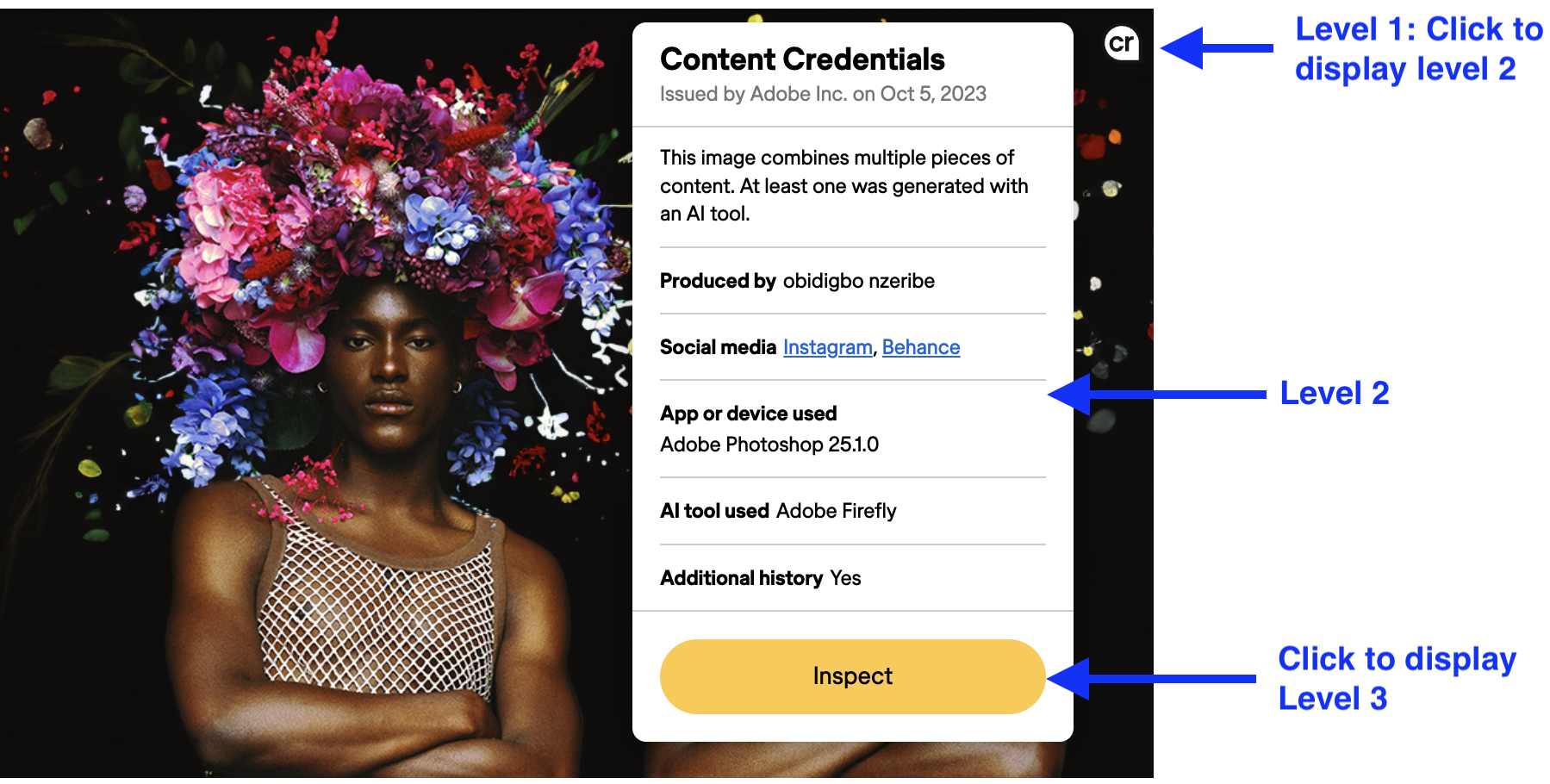

The new watermarks were developed in collaboration with the Coalition for Content Provenance and Authenticity (C2PA). Their primary goal is to give users a way to verify where an AI-generated image came from, and in doing so to build greater trust in digital content.

Enhancing Verification through Watermarks

The watermarks will be embedded in the metadata of images generated with DALL-E 3, and OpenAI plans to extend the feature to mobile users by 12 February. To check an image's source, users can turn to services such as Content Credentials Verify.

Despite this positive step, OpenAI acknowledges that the watermarks are not a foolproof solution. Because the provenance data lives in the image's metadata, it can be removed accidentally or intentionally, for example when someone takes a screenshot or a platform strips metadata on upload. That fragility limits how effective the watermarks can be against misinformation.
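To illustrate why metadata-based marks are fragile, here is a minimal Python sketch that builds a tiny PNG carrying a stand-in provenance chunk and then re-saves it the way a naive tool might, keeping only the PNG's critical chunks. It assumes that a C2PA manifest in a PNG travels in an ancillary chunk of type `caBX` (worth confirming against the C2PA specification). The helper names `build_demo_png`, `list_chunks` and `strip_ancillary` are hypothetical, and the manifest bytes are placeholders, not a real signed C2PA manifest.

```python
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def make_chunk(chunk_type: bytes, data: bytes) -> bytes:
    """Serialize one PNG chunk: 4-byte length, 4-byte type, data, CRC over type+data."""
    crc = zlib.crc32(chunk_type + data) & 0xFFFFFFFF
    return struct.pack(">I", len(data)) + chunk_type + data + struct.pack(">I", crc)


def build_demo_png(provenance: bytes) -> bytes:
    """Build a 1x1 grayscale PNG that carries a placeholder provenance chunk."""
    # IHDR: width=1, height=1, bit depth 8, color type 0 (grayscale), no interlace
    ihdr = struct.pack(">IIBBBBB", 1, 1, 8, 0, 0, 0, 0)
    idat = zlib.compress(b"\x00\x80")  # filter byte 0, then one mid-gray pixel
    return (
        PNG_SIGNATURE
        + make_chunk(b"IHDR", ihdr)
        + make_chunk(b"caBX", provenance)  # ASSUMPTION: chunk type used for C2PA data
        + make_chunk(b"IDAT", idat)
        + make_chunk(b"IEND", b"")
    )


def list_chunks(png: bytes) -> list[str]:
    """Return the chunk types of a PNG file in order."""
    if png[:8] != PNG_SIGNATURE:
        raise ValueError("not a PNG file")
    types, pos = [], 8
    while pos < len(png):
        (length,) = struct.unpack(">I", png[pos:pos + 4])
        types.append(png[pos + 4:pos + 8].decode("ascii"))
        pos += 12 + length  # length field + type + data + CRC
    return types


def strip_ancillary(png: bytes) -> bytes:
    """Keep only critical chunks, as a careless re-encode or export might.

    In PNG, bit 5 of the first type byte (lowercase letter) marks a chunk
    as ancillary, so the placeholder 'caBX' chunk is silently dropped.
    """
    out, pos = [PNG_SIGNATURE], 8
    while pos < len(png):
        (length,) = struct.unpack(">I", png[pos:pos + 4])
        chunk = png[pos:pos + 12 + length]
        if not chunk[4] & 0x20:  # uppercase first letter means critical
            out.append(chunk)
        pos += 12 + length
    return b"".join(out)


if __name__ == "__main__":
    original = build_demo_png(b"placeholder manifest bytes")
    print(list_chunks(original))  # ['IHDR', 'caBX', 'IDAT', 'IEND']
    stripped = strip_ancillary(original)
    print(list_chunks(stripped))  # ['IHDR', 'IDAT', 'IEND']
```

The pixel data survives untouched while the provenance chunk disappears, which is the accidental-removal risk described above. A verifier such as Content Credentials Verify would then have nothing to check.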

Industry Response and Challenges

The rise of AI-generated misinformation has been a pressing issue, manifesting in various forms such as fabricated audio clips and deepfake videos featuring public figures. Meta, the parent company of Facebook and Instagram, has also committed to labeling AI-generated images on its platforms to counteract misinformation.

However, experts caution that digital watermarks alone cannot address the many forms misinformation takes. Studies have shown that many existing watermarking techniques can be circumvented by malicious actors.

Promoting Collaborative Efforts

OpenAI's adoption of digital watermarks underscores the importance of collective action against misinformation. Although the approach has acknowledged limitations, it is a proactive step toward greater transparency and accountability in the digital realm.
