Gemini API 102: Next steps beyond "Hello World!" - DEV Community

The previous post in this (short) series introduced developers to the Gemini API with a more user-friendly and useful "Hello World!" sample than the one in the official Google documentation. The next steps enhance that example to explore a few more Gemini API features: streaming output, multi-turn conversations (chat), upgrading to the latest 1.0 or even 1.5 API versions, and multimodal input. Stick around to find out how!

Are you a developer interested in using Google APIs? You're in the right place, as this blog is dedicated to that craft from Python and sometimes Node.js. Previous posts showed you how to use Google credentials like API keys or OAuth client IDs with Google Workspace (GWS) APIs. Other posts introduced serverless computing or showed you how to export Google Docs as PDF. The previous post kicked off the conversation about generative AI, presenting "Hello World!" examples that help you get started with the Gemini API in a more user-friendly way than the docs do. It presented samples showing you how to use the API from both Google AI and GCP Vertex AI.

Overview

This post follows up with samples that upgrade to the latest 1.0 and 1.5 models (the latter in public preview at the time of this writing), support streaming output, leverage multi-turn conversations ("chat"), and finally, explore multimodality.

Implementing Streaming Output

Another easy update is to switch to streaming output. Switching to streaming requires only passing the stream=True flag to the model's generate_content() method. A loop then displays the chunks of data returned by the LLM as they come in.
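A minimal sketch of that streaming loop follows. It assumes the google-generativeai Python package and an API key stored in a GOOGLE_API_KEY environment variable; both are assumptions, and the prompt and the show_stream() helper are hypothetical, not taken from the original script. The SDK import sits inside main() so the display helper can be tried without the SDK installed.

```python
import os

MODEL = 'gemini-1.0-pro-latest'               # latest 1.0 model named in this post
PROMPT = 'Describe a cat in a few sentences'  # hypothetical prompt


def show_stream(chunks, out=print):
    """Print each chunk's text as it arrives and return the assembled reply."""
    parts = []
    for chunk in chunks:
        out(chunk.text, end='', flush=True)  # no newline, so chunks join up on screen
        parts.append(chunk.text)
    out()  # end the line once the stream is exhausted
    return ''.join(parts)


def main():
    # Imported here so show_stream() can be tried without the SDK installed.
    import google.generativeai as genai
    genai.configure(api_key=os.environ['GOOGLE_API_KEY'])  # assumed env var name
    model = genai.GenerativeModel(MODEL)
    # stream=True returns an iterable of partial responses instead of one reply.
    show_stream(model.generate_content(PROMPT, stream=True))


if __name__ == '__main__':
    if os.environ.get('GOOGLE_API_KEY'):
        main()
    else:
        print('Set GOOGLE_API_KEY to call the Gemini API.')
```

Returning the assembled text as well as printing it lets a caller keep the full reply once streaming ends.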

Upgrading API Versions

The simplest update is to upgrade the API version. The original Gemini 1.0 model was named gemini-pro. It was soon superseded by gemini-1.0-pro and, after that, by the latest version, gemini-1.0-pro-latest.

The one-line upgrade was effected by updating the MODEL variable. This "delta" version is available in the repo as gemtxt-simple10-gai.py. Executing it results in output similar to the original version.
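As a rough illustration of that one-line change, here is a sketch that assumes the google-generativeai package and a GOOGLE_API_KEY environment variable (both assumptions; it is not necessarily how gemtxt-simple10-gai.py is written). Only the MODEL assignment differs between versions; the older names stay as comments for reference, and the prompt is hypothetical.

```python
import os

# The one-line upgrade: only this assignment changes between versions.
# MODEL = 'gemini-pro'              # original Gemini 1.0 model name
# MODEL = 'gemini-1.0-pro'          # versioned name that superseded it
MODEL = 'gemini-1.0-pro-latest'     # latest 1.0 model at the time of writing
# MODEL = 'gemini-1.5-pro-latest'   # only if you have 1.5 (preview) access
PROMPT = 'Describe a cat in a few sentences'  # hypothetical prompt


def main():
    # Imported here so this file loads even without the SDK installed.
    import google.generativeai as genai
    genai.configure(api_key=os.environ['GOOGLE_API_KEY'])  # assumed env var name
    model = genai.GenerativeModel(MODEL)
    response = model.generate_content(PROMPT)
    print(response.text)


if __name__ == '__main__':
    if os.environ.get('GOOGLE_API_KEY'):
        main()
    else:
        print('Set GOOGLE_API_KEY to call the Gemini API.')
```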

If you have access to the 1.5 API, update MODEL to gemini-1.5-pro-latest. The remaining samples below stay with the latest 1.0 model as 1.5 is still in preview.
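Multi-turn conversations ("chat"), listed among this post's topics, build on the same model object. The sketch below is a minimal take under stated assumptions: the google-generativeai package, its GenerativeModel.start_chat() and ChatSession.send_message() methods, and the same assumed GOOGLE_API_KEY environment variable. The user turns and the run_chat() helper are hypothetical.

```python
import os

MODEL = 'gemini-1.0-pro-latest'  # latest 1.0 model named in this post
# Hypothetical user turns; the second only makes sense given the first.
TURNS = [
    'Name three famous cats from fiction.',
    'Which of those appeared first?',
]


def run_chat(chat, turns, out=print):
    """Send each turn through one chat session; print and return the replies."""
    replies = []
    for msg in turns:
        reply = chat.send_message(msg).text  # the session records msg and reply
        out(f'USER:  {msg}')
        out(f'MODEL: {reply}')
        replies.append(reply)
    return replies


def main():
    # Imported here so run_chat() can be tried without the SDK installed.
    import google.generativeai as genai
    genai.configure(api_key=os.environ['GOOGLE_API_KEY'])  # assumed env var name
    model = genai.GenerativeModel(MODEL)
    chat = model.start_chat()  # starts with empty history; grows each turn
    run_chat(chat, TURNS)


if __name__ == '__main__':
    if os.environ.get('GOOGLE_API_KEY'):
        main()
    else:
        print('Set GOOGLE_API_KEY to call the Gemini API.')
```

Because the chat session sends its accumulated history along with each new message, the second question can refer to "those" without repeating the first answer.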

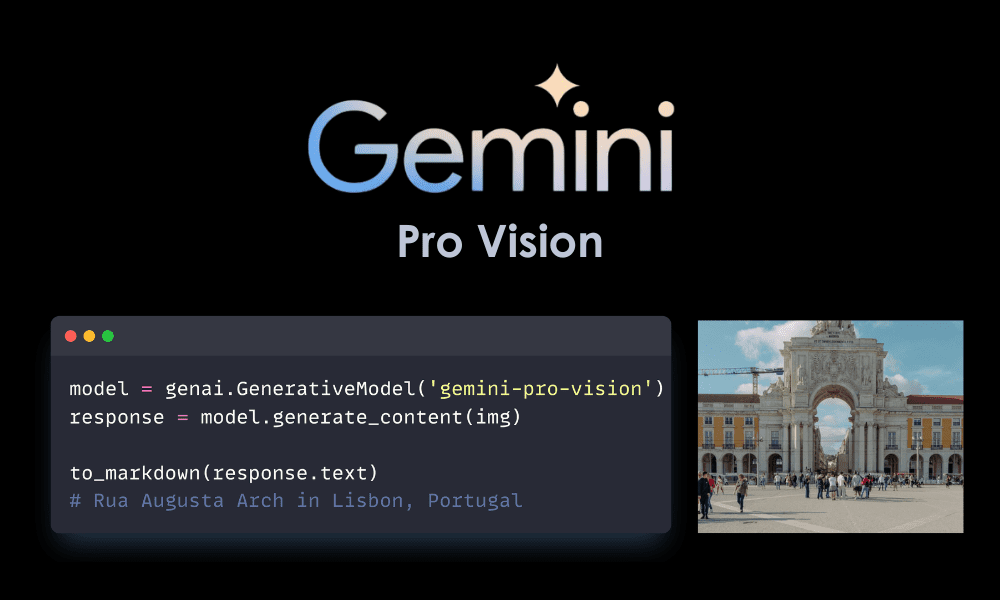

Exploring Multimodality with Gemini API

So far, all of the enhancements and corresponding samples are text-based, single-modality requests. A whole new class of functionality becomes available when a model can accept other kinds of data in addition to text, such as images, audio, or video.

Analyzing Local and Online Images

The next sample script takes an image from the local filesystem (specifically, this image) and asks the LLM for some information about it.
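A minimal sketch of such a multimodal request follows, assuming the google-generativeai package and a GOOGLE_API_KEY environment variable. The model name gemini-1.0-pro-vision-latest is an assumption (a vision-capable 1.0 variant, since the text-only 1.0 model doesn't take images), and the file name, prompt, and image_part() helper are hypothetical. The image goes in as raw bytes plus a MIME type, one of the input forms the SDK accepts.

```python
import mimetypes
import os
import pathlib

MODEL = 'gemini-1.0-pro-vision-latest'        # assumption: 1.0 vision-capable model
IMAGE = 'cat.jpg'                             # hypothetical local image file
PROMPT = 'Describe the scene in this image.'  # hypothetical prompt


def image_part(path):
    """Read a local image into an inline-data part: MIME type plus raw bytes."""
    mime, _ = mimetypes.guess_type(path)
    if mime is None or not mime.startswith('image/'):
        raise ValueError(f'not a recognized image file: {path}')
    return {'mime_type': mime, 'data': pathlib.Path(path).read_bytes()}


def main():
    # Imported here so image_part() can be tried without the SDK installed.
    import google.generativeai as genai
    genai.configure(api_key=os.environ['GOOGLE_API_KEY'])  # assumed env var name
    model = genai.GenerativeModel(MODEL)
    # A multimodal request is simply a list mixing text and image parts.
    response = model.generate_content([PROMPT, image_part(IMAGE)])
    print(response.text)


if __name__ == '__main__':
    if os.environ.get('GOOGLE_API_KEY'):
        main()
    else:
        print('Set GOOGLE_API_KEY to call the Gemini API.')
```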

The final update is to take the previous example and change it to access an image online rather than requiring it to be available on the local filesystem. For this, we'll use one of Google's stock images.
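Here is one way to sketch the online variant, again assuming the google-generativeai package, a GOOGLE_API_KEY environment variable, and the gemini-1.0-pro-vision-latest model name. The URL below is a hypothetical placeholder (the specific stock image isn't named here), and fetch_image_part() is a hypothetical helper that downloads the bytes with the standard library and takes the MIME type from the response's Content-Type header.

```python
import os
import urllib.request

MODEL = 'gemini-1.0-pro-vision-latest'        # assumption: 1.0 vision-capable model
# Hypothetical placeholder: substitute the stock image URL you want to analyze.
IMAGE_URL = 'https://example.com/stock-image.jpg'
PROMPT = 'Describe the scene in this image.'  # hypothetical prompt


def fetch_image_part(url, opener=urllib.request.urlopen):
    """Download an image and wrap it as an inline-data part (no local file)."""
    with opener(url) as resp:
        mime = resp.headers.get_content_type()  # parsed from Content-Type
        data = resp.read()
    if not mime.startswith('image/'):
        raise ValueError(f'{url} returned {mime}, not an image')
    return {'mime_type': mime, 'data': data}


def main():
    # Imported here so fetch_image_part() can be tried without the SDK installed.
    import google.generativeai as genai
    genai.configure(api_key=os.environ['GOOGLE_API_KEY'])  # assumed env var name
    model = genai.GenerativeModel(MODEL)
    response = model.generate_content([PROMPT, fetch_image_part(IMAGE_URL)])
    print(response.text)


if __name__ == '__main__':
    if os.environ.get('GOOGLE_API_KEY'):
        main()
    else:
        print('Set GOOGLE_API_KEY to call the Gemini API.')
```

Only the image source changes; the request is still a [prompt, image] list. The injectable opener parameter is a design choice that lets the download step be exercised without network access.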

Summary

Developers are eager to jump into the world of AI/ML, especially GenAI & LLMs, and accessing Google's Gemini models via API is part of that picture. The previous post in the series got your foot in the door, presenting more digestible, user-friendly "Hello World!" samples to help developers get started.

This post presents possible next steps, providing "102" samples that enhance the original script, furthering your exploration of Gemini API features but doing so without overburdening you with large swaths of code.

The Gemini API offers more advanced features that we didn't cover here; they merit separate posts of their own.

The next post in the series focuses on Gemini's responses and explores the differences between the 1.0 and 1.5 models' outputs across a variety of queries, so stay tuned for that.