Implementation of ReAct Agent using LlamaIndex and Gemini

In the past two to three years, we've witnessed remarkable progress in AI, mainly in large language models, diffusion models, multimodal models, and so on. One of my favorite areas has been agentic workflows. Early this year, Andrew Ng, co-founder of Coursera and a pioneer in deep learning, tweeted that “Agentic workflows will drive massive AI progress this year”. Since then, we’ve seen rapid development in the field of agents, with many people building autonomous agents, multi-agent architectures, and so on.

Understanding the ReAct Agent

In this article, we’ll dive deep into the implementation of a ReAct Agent, a powerful approach in agentic workflows. We’ll explore what ReAct prompting is, why it’s useful, and how to implement it using LlamaIndex and the Gemini LLM.

ReAct (short for Reasoning and Acting) is a prompting technique that lets large language models (LLMs) interleave reasoning with actions. The model breaks a complex task down into a series of thoughts, actions, and observations.

Structure of a ReAct Prompt

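ReAct prompting follows a three-step process (Thought, Action, Observation) that runs in a loop until a satisfactory result is achieved or a maximum number of iterations is reached. First, the model reasons about what to do next. Then it performs an action, such as calling a tool. Finally, it observes the result before reasoning again.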
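To make the loop concrete, here is a minimal, library-free sketch in Python. The `policy` function stands in for the LLM; in the demo, a hypothetical scripted stand-in plays that role. `tools` maps tool names to plain functions. All names here (`Step`, `react_loop`, `scripted_policy`) are illustrative and not part of any library.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class Step:
    """One model turn: a thought plus either a tool call or a final answer."""
    thought: str
    action: Optional[str] = None   # tool name, or None when answering
    action_input: str = ""
    answer: Optional[str] = None


def react_loop(
    question: str,
    policy: Callable[[str, List[str]], Step],
    tools: Dict[str, Callable[[str], str]],
    max_iterations: int = 5,
) -> Optional[str]:
    """Run Thought -> Action -> Observation cycles until an answer or the step limit."""
    transcript: List[str] = []
    for _ in range(max_iterations):
        step = policy(question, transcript)          # Thought (and chosen action)
        transcript.append(f"Thought: {step.thought}")
        if step.answer is not None:                  # the model decided it can answer
            transcript.append(f"Answer: {step.answer}")
            return step.answer
        observation = tools[step.action](step.action_input)      # Action
        transcript.append(f"Action: {step.action}({step.action_input!r})")
        transcript.append(f"Observation: {observation}")          # fed back next turn
    return None  # no answer within max_iterations


def scripted_policy(question: str, transcript: List[str]) -> Step:
    """Hypothetical stand-in for an LLM: search first, then answer from the last observation."""
    observations = [line for line in transcript if line.startswith("Observation: ")]
    if not observations:
        return Step(thought="I should look this up.", action="search", action_input=question)
    return Step(thought="I can answer now.", answer=observations[-1][len("Observation: "):])


# Toy tool that always returns the same text, in place of a real search engine.
tools = {"search": lambda q: "Paris is the capital of France."}
print(react_loop("What is the capital of France?", scripted_policy, tools))
```

In a real agent, the policy is an LLM call that sees the growing transcript. The `max_iterations` cap is what stops the loop when the model never reaches an answer.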

Real-Time Example

Let’s look at an example of how a ReAct Agent might process a query that needs up-to-date information, in this case about a recent sports event. This example will demonstrate the agent’s thought process, actions, and observations.

User Query: “Who was the man of the series in the recent India vs England Test series, and what were their key performances?”
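Each step of such a trace is plain text that the framework has to parse before it can run a tool. The sketch below assumes a Thought / Action / Action Input layout with JSON arguments, similar to the format LlamaIndex’s ReAct prompt asks the model to follow. The regex and the `parse_react_step` helper are illustrative. The sample text is a hypothetical first step for the query above, not real agent output.

```python
import json
import re
from typing import Any, Dict, Optional

# Assumed layout: "Thought: ...", then "Action: <tool>", then "Action Input: {json}".
STEP_PATTERN = re.compile(
    r"Thought:\s*(?P<thought>.*?)\s*"
    r"Action:\s*(?P<action>\S+)\s*"
    r"Action Input:\s*(?P<input>\{.*\})",
    re.DOTALL,
)


def parse_react_step(text: str) -> Optional[Dict[str, Any]]:
    """Return the thought, tool name and JSON tool arguments, or None if no tool call."""
    match = STEP_PATTERN.search(text)
    if match is None:
        return None
    return {
        "thought": match.group("thought"),
        "action": match.group("action"),
        "action_input": json.loads(match.group("input")),
    }


# Hypothetical first step for the cricket query (before any search has run).
sample = (
    "Thought: I need up-to-date information about the recent India vs England "
    "Test series, so I should search for it.\n"
    "Action: search\n"
    'Action Input: {"query": "India vs England Test series man of the series"}'
)
step = parse_react_step(sample)
print(step["action"], step["action_input"])
```

The agent would then call the `search` tool with these arguments. The result comes back as an Observation, which is appended to the prompt before the next Thought.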

Advantages of ReAct Agent

A typical approach to obtaining results from LLMs is to write a well-structured prompt. However, it’s important to remember that LLMs do not reliably reason or plan on their own. Various methods have been tried to give LLMs these abilities, but many have fallen short. Techniques like Chain of Thought (CoT), Tree of Thoughts, and Self-Consistency with CoT have shown promise but did not fully achieve robust reasoning. Then came ReAct, which, to some extent, succeeded in producing logical, step-by-step research plans that made more sense than those of previous methods.

By breaking complex tasks down into a series of thoughts, actions, and observations, ReAct agents can tackle intricate problems with a level of transparency and adaptability that was previously difficult to achieve. Because every step is visible, developers can follow the agent’s decision-making process, which makes it easier to debug, refine, and optimize LLM responses.

Applications of ReAct Agents

Moreover, the iterative nature of ReAct prompting lets agents handle uncertainty. As the agent goes through multiple cycles of thinking, acting, and observing, it can adjust its approach based on new information, much like a human would when faced with a complex task. By grounding its decisions in concrete actions and observations, a ReAct agent can provide more reliable and contextually appropriate responses, which reduces the risk of hallucination.

Code Implementation with LlamaIndex and Gemini

In our code implementation, we will look at querying and analyzing real-time sports data. Now, let’s get into the exciting part: implementing a ReAct Agent using LlamaIndex. The implementation is surprisingly straightforward and takes just a few lines of code.

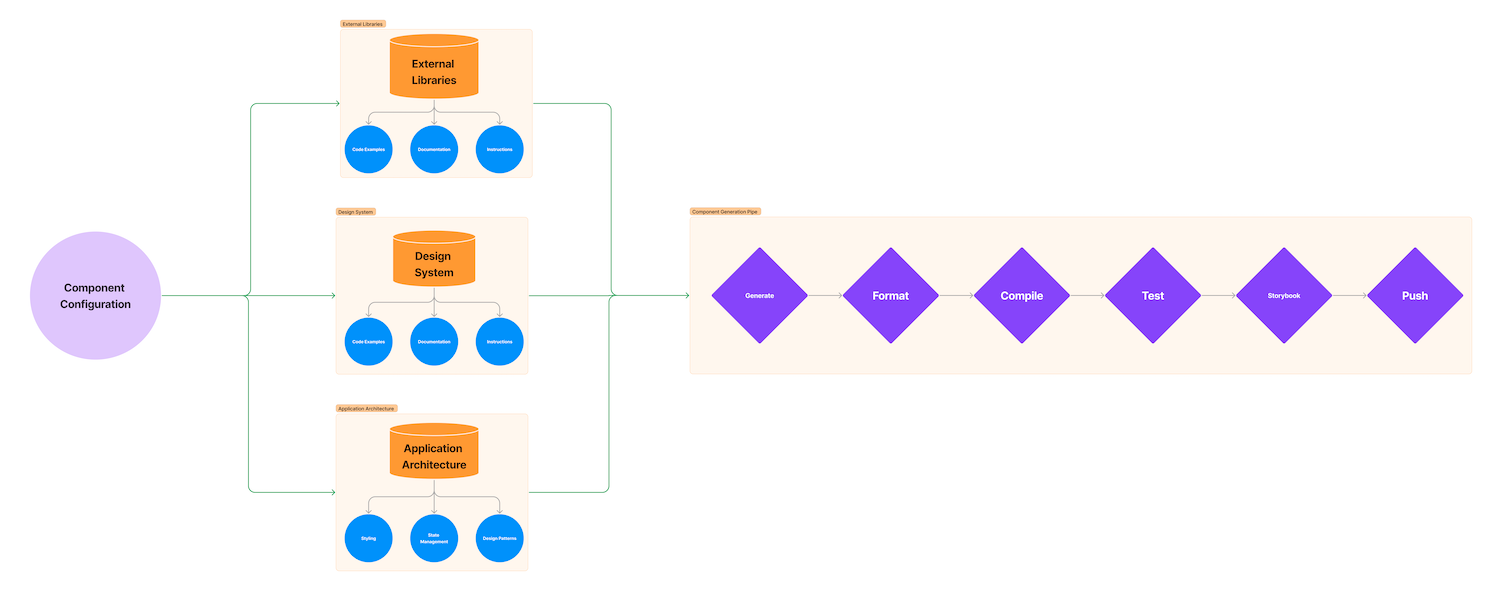

Before we proceed with the code implementation, let’s install a few necessary libraries, including LlamaIndex, a framework for connecting large language models to your own data. For our action tool, we’ll use DuckDuckGo Search, and Gemini will be the LLM we integrate into the code.

First, we need to import the necessary components. Since the ReAct agent needs to interact with external tools to fetch data, we use FunctionTool, which is defined in LlamaIndex’s core tools module (llama_index.core.tools). The logic is straightforward: whenever the agent needs real-world data, it calls a Python function that retrieves the required information. This is where DuckDuckGo comes into play, fetching the relevant context for the agent.
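The idea behind a function tool can be sketched without any framework. You wrap a plain Python function and record its name, docstring, and typed parameters so the agent can describe the tool to the LLM and call it. `SimpleFunctionTool` below is a hypothetical, minimal stand-in, and its `search` function returns placeholder text instead of real results. LlamaIndex’s own `FunctionTool.from_defaults(fn=...)` similarly takes the tool name from the function name and builds the description and input schema from the signature and docstring, but it does considerably more.

```python
import inspect
from dataclasses import dataclass
from typing import Any, Callable, Dict


@dataclass
class SimpleFunctionTool:
    """Hypothetical, minimal stand-in for a framework's function tool."""
    name: str
    description: str
    params: Dict[str, Any]   # parameter name -> annotated type
    fn: Callable[..., Any]

    @classmethod
    def from_function(cls, fn: Callable[..., Any]) -> "SimpleFunctionTool":
        # Read the typed parameters and docstring straight off the function.
        sig = inspect.signature(fn)
        params = {name: p.annotation for name, p in sig.parameters.items()}
        return cls(fn.__name__, inspect.getdoc(fn) or "", params, fn)

    def __call__(self, **kwargs: Any) -> Any:
        # When the agent picks this tool, it triggers the wrapped Python function.
        return self.fn(**kwargs)


def search(query: str) -> str:
    """Search the web for the query and return the result text."""
    return f"results for {query}"  # placeholder: a real tool would call a search API


tool = SimpleFunctionTool.from_function(search)
print(tool.name, tool.params, tool.description)
print(tool(query="India vs England Test series"))
```

This is also why the type hints matter. Without `query: str`, the tool could not tell the LLM what kind of input it expects.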

In LlamaIndex, OpenAI is the default LLM. To use Gemini instead, we set it on the global Settings object. You will need a Gemini API key, which you can get from Google AI Studio: https://aistudio.google.com/
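A minimal sketch of switching the default LLM is shown below. It assumes the `llama-index-llms-gemini` integration package, an API key stored in the `GOOGLE_API_KEY` environment variable, and the model name `models/gemini-1.5-flash`; all three are assumptions to adjust for your setup. The helper names are hypothetical.

```python
import os


def require_google_api_key(env_var: str = "GOOGLE_API_KEY") -> str:
    """Return the Gemini API key from the environment, failing early if it is absent."""
    key = os.environ.get(env_var)
    if not key:
        raise RuntimeError(f"Set {env_var} to a key from https://aistudio.google.com/")
    return key


def use_gemini_as_default_llm(model: str = "models/gemini-1.5-flash") -> None:
    """Make Gemini the global default LLM instead of OpenAI (model name is an assumption)."""
    # Imported inside the function so this file still loads without the packages installed.
    from llama_index.core import Settings
    from llama_index.llms.gemini import Gemini

    Settings.llm = Gemini(model=model, api_key=require_google_api_key())
```

Checking for the key up front gives a clear error message, instead of an authentication failure in the middle of the agent loop.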

Next, we define our search tool, DuckDuckGo Search. One important detail: when defining the function for a FunctionTool, specify the data type of each input parameter with a type hint. For example, search(query: str) -> str declares that query is a string and that the function returns a string. Since DuckDuckGo returns search results with additional metadata, we extract only the body content from each result to streamline the response.
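A possible version of the search tool is sketched below. It assumes the `duckduckgo_search` package, whose `DDGS().text()` returns a list of dicts with `title`, `href`, and `body` keys, and LlamaIndex’s `FunctionTool.from_defaults`. The `max_results=5` limit and the helper names `extract_bodies` and `make_search_tool` are our own illustrative choices.

```python
from typing import Dict, List


def extract_bodies(results: List[Dict[str, str]]) -> str:
    """Keep only each result's 'body' snippet and drop titles, URLs and other metadata."""
    return "\n".join(r["body"] for r in results if r.get("body"))


def search(query: str) -> str:
    """Search DuckDuckGo for the query and return the result snippets as text."""
    # Imported here so extract_bodies can be used without the package installed.
    from duckduckgo_search import DDGS

    results = DDGS().text(query, max_results=5)  # max_results=5 is an arbitrary choice
    return extract_bodies(results)


def make_search_tool():
    """Wrap `search` as a LlamaIndex tool; its type hints describe the tool's input."""
    from llama_index.core.tools import FunctionTool

    return FunctionTool.from_defaults(fn=search)
```

Keeping the body-extraction step in its own function makes it easy to test without network access.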

With the major components in place, we can now define the ReAct agent, using the ReActAgent class directly from LlamaIndex core. We set verbose=True to see what’s happening behind the scenes. We also set allow_parallel_tool_calls=True. In LlamaIndex, this flag is meant to let the LLM request more than one tool call in a single step. Whether to call a tool at all is still decided by the agent’s own reasoning.
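A minimal sketch of the agent construction is shown below. It assumes the llama-index 0.10/0.11-style `ReActAgent.from_tools` API, which provides `agent.chat()`. `build_react_agent` is a hypothetical helper that takes the tools and the LLM as arguments, so it works with any list of LlamaIndex tools.

```python
from typing import Any, Sequence


def build_react_agent(tools: Sequence[Any], llm: Any, max_iterations: int = 10) -> Any:
    """Create a LlamaIndex ReAct agent that prints its Thought/Action/Observation steps."""
    # Assumes the pre-workflow LlamaIndex API (0.10/0.11) that exposes agent.chat().
    from llama_index.core.agent import ReActAgent

    return ReActAgent.from_tools(
        list(tools),
        llm=llm,
        verbose=True,                   # log each reasoning step to stdout
        max_iterations=max_iterations,  # stop the loop if no answer is reached
    )
```

Suppose `search_tool` is a FunctionTool wrapping the search function described above and `llm` is a Gemini instance (both are hypothetical variable names). You could then run `agent = build_react_agent([search_tool], llm)` followed by `print(agent.chat("Who was the man of the series in the recent India vs England Test series?"))`. Newer LlamaIndex releases are moving to a workflow-based agent API, so check which one your installed version provides.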

That’s it! We’ve created our ReAct Agent. Now we can use it to answer queries by calling the agent.chat method. ReAct Agents represent a significant step forward in the field of AI and agentic workflows. By implementing a ReAct Agent using LlamaIndex, we’ve created a powerful tool that can reason and act its way through real-time user queries.

By grounding responses in concrete actions and observations, ReAct Agents can reduce hallucinations. Instead of generating unsupported or inaccurate information, the agent performs actions (like searching for information) to check its reasoning and adjusts its response based on real-world data.

You can also implement a ReAct agent using LangChain, and it is just as straightforward. You first define the tools the agent can use (such as search functions) and the LLM, and then create the agent from them. The agent then operates in an iterative loop of reasoning, acting, and observing until it reaches a satisfactory answer.
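The same steps in LangChain can be sketched as follows. This assumes the classic `langchain.agents` API (`create_react_agent` plus `AgentExecutor`), the `langchain-community` DuckDuckGo tool, and the public `hwchase17/react` prompt on LangChain Hub; pulling that prompt needs network access. `build_langchain_react_agent` is a hypothetical helper. `llm` can be any LangChain chat model; for Gemini, one option is `ChatGoogleGenerativeAI` from `langchain-google-genai`.

```python
from typing import Any


def build_langchain_react_agent(llm: Any) -> Any:
    """Sketch of a ReAct agent in LangChain: tools + LLM + ReAct prompt -> executor."""
    # Imported lazily; assumes langchain, langchain-community and duckduckgo-search.
    from langchain import hub
    from langchain.agents import AgentExecutor, create_react_agent
    from langchain_community.tools import DuckDuckGoSearchRun

    tools = [DuckDuckGoSearchRun()]                  # 1. the tools the agent may call
    prompt = hub.pull("hwchase17/react")             # 2. a published ReAct prompt template
    agent = create_react_agent(llm, tools, prompt)   # 3. the LLM-driven reasoning policy
    # 4. the executor runs the Thought/Action/Observation loop and calls the tools
    return AgentExecutor(agent=agent, tools=tools, verbose=True, max_iterations=10)
```

The resulting executor is invoked with a dict, for example `build_langchain_react_agent(llm).invoke({"input": "Who was the man of the series in the recent India vs England Test series?"})`.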

ReAct agents are used in complex problem-solving settings such as customer support, research analysis, autonomous systems, and educational tools.