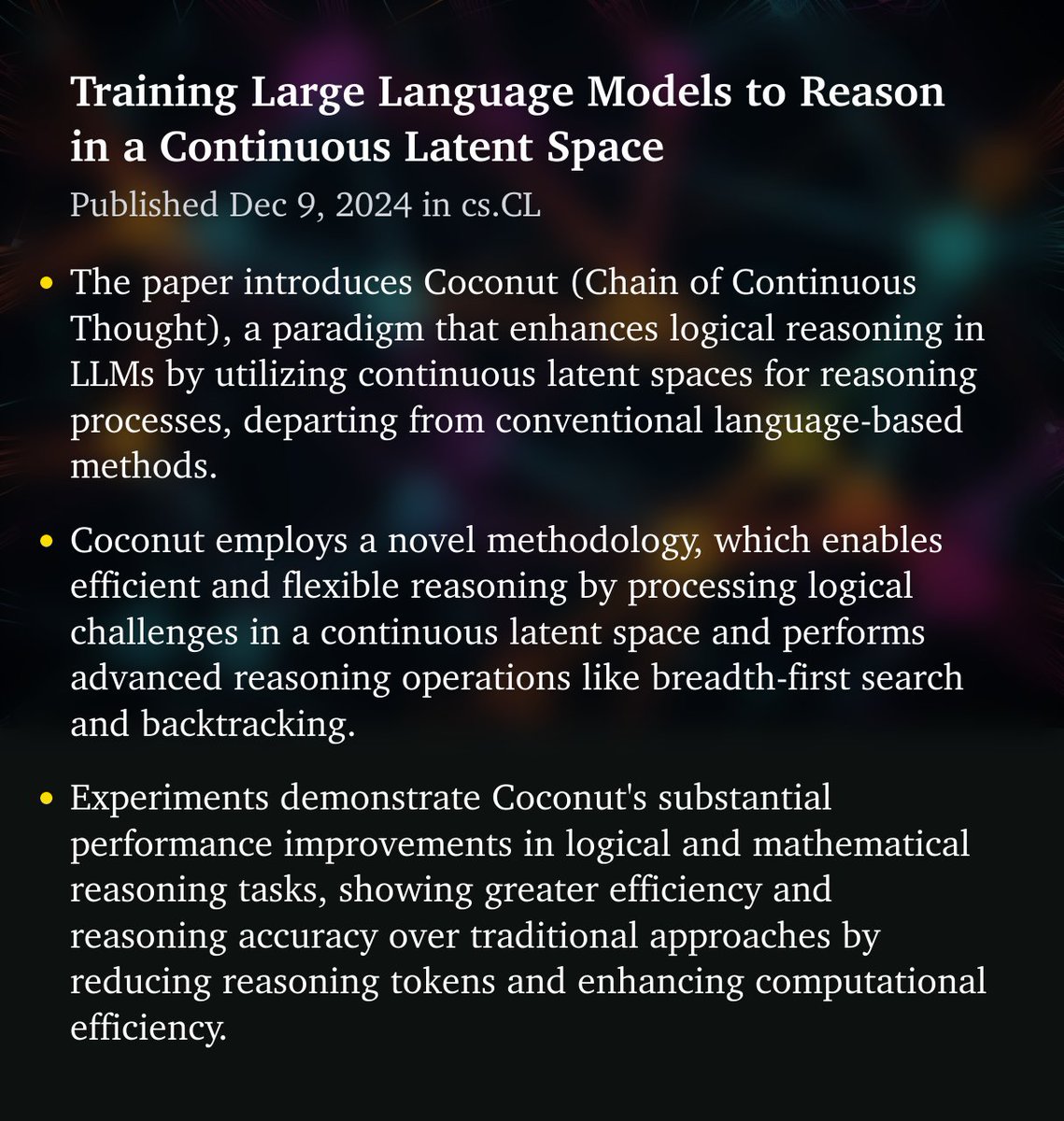

TLDR: Meta AI's paper introduces a new method called Chain of Continuous Thought (Coconut) for training large language models (LLMs) to reason in a continuous latent space. This approach alternates between language and latent thought modes, enhancing reasoning capabilities. The Coconut method is shown to be more efficient and ...

Reasoning with Chain of Continuous Thought by Meta AI

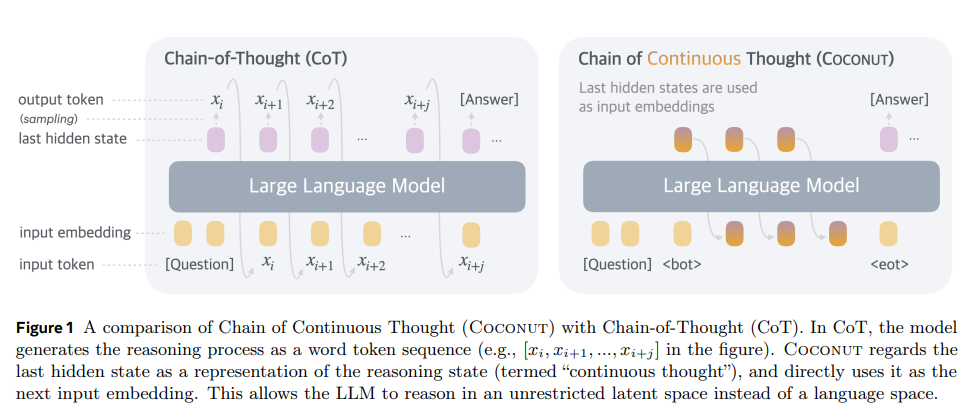

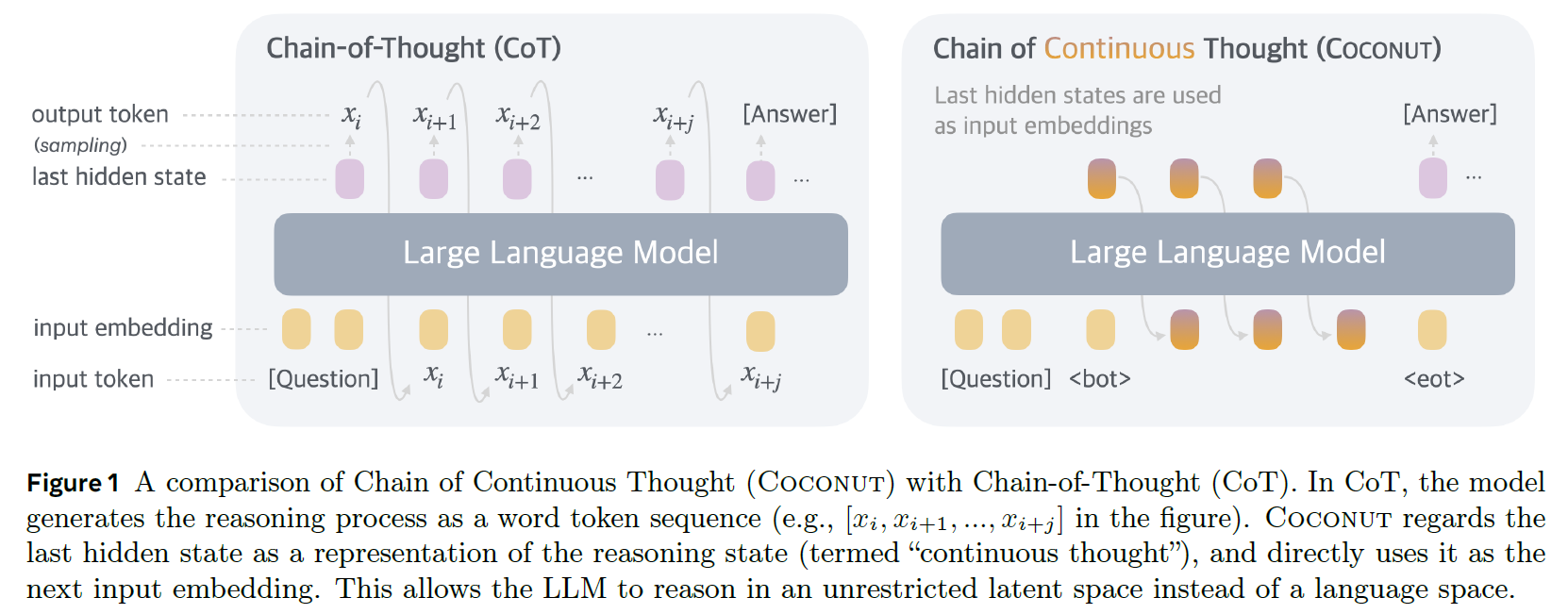

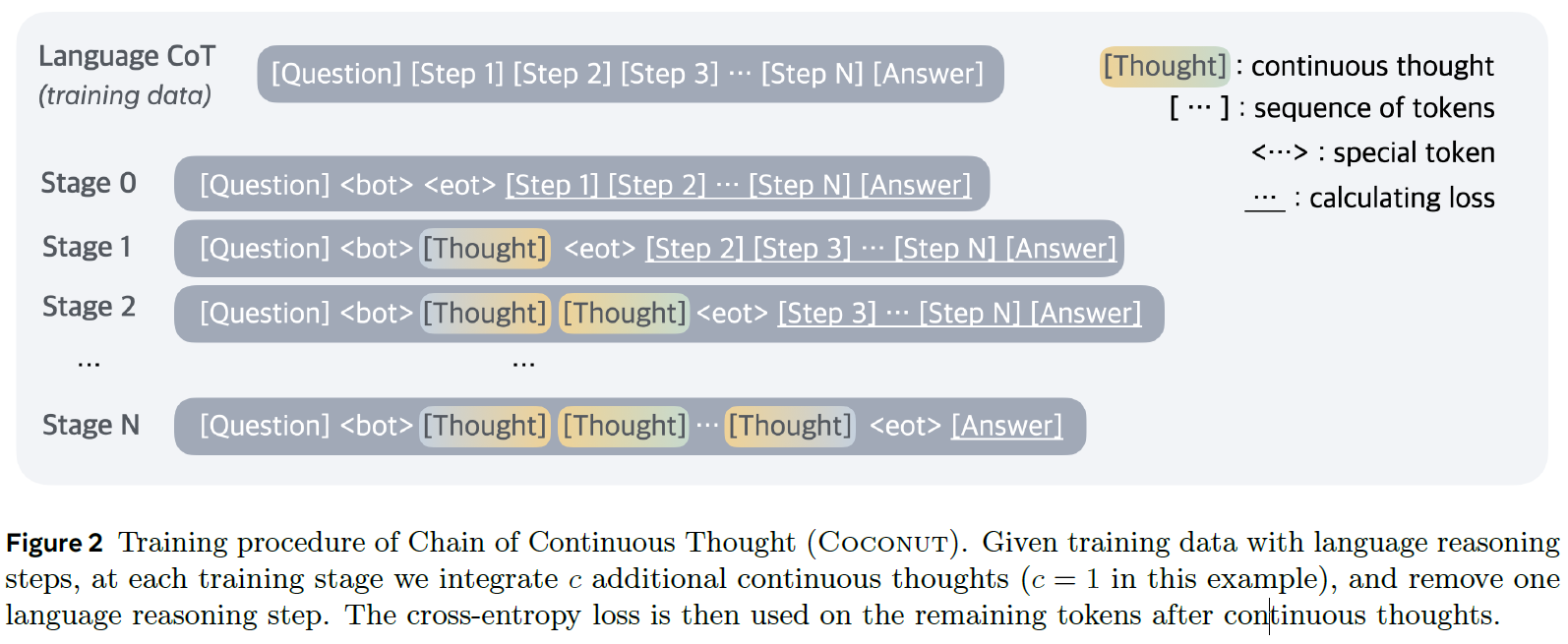

Meta AI has developed Chain of Continuous Thought (Coconut), a method for training large language models (LLMs) to reason in a continuous latent space. The model alternates between a language mode, in which it reads and emits ordinary tokens, and a latent thought mode, in which its last hidden state is fed back as the next input embedding instead of being decoded into a word token. The authors report that this alternation improves the models' reasoning capabilities.
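To make the alternation between the two modes concrete, here is a minimal sketch in plain Python. It is not Meta AI's implementation: the tiny recurrent `step` function stands in for a full LLM forward pass, and the random weights, the four-word vocabulary, the tied output projection in `decode`, and the names `generate`, `BOT`, and `EOT` are all assumptions made for illustration. The only thing it tries to show is the control flow: in latent mode the hidden state is fed back as the next input, and in language mode a token is decoded and its embedding is fed back.

```python
import math
import random

HIDDEN = 4                                # toy hidden/embedding width (assumption)
VOCAB = ["<bot>", "<eot>", "yes", "no"]   # hypothetical toy vocabulary
BOT, EOT = 0, 1                           # marker tokens that open/close latent mode

rng = random.Random(0)                    # fixed seed so the toy weights are reproducible


def rand_matrix(rows, cols):
    return [[rng.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]


EMBED = rand_matrix(len(VOCAB), HIDDEN)   # token id -> input embedding
W_IN = rand_matrix(HIDDEN, HIDDEN)        # weights applied to the current input
W_CTX = rand_matrix(HIDDEN, HIDDEN)       # weights applied to the carried context


def matvec(matrix, vector):
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def step(hidden, x):
    """Toy stand-in for one forward pass: returns the new last hidden state."""
    a = matvec(W_IN, x)
    b = matvec(W_CTX, hidden)
    return [math.tanh(p + q) for p, q in zip(a, b)]


def decode(hidden):
    """Language mode only: score every vocabulary entry and pick the best token id."""
    logits = matvec(EMBED, hidden)        # tied embeddings, an assumption of this sketch
    return max(range(len(VOCAB)), key=lambda i: logits[i])


def generate(prompt_ids, n_latent, n_language):
    hidden = [0.0] * HIDDEN

    # Language mode: consume the prompt, then the marker that opens latent mode.
    for tok in prompt_ids + [BOT]:
        hidden = step(hidden, EMBED[tok])

    # Latent mode: no token is decoded; the hidden state itself is the next input.
    thoughts = []
    for _ in range(n_latent):
        hidden = step(hidden, hidden)
        thoughts.append(hidden)

    # Back to language mode: close latent mode, then decode tokens as usual.
    hidden = step(hidden, EMBED[EOT])
    tokens = []
    for _ in range(n_language):
        tok = decode(hidden)
        tokens.append(tok)
        hidden = step(hidden, EMBED[tok])
    return thoughts, tokens


if __name__ == "__main__":
    thoughts, tokens = generate([VOCAB.index("yes")], n_latent=3, n_language=2)
    print(len(thoughts), "continuous thoughts;", "decoded:", [VOCAB[t] for t in tokens])
```

Note that the latent loop never calls `decode`, so no words are produced for those steps; the intermediate reasoning lives only in the vectors collected in `thoughts`.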

The Efficiency of the Coconut Method

According to Meta AI, the Coconut method is more efficient than traditional approaches such as chain-of-thought (CoT) prompting, which spell out every reasoning step in words. Models trained with Coconut have also shown improved performance on reasoning tasks.

Additionally, because Coconut reasons in a continuous latent space, the model can move between processing language and reasoning abstractly without first turning each intermediate step into words, which helps LLMs handle complex reasoning scenarios.

Enhancing Reasoning Capabilities

One of the key advantages of Meta AI's Coconut method is that it enhances the reasoning capabilities of large language models. By reasoning through continuous thoughts, LLMs are better equipped to tackle complex problems that require both linguistic understanding and logical reasoning.

This approach could open up new applications for language models in domains such as natural language processing, machine translation, and sentiment analysis.

Furthermore, the Chain of Continuous Thought technique may pave the way for more sophisticated AI systems capable of nuanced reasoning and decision-making.