DeepSeek releases 'DeepSeek-R1,' a reasoning model on par with OpenAI o1

Chinese AI company DeepSeek has released its first-generation reasoning models, DeepSeek-R1-Zero and DeepSeek-R1, as open source under the MIT license. DeepSeek claims that they perform on par with OpenAI o1 on certain AI benchmarks.

DeepSeek-R1/DeepSeek_R1.pdf at main · deepseek-ai/DeepSeek-R1 · GitHub

DeepSeek-R1 is here!

Performance on par with OpenAI-o1

Fully open-source model & technical report

MIT licensed: Distill & commercialize freely!

Website & API are live now! Try DeepThink at https://t.co/v1TFy7LHNy today!

DeepSeek-R1-Zero and its successor, DeepSeek-R1, are both trained from DeepSeek-V3-Base, which uses a Mixture of Experts (MoE) architecture with 671 billion total parameters and a 128K context length.
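The article only names the MoE architecture without describing it. As a rough illustration of the general idea (not DeepSeek-V3's actual design, which is not detailed here), the sketch below has a gate score every expert for a token, evaluates only the `top_k` highest-scoring experts, and mixes their outputs with softmax weights. This is why an MoE model's total parameter count can far exceed the parameters used for any single token. The gate vectors, the toy `scale_expert` experts, and `top_k=2` are hypothetical.

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]


def softmax(logits: Sequence[float]) -> List[float]:
    # Subtract the max before exponentiating for numerical stability.
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(
    x: Vector,
    gate_weights: Sequence[Vector],
    experts: Sequence[Callable[[Vector], Vector]],
    top_k: int = 2,
) -> Vector:
    """Route one token vector x through its top_k highest-scoring experts."""
    # Router: one affinity score per expert (dot product of gate vector and token).
    scores = [sum(w * xi for w, xi in zip(g, x)) for g in gate_weights]
    # Keep only the top_k experts; the others are never evaluated.
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:top_k]
    # Normalize the gate weights over the chosen experts only.
    probs = softmax([scores[i] for i in chosen])
    out = [0.0] * len(x)
    for p, i in zip(probs, chosen):
        y = experts[i](x)
        out = [o + p * yi for o, yi in zip(out, y)]
    return out


def scale_expert(factor: float) -> Callable[[Vector], Vector]:
    # Hypothetical toy expert: multiplies every component by a constant.
    return lambda v: [factor * vi for vi in v]


if __name__ == "__main__":
    experts = [scale_expert(f) for f in (1.0, 2.0, 3.0, 4.0)]
    gates = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]
    # Only experts 0 and 1 score highest for this token, so only they run.
    print(moe_forward([1.0, 0.5], gates, experts, top_k=2))
```

Real MoE layers operate on batches of high-dimensional tensors with learned experts; the plain lists here only keep the routing logic visible.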

DeepSeek-R1-Zero was trained with reinforcement learning (RL) alone, skipping the supervised fine-tuning (SFT) step that is common in conventional LLM development. This approach lets the model spontaneously explore chains of thought for solving complex problems, and it developed abilities such as self-verification, reflection, and generating long chains of thought.
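Training with RL and no SFT requires a reward that can be computed automatically. DeepSeek's technical report describes rule-based rewards for answer accuracy and for output format, with the reasoning enclosed in `<think>` tags. The sketch below is a hypothetical simplification of that idea: the exact `<think>...</think><answer>...</answer>` template, the exact-match check, and the `format_weight` value are assumptions for illustration, not DeepSeek's code.

```python
import re

# Assumed output template: reasoning inside <think> tags, final answer inside <answer> tags.
TEMPLATE = re.compile(
    r"^\s*<think>(.+?)</think>\s*<answer>(.+?)</answer>\s*$", re.DOTALL
)


def format_reward(completion: str) -> float:
    """1.0 if the completion follows the think/answer template, else 0.0."""
    return 1.0 if TEMPLATE.match(completion) else 0.0


def accuracy_reward(completion: str, reference: str) -> float:
    """1.0 if the extracted final answer exactly matches the reference, else 0.0."""
    m = TEMPLATE.match(completion)
    if m is None:
        return 0.0
    return 1.0 if m.group(2).strip() == reference.strip() else 0.0


def total_reward(completion: str, reference: str, format_weight: float = 0.5) -> float:
    # Hypothetical weighting: correctness dominates, correct formatting adds a bonus.
    return accuracy_reward(completion, reference) + format_weight * format_reward(completion)


if __name__ == "__main__":
    sample = "<think>2 + 2 equals 4.</think><answer>4</answer>"
    print(total_reward(sample, "4"))
```

Because both checks are plain rules rather than a learned reward model, they are cheap to evaluate on every sampled completion during RL.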

However, DeepSeek reports that this approach had problems: the model was prone to endless repetition, its output was hard to read, and it tended to mix multiple languages. To address these issues, the successor DeepSeek-R1 combines two SFT stages and two RL stages. The first SFT stage uses initial data called cold-start data, and the subsequent RL stages discover improved reasoning patterns and align the model with human preferences.
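The stages alternate rather than running all SFT first. As DeepSeek's technical report describes it, the order is: cold-start SFT, reasoning-oriented RL, a second SFT stage on data collected from the RL checkpoint, and a final RL stage covering all scenarios. The sketch below simply encodes that order as data; `Stage` and the stub `run_pipeline` are hypothetical helpers that record the sequence instead of training anything.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Stage:
    kind: str     # "SFT" or "RL"
    purpose: str  # paraphrased goal of the stage


# Stage order as summarized from the DeepSeek-R1 report; descriptions are paraphrased.
R1_PIPELINE: List[Stage] = [
    Stage("SFT", "fine-tune the base model on a small set of cold-start data"),
    Stage("RL", "reasoning-oriented RL to discover improved reasoning patterns"),
    Stage("SFT", "fine-tune on new data collected from the RL checkpoint"),
    Stage("RL", "RL across all scenarios to align with human preferences"),
]


def run_pipeline(model: str, stages: List[Stage]) -> str:
    """Apply each stage in order; the 'model' is just a string recording the history."""
    for stage in stages:
        # A real stage would update the weights; this stub only wraps the name.
        model = f"{stage.kind}({model})"
    return model


if __name__ == "__main__":
    print(run_pipeline("DeepSeek-V3-Base", R1_PIPELINE))
```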

DeepSeek compared the benchmark results of DeepSeek-R1 with those of Claude-3.5-Sonnet-1022, GPT-4o, DeepSeek-V3, OpenAI o1-mini, and OpenAI o1-1217. On English tasks, DeepSeek-R1 scored 90.8% on MMLU, 92.9% on MMLU-Redux, and 84.0% on MMLU-Pro, outperforming GPT-4o and Claude 3.5 Sonnet on all three.

DeepSeek-R1 performed particularly strongly in mathematics, scoring 97.3% on MATH-500 and 79.8% on AIME 2024, results DeepSeek claims are among the best in the field. In coding, DeepSeek-R1 reached the 96.3rd percentile on Codeforces with a rating of 2029, close to OpenAI-o1-1217, and scored 65.9% on LiveCodeBench. DeepSeek-R1 also performed well on Chinese-language tasks, scoring 92.8% on CLUEWSC and 91.8% on C-Eval.

Bonus: Open-Source Distilled Models!

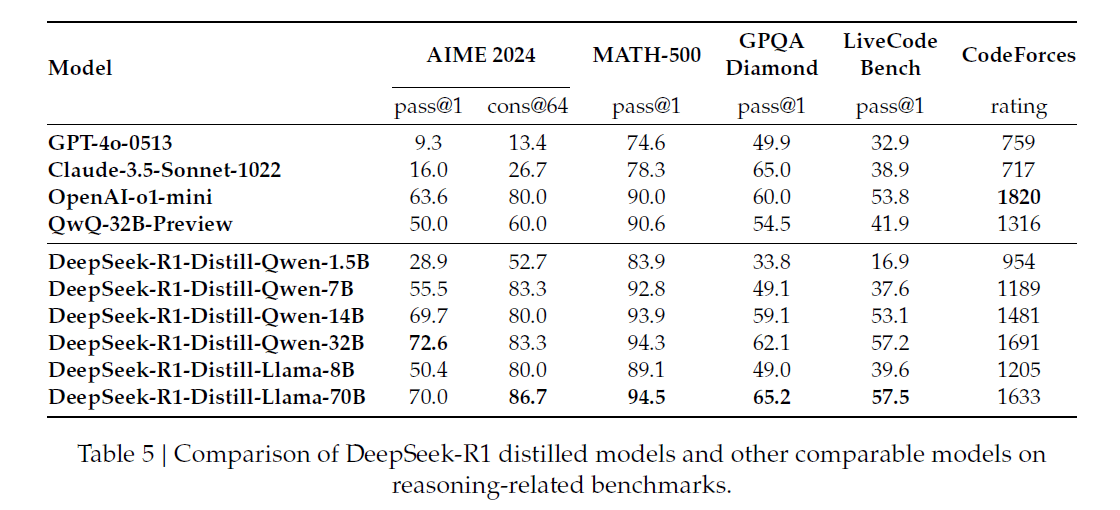

Distilled from DeepSeek-R1, 6 small models fully open-sourced

32B & 70B models on par with OpenAI-o1-mini

Empowering the open-source community

Pushing the boundaries of open AI!

DeepSeek claims that DeepSeek-R1 performs as well as or better than OpenAI-o1-1217 across a number of benchmarks, particularly in mathematics and coding, and that it also holds advantages over GPT-4o and Claude 3.5 Sonnet in many areas.

However, DeepSeek-R1 is an AI system developed in China. The IT news site TechCrunch points out a drawback of it being a Chinese model: 'DeepSeek-R1 is subject to benchmarking by China's internet regulators to ensure that its answers embody core socialist values. For example, R1 will not answer questions about the Tiananmen Square incident or Taiwan's autonomy.'

DeepSeek-R1, DeepSeek-R1-Zero, and the models distilled into Llama and Qwen using DeepSeek-R1 as the teacher are released under the MIT license. As long as the copyright notice and the license text are included, DeepSeek-R1 may be used commercially free of charge, modified, and distilled to train other LLMs. The published models are also available on DeepSeek's official website, and an OpenAI-compatible API is provided at platform.deepseek.com.
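Because the API is described as OpenAI-compatible, a request should follow the familiar chat-completions shape. The sketch below uses only the Python standard library. The endpoint `https://api.deepseek.com/chat/completions`, the model name `deepseek-reasoner`, and the `DEEPSEEK_API_KEY` environment variable are assumptions to verify against the documentation at platform.deepseek.com; they are not given in this article.

```python
import json
import os
import urllib.request

# Assumed endpoint and model name; check DeepSeek's API documentation before use.
API_URL = "https://api.deepseek.com/chat/completions"
MODEL = "deepseek-reasoner"


def build_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request (nothing is sent yet)."""
    body = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def ask(prompt: str, api_key: str) -> str:
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(build_request(prompt, api_key)) as resp:
        data = json.load(resp)
    # OpenAI-compatible responses put the reply in choices[0].message.content.
    return data["choices"][0]["message"]["content"]


if __name__ == "__main__":
    key = os.environ.get("DEEPSEEK_API_KEY")  # hypothetical variable name
    if key:
        print(ask("How many prime numbers are there below 20?", key))
    else:
        print("Set DEEPSEEK_API_KEY to send a real request.")
```

Separating `build_request` from `ask` keeps the payload inspectable without a network call; the official `openai` client library pointed at DeepSeek's base URL is a common alternative.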