How Do LLMs Store and Use Knowledge? This AI Paper Introduces...

Large language models (LLMs) understand and generate human-like text by drawing on the vast amount of knowledge stored in their parameters. This capability lets them perform complex reasoning tasks, adapt to a wide range of applications, and interact effectively with humans. Despite these accomplishments, researchers are still working out how these systems store and use knowledge, with the aim of making them more efficient and reliable.

Challenges with Large Language Models

A significant challenge with large language models is their tendency to produce inaccurate, biased, or hallucinated outputs. These issues are hard to fix because researchers have only a limited understanding of how the models organize and access knowledge. Without clear insight into how the different components of these architectures interact, diagnosing errors and optimizing performance remains a major obstacle. Existing studies often focus on individual elements rather than the broader relationships between them, which hinders improvements in factual accuracy and safe knowledge retrieval.

Introducing Knowledge Circuits

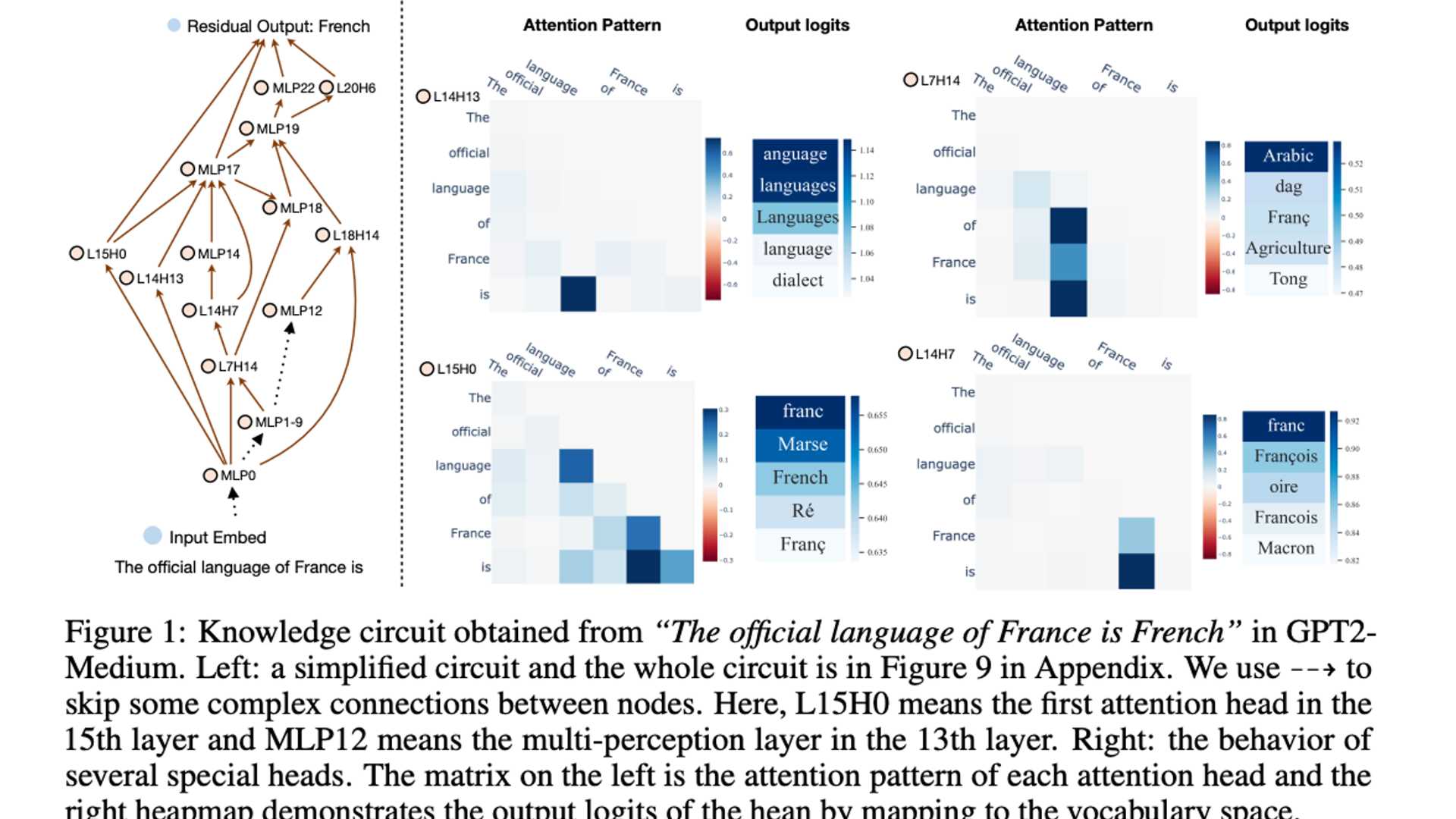

Researchers from Zhejiang University and the National University of Singapore have proposed a new concept called "knowledge circuits" to address these challenges. A knowledge circuit is an interconnected subgraph within a Transformer's computational graph that spans several kinds of components, such as MLPs, attention heads, and embeddings. By showing how these components work together to store, retrieve, and apply knowledge, the approach offers a holistic view of how LLMs function internally.

Analyzing Knowledge Circuits

To construct knowledge circuits, the researchers systematically analyzed a model's computational graph by ablating specific edges and observing how performance changed. An edge whose removal noticeably degrades the output is treated as critical and kept in the circuit, while edges with little effect are discarded. This method revealed which connections matter and how different components interact to produce accurate outputs. It also uncovered specialized roles for components such as "mover heads" and "relation heads", showing how these circuits aggregate and refine knowledge to improve predictive accuracy.
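The ablation procedure described above can be illustrated with a minimal sketch. This is not the paper's implementation: the four-node graph, the edge weights, the zero-ablation strategy, and the names `forward` and `find_circuit` are all assumptions made for illustration. Each edge is removed in turn, and it is pruned permanently only if the output score drops by less than a chosen threshold.

```python
from typing import Dict, List, Set, Tuple

Edge = Tuple[str, str]

# Hypothetical toy graph. Node names loosely mimic Transformer components;
# the weights are made up and do not come from any real model.
NODES: List[str] = ["embed", "attn_head", "mlp", "logit"]  # topological order
WEIGHTS: Dict[Edge, float] = {
    ("embed", "attn_head"): 1.0,
    ("embed", "mlp"): 0.01,
    ("attn_head", "mlp"): 1.0,
    ("attn_head", "logit"): 0.02,
    ("mlp", "logit"): 1.0,
    ("embed", "logit"): 0.01,
}


def forward(active: Set[Edge]) -> float:
    """Score of the correct answer using only the active edges.

    Each node sums the weighted values of its active incoming edges.
    An ablated edge contributes nothing (zero ablation, an assumption).
    """
    values: Dict[str, float] = {"embed": 1.0}
    for node in NODES[1:]:
        values[node] = sum(
            weight * values[src]
            for (src, dst), weight in WEIGHTS.items()
            if dst == node and (src, dst) in active
        )
    return values["logit"]


def find_circuit(threshold: float) -> Set[Edge]:
    """Greedily prune edges whose removal costs less than `threshold`."""
    active: Set[Edge] = set(WEIGHTS)
    for edge in list(WEIGHTS):
        baseline = forward(active)
        trial = active - {edge}
        if baseline - forward(trial) < threshold:
            active = trial  # edge is not critical, keep it ablated
    return active


circuit = find_circuit(threshold=0.05)
full_score = forward(set(WEIGHTS))
circuit_score = forward(circuit)
print(sorted(circuit))
print(f"kept {len(circuit)}/{len(WEIGHTS)} edges, "
      f"retained {circuit_score / full_score:.0%} of the full score")
```

In this toy setting the surviving edges form a single path from the embedding to the output. A real analysis would run the same loop over the edges of an actual Transformer and score each ablation on a dataset of factual prompts.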

Impact of Knowledge Circuits

Experiments showed that knowledge circuits could maintain a model's performance while using only a fraction of the full model. Some tasks, such as landmark-country relations, saw significant improvements, which suggests that focusing on essential circuits can enhance accuracy. Knowledge circuits also helped the researchers interpret complex model behaviors such as hallucinations and in-context learning.

Limitations of Existing Editing Methods

Existing knowledge-editing methods, such as ROME and layer fine-tuning techniques, have limitations: they often disrupt unrelated knowledge when adding new facts. These findings emphasize the need for more precise and robust editing mechanisms that avoid overfitting and keep the model's knowledge representation accurate.
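One common way to quantify this kind of side effect is a locality score: the share of unrelated prompts whose predictions stay the same after an edit. The sketch below is a hypothetical illustration, not the evaluation used in the paper. The function name `locality` and the example predictions are made up, and no real editing method is run.

```python
from typing import Dict, Iterable


def locality(
    before: Dict[str, str],
    after: Dict[str, str],
    unrelated_prompts: Iterable[str],
) -> float:
    """Fraction of unrelated prompts whose prediction is unchanged by an edit."""
    prompts = list(unrelated_prompts)
    if not prompts:
        raise ValueError("need at least one unrelated prompt")
    unchanged = sum(before[p] == after[p] for p in prompts)
    return unchanged / len(prompts)


# Made-up predictions before and after a counterfactual edit to the first prompt.
before_edit = {
    "The Eiffel Tower is located in": "France",
    "The Colosseum is located in": "Italy",
    "Big Ben is located in": "the United Kingdom",
}
after_edit = {
    "The Eiffel Tower is located in": "Italy",  # the intended edit
    "The Colosseum is located in": "Italy",     # unaffected
    "Big Ben is located in": "Italy",           # unintended side effect
}

unrelated = ["The Colosseum is located in", "Big Ben is located in"]
score = locality(before_edit, after_edit, unrelated)
print(f"locality: {score:.2f}")
```

A score near 1.0 would indicate that the edit left unrelated knowledge intact, while a lower score points to the kind of disruption described above.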

Conclusion

This research uses knowledge circuits to present a detailed perspective on how large language models operate. By shifting the focus from isolated components to interconnected structures, the approach offers a comprehensive framework for analyzing and improving Transformer-based models. The insights gained pave the way for better knowledge storage, editing practices, and model interpretability.

For more information, refer to the research paper and the GitHub page.