How Do LLMs Work?

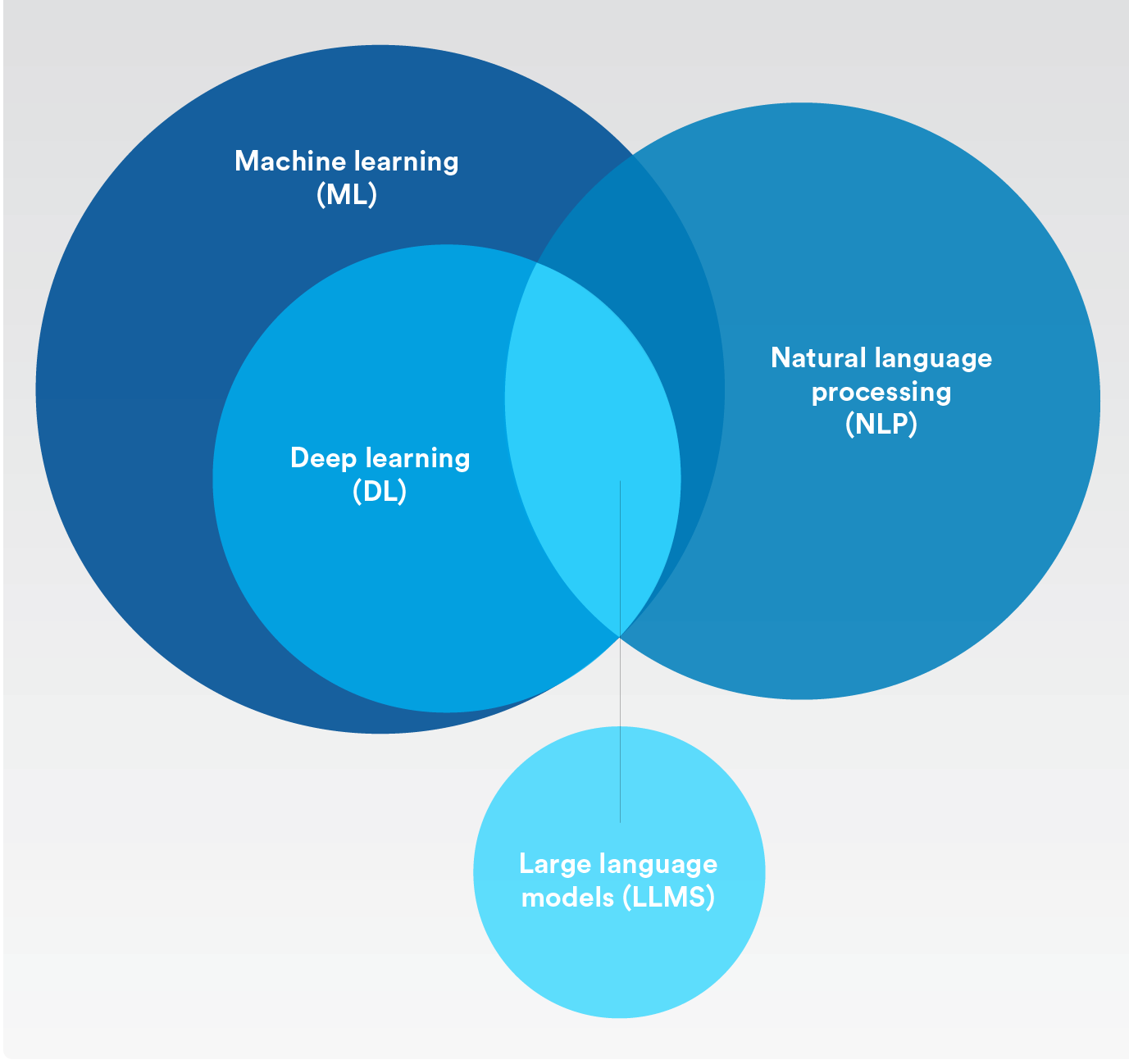

The field of Artificial Intelligence has advanced rapidly in recent years, and Large Language Models (LLMs) have played a central role. One such model is GPT-3 (Generative Pre-trained Transformer 3), created by OpenAI. GPT-3 is known for its capabilities in natural language processing tasks, and ChatGPT, a chatbot built on later models descended from GPT-3, has gained widespread recognition in the AI community.

Understanding LLMs

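BERT (Bidirectional Encoder Representations from Transformers) is another prominent language model. It was developed by Google and is widely used for natural language understanding tasks such as classification and question answering. Facebook AI Research introduced RoBERTa (Robustly Optimized BERT Pretraining Approach) as an improved version of BERT. RoBERTa keeps BERT's transformer architecture but changes the pretraining recipe (more data, longer training, dynamic masking, and no next-sentence prediction objective), which improves its results on downstream benchmarks.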

Another noteworthy model is BLOOM, an open-access multilingual LLM created by BigScience, a collaboration of many organizations and researchers. Models like these have changed the way AI assistants are built, simplifying tasks such as content writing and improving overall productivity.

Training and inference are the two fundamental phases of an LLM's life cycle. Before training, a vast amount of text is collected from sources such as books, articles, and websites. The breadth of this corpus is what lets the model learn accurate linguistic and contextual patterns. The text is then tokenized: split into smaller units (words, subwords, or characters), each of which is mapped to an integer ID that the model can process.
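To make tokenization concrete, here is a minimal sketch of a toy, character-level version of byte-pair encoding (BPE), the subword scheme used by GPT-family models (BERT uses a related scheme called WordPiece). It learns merge rules by repeatedly fusing the most frequent adjacent pair of symbols, then applies those merges to new words and maps the resulting tokens to integer IDs. The function names `merge_pair`, `learn_merges`, and `tokenize` and the tiny corpus are hypothetical; real tokenizers operate on bytes, handle whitespace and special tokens, and learn tens of thousands of merges.

```python
from collections import Counter


def merge_pair(symbols: tuple[str, ...], pair: tuple[str, str]) -> tuple[str, ...]:
    """Replace every adjacent occurrence of `pair` in `symbols` with one merged symbol."""
    out: list[str] = []
    i = 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            out.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            out.append(symbols[i])
            i += 1
    return tuple(out)


def learn_merges(corpus: list[str], num_merges: int) -> list[tuple[str, str]]:
    """Toy BPE trainer: repeatedly merge the most frequent adjacent symbol pair."""
    words = Counter(tuple(word) for word in corpus)  # start from single characters
    merges: list[tuple[str, str]] = []
    for _ in range(num_merges):
        # Count adjacent pairs across the corpus, weighted by word frequency.
        pair_counts: Counter = Counter()
        for symbols, freq in words.items():
            for pair in zip(symbols, symbols[1:]):
                pair_counts[pair] += freq
        if not pair_counts:
            break
        best = max(pair_counts, key=pair_counts.get)  # ties go to the pair counted first
        merges.append(best)
        # Re-segment every word with the new merge applied.
        merged: Counter = Counter()
        for symbols, freq in words.items():
            merged[merge_pair(symbols, best)] += freq
        words = merged
    return merges


def tokenize(word: str, merges: list[tuple[str, str]]) -> list[str]:
    """Apply the learned merges, in the order they were learned, to a new word."""
    symbols = tuple(word)
    for pair in merges:
        symbols = merge_pair(symbols, pair)
    return list(symbols)


merges = learn_merges(["low", "low", "lower", "lowest"], num_merges=2)
tokens = tokenize("lowest", merges)
vocab: dict[str, int] = {}
ids = [vocab.setdefault(tok, len(vocab)) for tok in tokens]  # assign each new token the next ID
print(merges)  # [('l', 'o'), ('lo', 'w')]
print(tokens, ids)  # ['low', 'e', 's', 't'] [0, 1, 2, 3]
```

Because the merges are learned from frequency, a common fragment like "low" becomes a single token, while rarer endings stay split into smaller pieces. An unseen word such as "slow" still tokenizes cleanly, as `['s', 'low']`.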

The Training Process

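During pre-training, LLMs learn from tokenized text without human-written labels, a setup usually called self-supervised learning. GPT-style models learn to predict the next token from the tokens before it, while BERT-style models learn to predict tokens that have been masked out. The transformer architecture forms the foundation of LLMs. Its self-attention mechanism lets each token weigh how relevant every other visible token is, which is what allows the model to generate contextually appropriate text.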
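The following is a minimal, pure-Python sketch of single-head scaled dot-product self-attention, softmax(QK^T / sqrt(d)) V, as described in the original transformer paper. It applies a causal mask, as GPT-style decoders do, so each position can only attend to itself and earlier positions. That restriction is what makes next-token prediction a meaningful training task; BERT-style encoders omit the mask. The helper names and the identity projection matrices are illustrative stand-ins for learned weights. Multiple heads, batching, and positional encodings are left out for brevity.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def matmul(a: list[list[float]], b: list[list[float]]) -> list[list[float]]:
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def causal_self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product attention with a causal mask.

    x is seq_len x d_model; w_q, w_k, w_v are d_model x d_head projection matrices.
    """
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_head = len(w_q[0])
    out = []
    for i in range(len(x)):
        # Causal mask: position i only scores positions 0..i, never future tokens.
        scores = [sum(a * b for a, b in zip(q[i], k[j])) / math.sqrt(d_head)
                  for j in range(i + 1)]
        weights = softmax(scores)
        # Each output row is a weighted average of the value vectors it attended to.
        out.append([sum(w * v[j][c] for j, w in enumerate(weights)) for c in range(d_head)])
    return out


identity = [[1.0, 0.0], [0.0, 1.0]]
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # three toy 2-d token embeddings
out = causal_self_attention(x, identity, identity, identity)
for row in out:
    print([round(val, 3) for val in row])
```

Because of the mask, changing the last token's embedding leaves the outputs for the first two positions unchanged. This is why a decoder can be trained to predict every next token in a sequence in a single pass.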

Enhancing Language Generation

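LLMs capture context and produce relevant responses by leveraging self-attention. At inference time, the model generates text one token at a time, and a decoding strategy picks each next token from the predicted probability distribution. Beam search is one such strategy. Rather than committing to the single most likely token at each step (greedy decoding), it keeps several of the most probable partial sequences and extends them in parallel, which can produce higher-probability, more coherent output. Sampling-based methods are another common choice. Through these steps, the model turns the language patterns it has learned into human-like text.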
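Here is a minimal sketch of beam search over a toy model. `toy_model` is a hypothetical stand-in for a real LLM: given the tokens generated so far, it returns a dictionary of next-token probabilities. The beam keeps the `beam_width` partial sequences with the highest summed log-probability at each step, and a sequence counts as finished once it emits `<eos>`. This is one simple variant. It does no length normalization, so it tends to favor shorter sequences, which production decoders often correct for.

```python
import math
from typing import Callable

# Hypothetical model interface: tokens so far -> {next_token: probability}.
NextTokenFn = Callable[[tuple[str, ...]], dict[str, float]]


def beam_search(next_token_probs: NextTokenFn, beam_width: int, max_len: int,
                eos: str = "<eos>") -> tuple[tuple[str, ...], float]:
    # Each entry is (tokens, cumulative log-probability).
    beams: list[tuple[tuple[str, ...], float]] = [((), 0.0)]
    finished: list[tuple[tuple[str, ...], float]] = []
    for _ in range(max_len):
        candidates = []
        for tokens, score in beams:
            for tok, p in next_token_probs(tokens).items():
                candidates.append((tokens + (tok,), score + math.log(p)))
        # Keep only the beam_width highest-scoring extensions.
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = []
        for seq, score in candidates[:beam_width]:
            if seq[-1] == eos:
                finished.append((seq, score))
            else:
                beams.append((seq, score))
        if not beams:
            break
    finished.extend(beams)  # sequences that reached max_len without emitting eos
    return max(finished, key=lambda c: c[1])


def toy_model(tokens: tuple[str, ...]) -> dict[str, float]:
    table = {
        (): {"A": 0.6, "B": 0.4},
        ("A",): {"x": 0.5, "y": 0.5},
        ("B",): {"z": 0.9, "w": 0.1},
    }
    return table.get(tokens, {"<eos>": 1.0})


print(beam_search(toy_model, beam_width=1, max_len=3)[0])  # ('A', 'x', '<eos>')
print(beam_search(toy_model, beam_width=2, max_len=3)[0])  # ('B', 'z', '<eos>')
```

With `beam_width=1` the search reduces to greedy decoding. Greedy decoding picks "A" first (0.6) and ends with total probability 0.6 × 0.5 = 0.30. A beam of width 2 keeps "B" alive long enough to find "B z", which has the higher total probability of 0.4 × 0.9 = 0.36.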

Overall, LLMs play a crucial role in natural language understanding, generation, and translation, offering a wide range of applications in the AI domain.
