Exploring Laws of Robotics: A Synthesis of Constitutional AI and AI Ethics

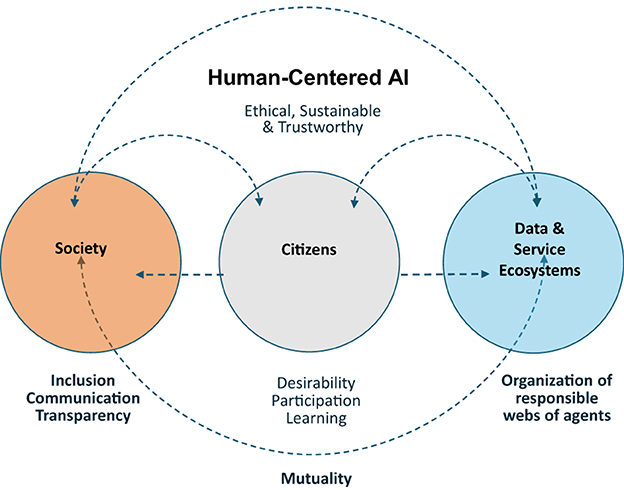

As ever more powerful AI systems come into use, policymakers and developers need effective regulatory frameworks to ensure that the underlying Large Language Models (LLMs) are used ethically and responsibly. Integrating ordoliberal constitutional economics with AI ethics helps to create such frameworks through system prompts, reinforcement learning, and non-fine-tunable learning. Constitutional AI aims to embed ethical principles and robust safeguards into AI systems so that they operate within pre-defined boundaries and prioritize safety, legality, and human rights. A focus on the regulation and transparency of system instructions embeds ethical considerations and compliance requirements directly into the operational core of AI systems and can thereby shape AI outcomes proactively.

Isaac Asimov’s “Three Laws of Robotics” have long been a touchstone in discussions of AI and (computer) ethics. Introduced in his 1942 short story “Runaround” and popularized in subsequent works, the laws were designed to ensure ethical behavior by robots. They reflected an early attempt to create a legal and ethical framework for AI systems and technologies.

With the rise of LLMs, this effort has returned with renewed urgency. This is particularly the case in the E.U., which, with its recently finalized AI Act, is seeking to position itself as a leading global standard-setter for “secure, trustworthy, and ethical AI.” In this context, OpenAI’s publication in May 2024 of the first draft of its “Model Spec,” a comprehensive guide to determining the future behavior of its models, has so far received insufficient attention. The document proposes rules governing how LLMs should operate and outlines further goals, defaults, and exceptions designed to maximize the safety, legality, and usability of AI.

Integration of AI Ethics and Constitutional Economics

Recent research compares the persuasive strategies of LLM-generated and human-generated arguments by examining cognitive effort and moral-emotional language. Given the potential for LLMs to affect the integrity of information and shape democratic discourse, it is vital to establish and implement legal and ethical guidelines for their use.

Building on the guidelines proposed by OpenAI, this paper explores how AI ethics principles can be integrated with constitutional economics. How can the ordoliberal principles of constitutional economics be applied to the development of LLMs to ensure they are both economically efficient and socially beneficial? What potential conflicts might arise between computational goals and human instructions, and how can these be effectively managed through a constitutional AI framework? This research aims to sketch an ordoliberal framework to govern LLM behavior, ensuring that it is consistent with democratic values, business-ethical principles, and human rights.

Policy and Governance Implications

In essence, this research aims to propose an updated and democratically legitimized form of the “laws of robotics” for the age of generative AI. The paper discusses how model prompts might be connected to “mini-publics” to ensure alignment with democratic values and societal norms.

The paper is structured as follows: Section 2 provides an overview of the academic literature on AI ethics, focusing on “constitutional AI” and “AI guardrails.” Section 3 begins with a detailed critique of the OpenAI Model Spec, describing its goals, rules, and hierarchical structure.