Meta AI's Brain2qwerty: A milestone in non-invasive brain-to-text decoding

The development of Brain2qwerty by Meta AI represents significant progress in the field of brain-computer interfaces (BCIs). Using magnetoencephalography (MEG) or electroencephalography (EEG), the system converts brain signals into text, achieving a character accuracy of up to 81% under optimal conditions. Although the technology is still under development, it shows great potential, especially for people with speech or movement disorders who need new communication channels.

Advancements in Brain-Computer Interfaces

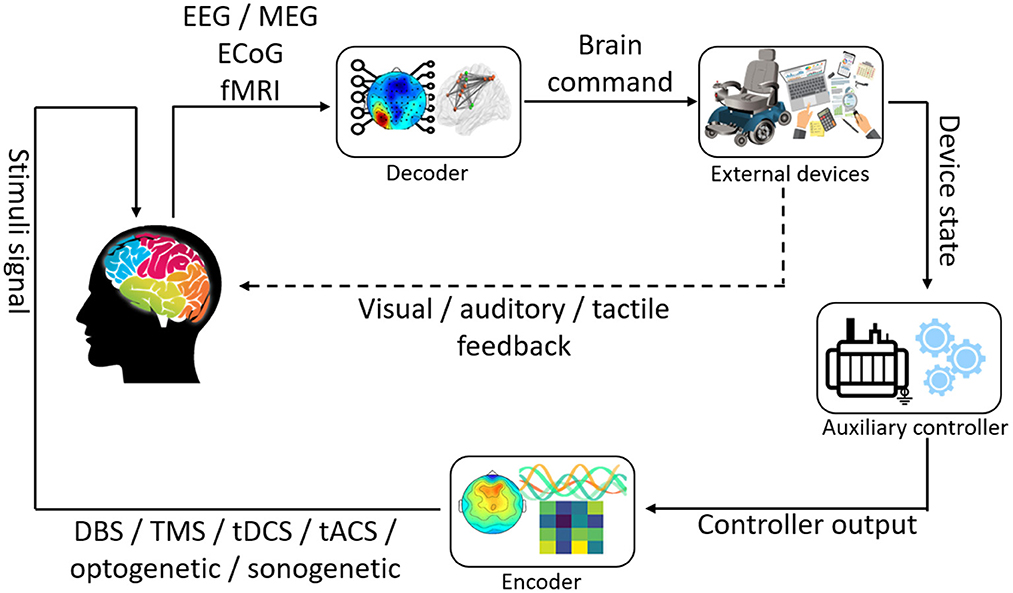

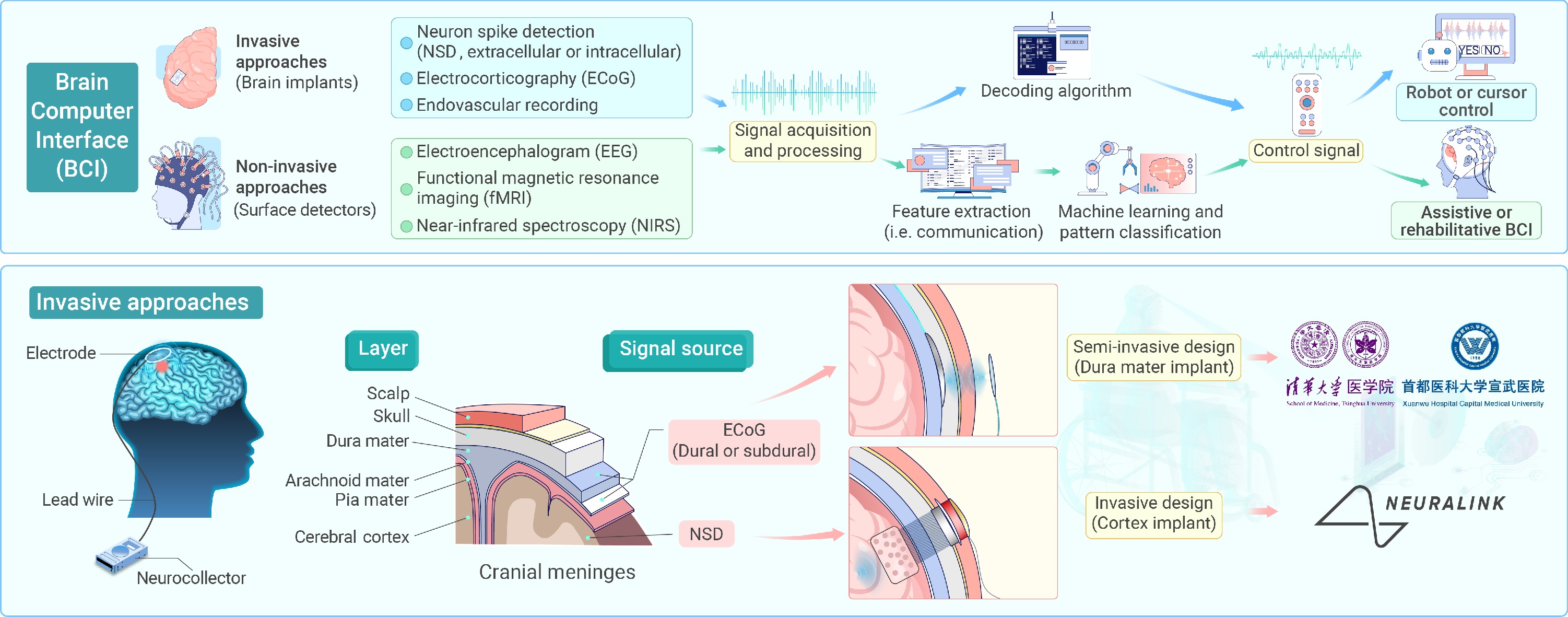

Brain-computer interfaces aim to establish direct communication channels between the human brain and external devices. While invasive methods with implanted electrodes offer high accuracy, they require surgery and carry risks such as infection. Non-invasive alternatives like EEG and MEG are safer but have historically struggled with signal quality. Brain2qwerty from Meta AI aims to address this by achieving a comparatively low error rate with MEG-based decoding.

EEG measures electrical fields on the scalp, while MEG records magnetic fields of neuronal activity. MEG provides higher spatial resolution and is less susceptible to signal distortions compared to EEG. This explains why Brain2qwerty with MEG achieves a lower error rate. However, the high cost and weight of MEG devices limit their accessibility for broad use.

The Brain2qwerty System

Brain2qwerty is trained on data from healthy subjects who typed sentences while their brain activity was recorded in MEG scanners, allowing the system to learn the neural signature of each keyboard character. The system can also correct typing errors, which suggests that it draws on cognitive as well as purely motor information. In tests, Brain2qwerty's average character error rate with MEG was lower than that of EEG-based decoding.
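The article does not spell out how the character error rate (CER) is computed. A minimal sketch, assuming the usual definition (Levenshtein edit distance between the decoded text and the typed reference, divided by the reference length), could look like this. The function names and the example sentences are illustrative and are not taken from Meta's work.

```python
def edit_distance(ref: str, hyp: str) -> int:
    """Levenshtein distance: the minimum number of single-character
    insertions, deletions, and substitutions that turn ref into hyp."""
    prev = list(range(len(hyp) + 1))  # distances from the empty prefix of ref
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(
                prev[j] + 1,         # delete r from ref
                curr[j - 1] + 1,     # insert h into ref
                prev[j - 1] + cost,  # substitute r with h (or match)
            ))
        prev = curr
    return prev[-1]


def character_error_rate(ref: str, hyp: str) -> float:
    """CER = edit distance / number of characters in the reference."""
    if not ref:
        raise ValueError("reference text must not be empty")
    return edit_distance(ref, hyp) / len(ref)


# Hypothetical example: what the subject typed vs. what a decoder produced.
typed = "the quick brown fox"
decoded = "the quack brown fax"
cer = character_error_rate(typed, decoded)
print(f"CER: {cer:.3f}")  # two substitutions over 19 characters -> CER: 0.105
```

If character accuracy is read as 1 − CER (an assumption, since the article does not define it), the "up to 81%" figure quoted in the introduction would correspond to a CER of about 19%.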

Unlike traditional BCIs, Brain2qwerty leverages natural motor processes for decoding, reducing cognitive effort and enabling the translation of entire sentences from non-invasive brain signals.

Challenges and Future Prospects

While the technology shows promise, concerns about data privacy and commercialization remain. Meta emphasizes that Brain2qwerty only captures intentional movements, not unconscious thoughts. Ongoing research focuses on transfer learning and portable MEG systems to enhance usability.

Integration with language models like GPT-4 could further enhance Brain2qwerty's capabilities. The potential applications for patients with communication limitations are vast, but further advances in hardware and AI are needed to realize this vision.

Conclusion

Meta's Brain2qwerty demonstrates how deep learning can significantly improve non-invasive BCIs. While still in development, the technology opens the door to safe communication aids and offers hope for diverse user groups. Continued research will be crucial for closing the gap with invasive systems and for establishing ethical guidelines.