Meta can't tell AI-generated images from real photos

Artificial intelligence is now prevalent in various aspects of our lives. The recent surge in AI applications, particularly with the release of OpenAI's ChatGPT, has captured consumer interest. Major players like Google and Meta have also entered the AI arena with their own platforms, offering a range of AI tools to the public, including those capable of generating realistic photos and videos.

The Challenge of Distinguishing Real from AI-Generated Content

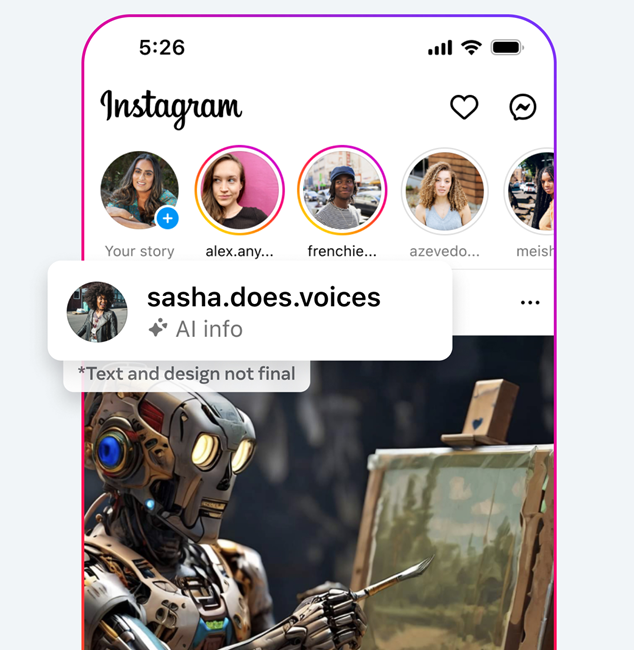

As AI tools have advanced, the line between real and AI-generated content has become increasingly blurred. In response, Meta introduced the “Made with AI” labeling system on Instagram, Facebook, and Threads. Although the label is meant to help users discern the origin of an image, the system does not always identify images on Meta's platforms accurately.

According to a report by TechCrunch, Meta has been applying the “Made with AI” label to images that were not actually created with AI. The issue is complicated by the fact that many images online undergo some form of editing or alteration. Mislabeling may therefore stem not only from outright misidentification but also from post-production edits applied to otherwise genuine photos.

For photographers, the mislabeling can be frustrating, especially when their work has not involved AI at all. Some speculate that changes to Adobe's editing software, particularly its cropping tool, may contribute to the problem.

Image editing has evolved significantly with the arrival of AI tools that can manipulate visuals with minimal effort. Some edits may seem minor, yet they technically involve AI manipulation. The debate over labeling edited images continues, with opinions divided on whether every alteration, no matter how slight, should be disclosed as AI-generated.

The Impact on Photographers and Image Editing

Currently, Meta's labeling does not distinguish between images generated entirely by AI and images that were merely edited with AI tools. The company applies the label whenever it detects industry-standard signals indicating AI generation or editing by third-party AI tools. Meta says it is collaborating with other companies to improve its labeling process, with the aim of accurately informing users about content created with AI.

While the existing labeling system remains in place, creators who use AI tools for editing may find their work labeled as AI-generated. These practices may change as the technology progresses and Meta refines its systems. The company is considering feedback so that the label more accurately reflects the degree of AI involvement in an image.

Meta's Approach and Ongoing Developments

As we look to the future, the landscape of AI-generated content and its identification will continue to develop, potentially leading to more refined labeling practices and improved transparency for users.