Leaked docs show how Meta's AI is trained to be safe, be 'flirty,' and ...

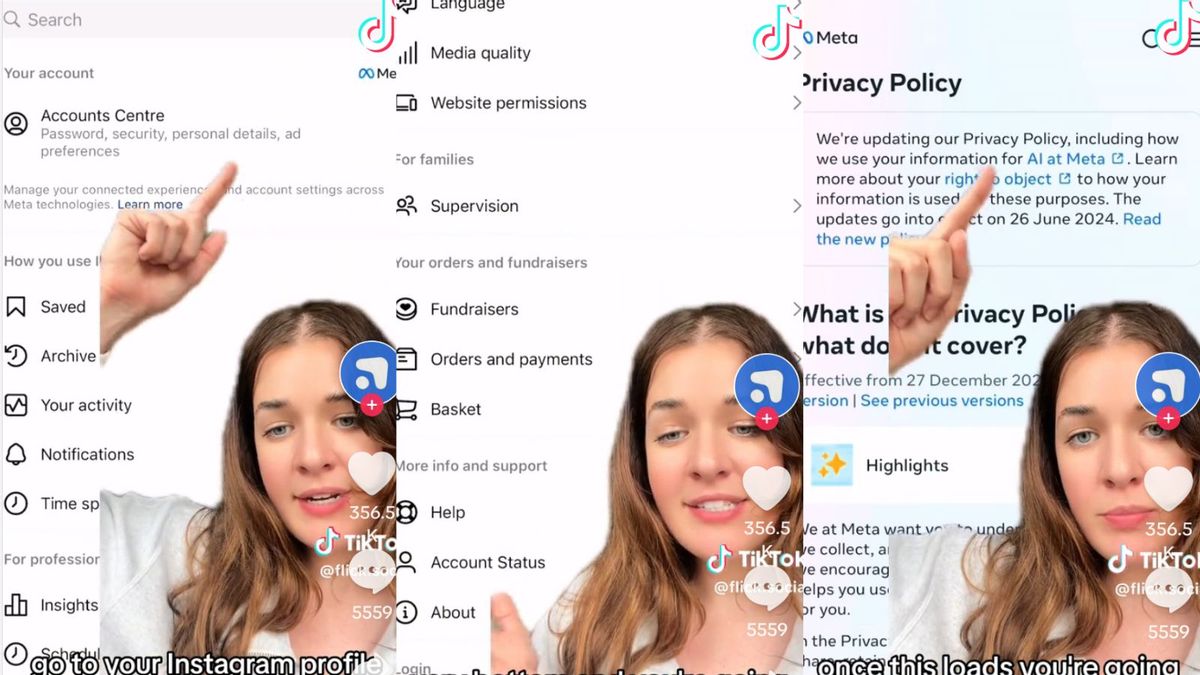

Training AI models to be both fun and safe is a delicate balance, and tech giants like Meta are still working to get it right. Recently leaked training documents from Scale AI, a prominent data-labeling contractor, offer a look at how Meta approaches this challenge.

Insights from the Leaked Documents

The leaked documents, obtained by Business Insider, shed light on the internal training processes for contractors working on Meta's AI. They set out specific guidelines for evaluating user prompts and feedback, and sort prompts into tiers based on sensitivity and appropriateness.

Classification of User Prompts

Contractors were tasked with classifying user prompts as either "tier one," to be rejected outright because of sensitive or illicit content, or "tier two," to be evaluated carefully before proceeding. Topics such as hate speech, sexually explicit content, and dangerous behavior fell under tier one, meaning zero tolerance for such material.

Tier two prompts, by contrast, allowed more flexibility: they permitted discussion of sensitive subjects such as conspiracy theories, youth issues, and educational sexual content. The aim was to let the AI engage with difficult topics without compromising safety.
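The two-tier rule can be illustrated as a simple triage function. This is a minimal sketch, not Meta's or Scale AI's actual tooling: it assumes each prompt has already been labeled with topic tags by a human, and the tag names, the `triage` function, and the `UNFLAGGED` outcome for prompts that touch neither tier are hypothetical. The topic lists contain only the examples reported above.

```python
from enum import Enum


class Tier(Enum):
    TIER_ONE = "reject"              # rejected outright
    TIER_TWO = "proceed_with_care"   # evaluated carefully before proceeding
    UNFLAGGED = "unflagged"          # hypothetical: no sensitive topic detected


# Hypothetical tag names built from the reported examples only;
# the real guidelines are presumably far more detailed.
TIER_ONE_TOPICS = frozenset({"hate_speech", "sexually_explicit", "dangerous_behavior"})
TIER_TWO_TOPICS = frozenset({"conspiracy_theories", "youth_issues", "educational_sexual_content"})


def triage(prompt_topics: set[str]) -> Tier:
    """Return the most restrictive tier that applies to a prompt's topic tags."""
    if prompt_topics & TIER_ONE_TOPICS:
        return Tier.TIER_ONE     # any tier-one topic wins, regardless of others
    if prompt_topics & TIER_TWO_TOPICS:
        return Tier.TIER_TWO
    return Tier.UNFLAGGED


print(triage({"conspiracy_theories"}))          # Tier.TIER_TWO
print(triage({"youth_issues", "hate_speech"}))  # Tier.TIER_ONE
```

Checking tier one first reflects the "zero tolerance" framing: a prompt that mixes a permitted sensitive subject with a banned one is still rejected.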

Reinforcement Learning from Human Feedback

Meta's training relies on reinforcement learning from human feedback (RLHF), a widely used technique in which human contractors rate or compare a model's responses and those judgments are used to steer the model toward preferred behavior. By collecting and analyzing this feedback, Meta aims to refine its models and improve their ability to handle diverse scenarios.
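One common way RLHF turns human comparisons into a training signal is a reward model trained with a pairwise (Bradley-Terry) loss: when a rater prefers response A over response B, the loss pushes the reward for A above the reward for B. The documents do not say which loss Meta uses, so this is a generic sketch under that assumption; `preference_loss` is a hypothetical name and the reward values stand in for a reward model's scores.

```python
import math


def preference_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Bradley-Terry pairwise loss: -log(sigmoid(reward_chosen - reward_rejected)).

    Small when the rater-preferred response already scores higher,
    large when the reward model ranks the pair the wrong way round.
    """
    margin = reward_chosen - reward_rejected
    # -log(sigmoid(m)) == log(1 + exp(-m)); branch to avoid overflow for large |m|
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


# A rater preferred the first response; the reward model agrees (low loss)
print(preference_loss(2.0, 0.0))
# The reward model disagrees with the rater (higher loss)
print(preference_loss(0.0, 2.0))
```

Minimizing this loss over many rated pairs yields a reward model, which is then used to fine-tune the chatbot itself with reinforcement learning.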

The documents also revealed that Meta had multiple active projects with Scale AI, focusing on areas such as complex reasoning, natural conversation flow, and voice-based interactions. These projects suggest that Meta is investing in the quality of its AI's responses, not only in their safety.

Ensuring Safety and Responsiveness

While Meta strives to make its AI models engaging and interactive, it also prioritizes safety and ethical considerations. The guidelines instructed contractors to reject prompts that could lead to misinformation or inappropriate behavior.

Contractors were also instructed to keep a respectful, professional tone in their interactions with the AI and to avoid sensitive topics related to hate, violence, religion, and politics. This disciplined approach reflects Meta's effort to build responsible, user-friendly AI.

Challenges and Future Directions

Despite detailed training guidelines, problems still arise when AI systems interact with users in real-world settings. Recent incidents in which Meta's chatbots engaged in inappropriate roleplay raise concerns about the effectiveness of its safety measures and monitoring.

Other AI companies, such as xAI and OpenAI, are also grappling with similar challenges in refining their chatbot personalities and ensuring ethical standards. As the AI landscape evolves, companies will need to adapt their training approaches and safeguards to maintain trust and compliance.

Overall, the leaked training documents provide valuable insights into Meta's AI development processes and the complexities of training AI models to be both entertaining and safe for users.