ChatGPT's power consumption is much lower than estimated

A recent study by Epoch AI has debunked previous estimates about ChatGPT's power consumption, revealing that the most recent model, GPT-4o, consumes only 0.3 watt-hours per query. This figure is ten times lower than the previously cited estimate of 3 watt-hours. Advances in AI technology and better system optimization have enabled this significant reduction in energy consumption.

Epoch AI's recalculation puts a typical query at 0.3 watt-hours, in sharp contrast to earlier estimates of up to 3 watt-hours per interaction. The study attributes the difference to newer hardware and better-optimized AI models, which process each query more efficiently.

At 0.3 watt-hours per query, ChatGPT uses far less energy than many everyday activities. For example, boiling water for tea consumes around 100 watt-hours, while an hour of television viewing consumes approximately 120 watt-hours. The comparison puts a single ChatGPT query into perspective: AI does consume energy, but its footprint is relatively small at the individual level.
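To make the comparison concrete, the short sketch below converts each everyday activity into an equivalent number of queries. It uses only the figures quoted above; the function and variable names are illustrative, not part of the Epoch AI study.

```python
# Figures quoted in the article, all in watt-hours (Wh).
QUERY_WH = 0.3  # one typical GPT-4o query, per the Epoch AI estimate

ACTIVITIES_WH = {
    "boiling water for tea": 100.0,
    "one hour of television": 120.0,
}


def queries_equivalent(activity_wh: float, query_wh: float = QUERY_WH) -> float:
    """Return how many queries use the same energy as one activity."""
    return activity_wh / query_wh


for name, wh in ACTIVITIES_WH.items():
    print(f"{name}: about {queries_equivalent(wh):.0f} queries")
```

On these numbers, boiling water for tea is equivalent to roughly 333 queries, and an hour of television to about 400.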

To better understand the impact of ChatGPT, it’s helpful to put it in context. At 0.3 watt-hours, a ChatGPT query uses about as much energy as a single Google search, which underscores that, despite its advanced processing, each query has a tiny impact compared with more common activities. For comparison, charging an iPhone 15 once a day for a year consumes around 4.7 kilowatt-hours in total, or roughly 13 watt-hours per charge, far more than a typical ChatGPT query.

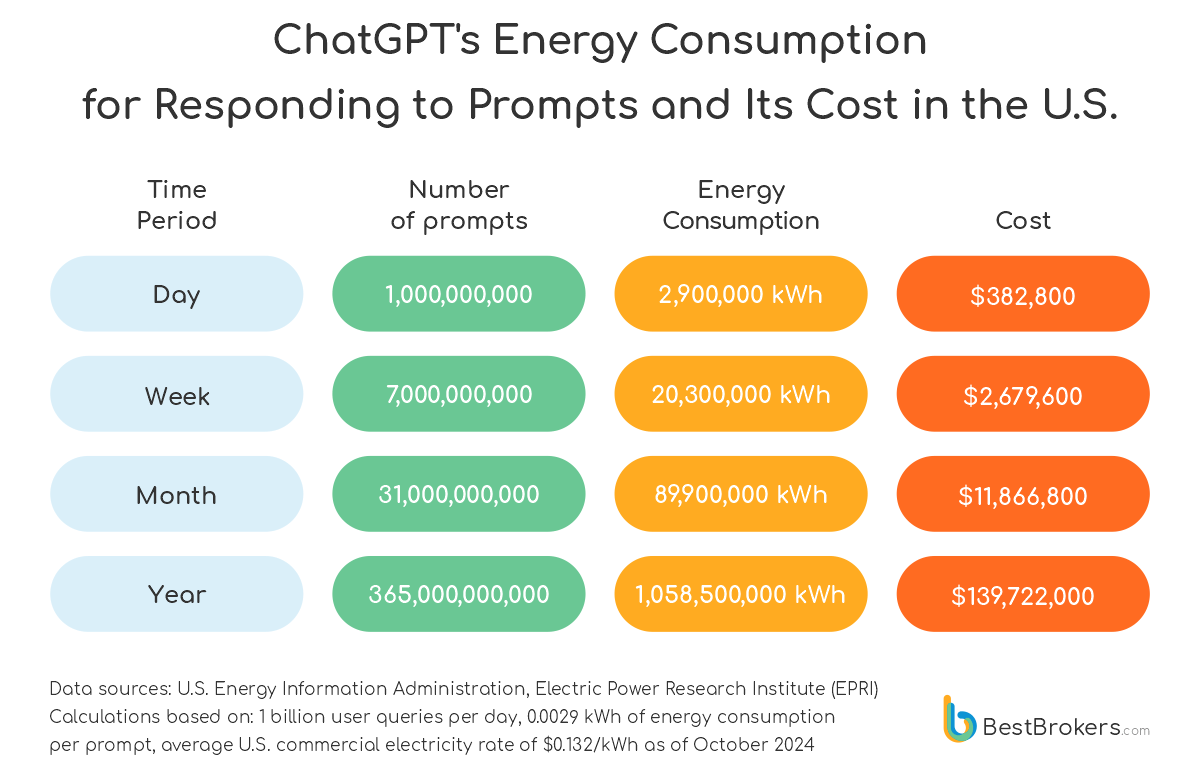

However, despite individual consumption being low, the cumulative effect of millions of daily queries creates a considerable energy footprint. This fact highlights the need for technology companies to continue investing in improving the efficiency of their systems to minimize the environmental impact of AI.
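The scaling is linear, so it can be sketched in a few lines. The query volumes below are hypothetical and chosen only to illustrate the effect; they are not figures from the study.

```python
QUERY_WH = 0.3  # per-query estimate quoted in the article, in watt-hours


def daily_energy_kwh(queries_per_day: float, query_wh: float = QUERY_WH) -> float:
    """Total daily energy in kilowatt-hours for a given query volume."""
    return queries_per_day * query_wh / 1000.0


# Hypothetical volumes (assumptions for illustration only).
for volume in (1_000_000, 10_000_000, 100_000_000):
    print(f"{volume:>11,} queries/day -> {daily_energy_kwh(volume):>8,.0f} kWh/day")
```

Every additional million queries per day adds about 300 kWh of daily consumption at the 0.3 watt-hour rate.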

The study also highlights the key factors behind the lower figure. Replacing older Nvidia A100 chips with newer H100 chips has been instrumental in reducing energy consumption, as has the optimization of AI systems. Part of the difference also comes from the calculation method itself: the new estimate assumes servers run at 70% of capacity rather than at maximum utilization.

These advances reflect an ongoing effort by the industry to make artificial intelligence more energy-efficient as the demand for data processing and AI applications grows. Power consumption per query is expected to keep declining without compromising system performance or capacity.

Although the energy consumption of each query is low, mass global adoption of AI could have a significant long-term impact. It is estimated that ChatGPT's annual energy consumption could reach 226.8 GWh, enough to fully charge 3.13 million electric vehicles. While this is relatively small compared to other industries, the global scale of AI use raises concerns about energy consumption as more people constantly use these systems.
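The annual figure can be unpacked with some simple arithmetic. The sketch below uses only the numbers quoted above; the implied daily query counts are back-of-the-envelope values that depend entirely on which per-query estimate is assumed, and they are not measurements of actual traffic.

```python
ANNUAL_GWH = 226.8    # annual estimate quoted in the article
EVS_CHARGED = 3.13e6  # electric vehicles quoted in the article

annual_wh = ANNUAL_GWH * 1e9

# Battery capacity per vehicle implied by the comparison, in kWh.
kwh_per_ev = annual_wh / EVS_CHARGED / 1000.0

# Average daily energy implied by the annual total, in MWh.
daily_mwh = annual_wh / 365 / 1e6


def implied_queries_per_day(query_wh: float) -> float:
    """Daily queries needed to reach the annual total at a given Wh per query."""
    return annual_wh / 365 / query_wh


print(f"Implied battery size: {kwh_per_ev:.1f} kWh per vehicle")
print(f"Average daily energy: {daily_mwh:.1f} MWh")
for query_wh in (0.3, 3.0):  # new and old per-query estimates
    queries = implied_queries_per_day(query_wh)
    print(f"At {query_wh} Wh/query: {queries:.2e} queries/day")
```

The comparison implies a battery of about 72.5 kWh per vehicle and an average of about 621 MWh per day. The implied query volume differs by a factor of ten between the two per-query estimates, so the annual total is only as reliable as the per-query figure behind it.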

The concern is reinforced by the projection that AI energy consumption could increase to 134 terawatt-hours by 2027, which suggests that as models become more complex and expand globally, total energy consumption could outpace efficiency gains. The AI industry is responding to these challenges by investing in more efficient hardware and renewable energy sources to mitigate the environmental impacts of its growth.

Energy efficiency is crucial for the future of AI, especially if the technology continues to expand into diverse areas, from healthcare to education and entertainment. Although the energy consumption of ChatGPT individually is low, the global scale of its use can generate substantial consumption. To counteract this impact, it is essential that technology companies not only improve the efficiency of their systems but also commit to using renewable energy sources to power their data centers.

As demand for AI grows, the industry is likely to keep prioritizing energy efficiency as a key factor in the development of new technologies.