“Why Does ChatGPT 'Delve' So Much?”: FSU Researchers Begin to Uncover the Reasoning Behind ChatGPT's Responses

With nearly 70 million monthly ChatGPT users in the U.S., large language models and chatbots have become part of daily life, corporate initiatives, research methods, and more. Despite this widespread use, much about how these models are trained, and why they respond the way they do, remains poorly understood.

Exploring ChatGPT's Language Patterns

Florida State University researchers Tom Juzek and Zina Ward set out to explain why ChatGPT exhibits certain language patterns. Their study, “Why Does ChatGPT ‘Delve’ So Much? Exploring the Sources of Lexical Overrepresentation in Large Language Models,” was recently published in the Proceedings of the 31st International Conference on Computational Linguistics. It offers some of the first scientific evidence of why ChatGPT overuses certain words.

Investigating Language Model Behavior

Juzek, a computational linguist, and Ward, a philosopher of science, identified 21 words whose frequency in scientific abstracts had risen sharply over the previous four years with no clear explanation. They found evidence that human preference played a significant role in the overuse of these words.

By comparing human-written scientific abstracts from 2020 and 2024, the team pinpointed words whose usage had surged. They then built a database of abstracts and asked ChatGPT to rewrite them. Comparing the original abstracts with the ChatGPT versions revealed a correlation between the words that had spiked in usage and the words the language model overused.
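
The article does not describe the researchers' exact statistics or thresholds, so the following is only a minimal Python sketch of the general idea under stated assumptions: frequency change is measured as the ratio of add-one-smoothed relative word frequencies, a word "spikes" if that ratio is at least a hypothetical cut-off of 2.0, and the short sentences stand in for real abstracts. The function names `token_counts`, `frequency_ratios`, and `spiking_words` are illustrative, not taken from the study.

```python
import re
from collections import Counter


def token_counts(texts):
    """Count lowercase alphabetic tokens across a list of texts."""
    counts = Counter()
    for text in texts:
        counts.update(re.findall(r"[a-z]+", text.lower()))
    return counts


def frequency_ratios(old_texts, new_texts, alpha=1.0):
    """Smoothed relative frequency in new_texts divided by that in old_texts.

    Add-alpha smoothing (an assumption of this sketch) keeps the ratio finite
    for words that never appear in one of the two corpora.
    """
    old, new = token_counts(old_texts), token_counts(new_texts)
    vocab = set(old) | set(new)
    old_total = sum(old.values()) + alpha * len(vocab)
    new_total = sum(new.values()) + alpha * len(vocab)
    return {
        w: ((new[w] + alpha) / new_total) / ((old[w] + alpha) / old_total)
        for w in vocab
    }


def spiking_words(old_texts, new_texts, min_ratio=2.0):
    """Sorted list of words whose smoothed frequency rose by at least min_ratio."""
    ratios = frequency_ratios(old_texts, new_texts)
    return sorted(w for w, r in ratios.items() if r >= min_ratio)


# Toy stand-ins (hypothetical) for 2020 abstracts, 2024 abstracts,
# and ChatGPT rewrites of the 2020 abstracts.
old_abstracts = [
    "we look into protein folding in yeast",
    "we look into gene expression in mice",
]
new_abstracts = [
    "we delve into intricate protein folding in yeast",
    "we delve into intricate gene expression in mice",
]
llm_rewrites = [
    "this study will delve into intricate protein folding in yeast",
    "we delve into the intricate landscape of gene expression in mice",
]

human_spikes = set(spiking_words(old_abstracts, new_abstracts))
llm_overuse = set(spiking_words(old_abstracts, llm_rewrites))
print(sorted(human_spikes & llm_overuse))  # ['delve', 'intricate']
```

The overlap between the two sets plays the role of the comparison described above: words that rose in human-written abstracts and that the model also favors when rewriting. A real analysis would need far larger corpora and a proper significance test rather than a fixed ratio.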

Impact of Human Preference Data

To assess how human preference and feedback influence the language model's word choice, the researchers ran an online experiment. Participants reacted unfavorably to some buzzwords, such as ‘delve,’ suggesting that the link between human preferences and language model outputs is not straightforward.

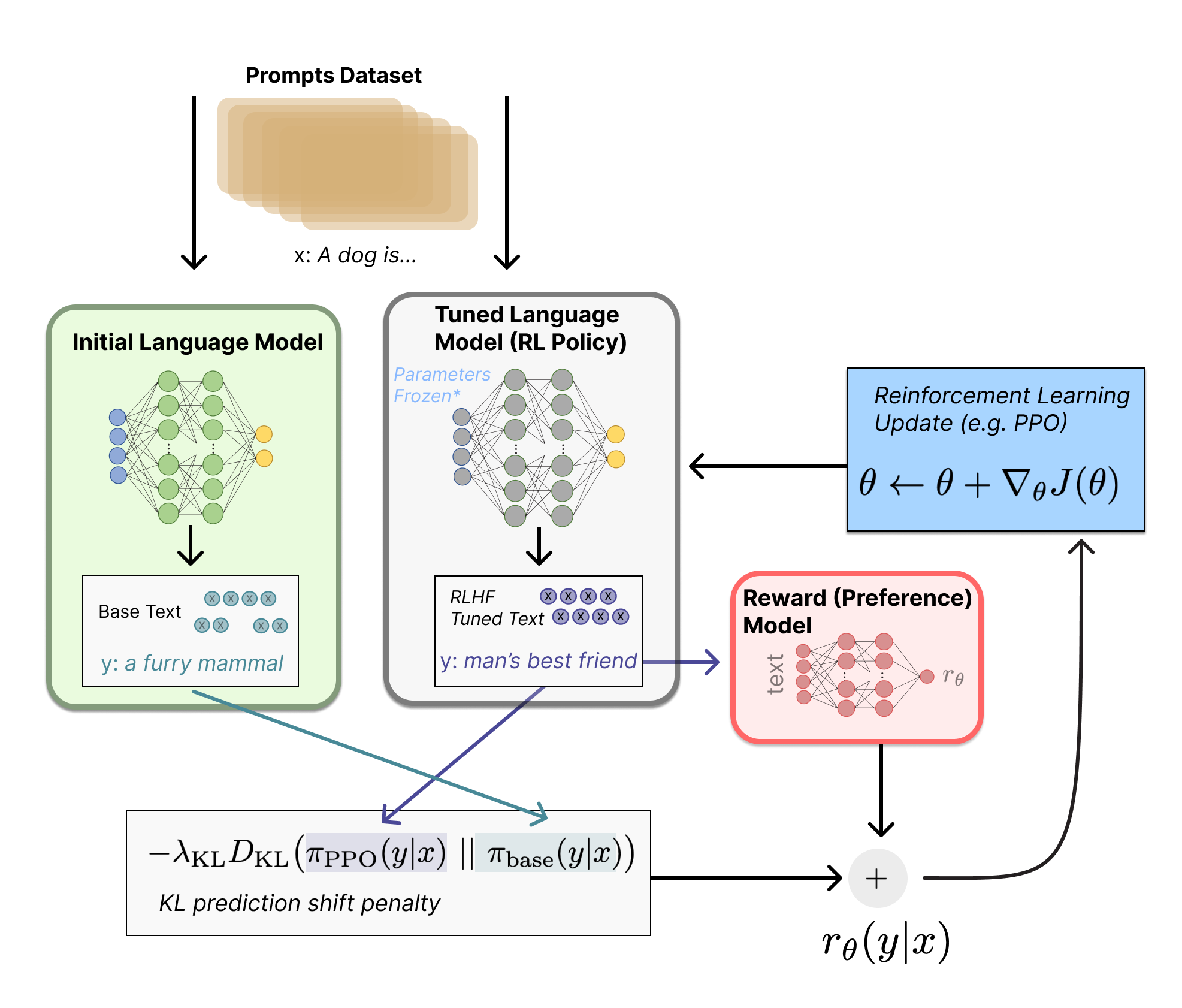

In addition, a comparison of two versions of Meta’s Llama model found that the version trained on human preference data was less “surprised” by abstracts containing these buzzwords than the base model was. This finding suggests that human preference data shapes the lexical choices of large language models.
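
The article does not name the measure behind “surprise.” A common way to quantify how surprised a language model is by a text is surprisal, the negative log probability of each token, or its exponentiated average, perplexity. The sketch below assumes the per-token probabilities have already been obtained from a model (that step is out of scope here); the function names are illustrative and not the researchers' code.

```python
import math


def mean_surprisal(token_probs):
    """Average surprisal in bits: -log2(p) for each token, averaged over the text."""
    if not token_probs:
        raise ValueError("need at least one token probability")
    return sum(-math.log2(p) for p in token_probs) / len(token_probs)


def perplexity(token_probs):
    """Perplexity = 2 ** (mean surprisal in bits); lower means less 'surprised'."""
    return 2 ** mean_surprisal(token_probs)


# Illustrative probabilities only: tokens with p = 0.5, 0.25, 0.125
# carry 1, 2, and 3 bits of surprisal respectively.
probs = [0.5, 0.25, 0.125]
print(mean_surprisal(probs))  # 2.0
print(perplexity(probs))      # 4.0
```

Comparing two models then amounts to scoring the same buzzword-heavy abstract with each one and checking which yields lower surprisal or perplexity.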

Future Research Directions

Presenting their work at the 31st International Conference on Computational Linguistics, the researchers emphasized its implications across linguistics, computational linguistics, computer science, data science, philosophy, and ethics. As generative AI continues to spread, understanding the interplay between the technology and human language remains crucial.

Juzek and Ward plan to continue applying tools and methods from computational linguistics to better understand how large language models work.

For more information on the research conducted at the Department of Modern Languages and Linguistics, visit mll.fsu.edu. Explore the work undertaken in the Department of Philosophy at philosophy.fsu.edu. To learn about the Interdisciplinary Data Science Master’s Degree Program, visit datascience.fsu.edu.