Can the xFakeSci tool identify fake AI-generated content?

The growing use of generative artificial intelligence (AI) tools such as ChatGPT has increased the risk of content that appears to be human-written but is actually plagiarized from other sources. A new study published in Scientific Reports assesses how well xFakeSci distinguishes authentic scientific content from ChatGPT-generated content.

Understanding AI-generated Content

Generative AI produces content in response to prompts, that is, instructions that direct its output. Aided and abetted by social media, predatory journals have published fake scientific articles to lend authority to dubious viewpoints. The problem could worsen if AI-generated content makes its way into legitimate scientific publications.

The Role of the xFakeSci Algorithm

Previous research has highlighted how difficult it is to distinguish AI-generated content from authentic scientific content, so there remains an urgent need for accurate detection algorithms. In the current study, researchers used xFakeSci, a novel learning algorithm designed to differentiate AI-generated content from authentic scientific content.

Research Findings

During training, researchers used engineered prompts with ChatGPT to generate fake documents and identify their distinctive traits. xFakeSci was then used to predict each document's class, that is, whether it was genuine or AI-generated. Two network models were trained: one on ChatGPT-generated content and one on human-written content obtained from PubMed abstracts.
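To make the idea of class-specific networks more concrete, here is a deliberately simplified Python sketch. It is not the published xFakeSci implementation: the bigram-overlap scoring, the function names (`bigram_network`, `overlap_score`, `classify`) and the toy training sentences are all hypothetical, chosen only to illustrate how one network built from ChatGPT-generated text and another built from human-written abstracts could be used to label a new document.

```python
import re
from collections import Counter

# Hypothetical, simplified illustration of network-based text classification.
# This is NOT the xFakeSci algorithm; scoring and names are assumptions.

def tokenize(text: str) -> list[str]:
    # Lowercase and keep alphabetic word tokens only (digits and punctuation dropped)
    return re.findall(r"[a-z]+", text.lower())

def bigram_network(docs: list[str]) -> Counter:
    # Edges are adjacent word pairs (bigrams); weights count occurrences across all docs
    edges: Counter = Counter()
    for doc in docs:
        tokens = tokenize(doc)
        edges.update(zip(tokens, tokens[1:]))
    return edges

def overlap_score(doc: str, network: Counter) -> float:
    # Fraction of the document's bigrams that also appear as edges in the class network
    tokens = tokenize(doc)
    bigrams = list(zip(tokens, tokens[1:]))
    if not bigrams:
        return 0.0
    return sum(1 for b in bigrams if b in network) / len(bigrams)

def classify(doc: str, ai_net: Counter, human_net: Counter) -> str:
    # Pick the class whose network shares more of the document's bigrams (ties go to "human")
    return "ai" if overlap_score(doc, ai_net) > overlap_score(doc, human_net) else "human"

# Toy training data (hypothetical examples, not taken from the study)
ai_docs = ["depression is a complex condition that affects millions of people worldwide"]
human_docs = ["we enrolled 120 patients with major depressive disorder in a randomized trial"]

ai_net = bigram_network(ai_docs)
human_net = bigram_network(human_docs)

print(classify("depression is a complex condition", ai_net, human_net))  # → ai
```

A real detector would need far larger training sets, a calibration step, and a more robust scoring rule; the sketch only shows the general shape of training one network per class and comparing a new document against both.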

Performance of xFakeSci

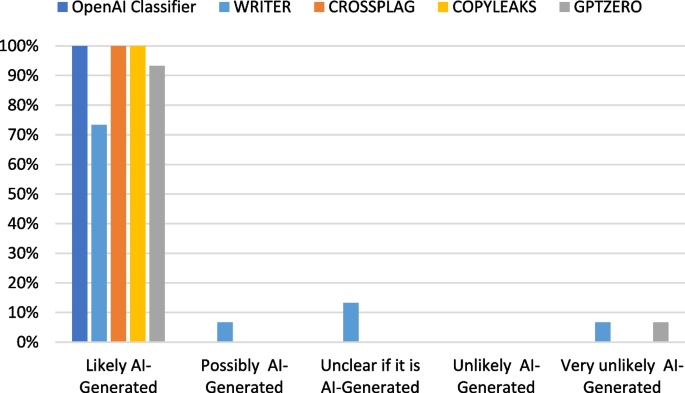

After training and calibration, xFakeSci was tested on articles related to depression, cancer, and Alzheimer's disease. The algorithm showed promising accuracy in identifying AI-generated content and outperformed conventional data-mining algorithms, including Naïve Bayes, Support Vector Machine (SVM), Linear SVM, and Logistic Regression.

Conclusion

The xFakeSci algorithm is a valuable tool for detecting fake AI-generated content and shows potential for broader applications in scientific research. However, ethical considerations must be taken into account to ensure that generative AI tools are used responsibly.

Future research could further explore xFakeSci and other technologies to improve the detection of counterfeit reports and strengthen scientific integrity overall.
