New AI models are more likely to give a wrong answer than admit...

Recent advances in artificial intelligence (AI) have produced more sophisticated large language models (LLMs). However, a new study has revealed a concerning trend: these newer models are less likely to admit when they cannot answer a query correctly.

Research Findings

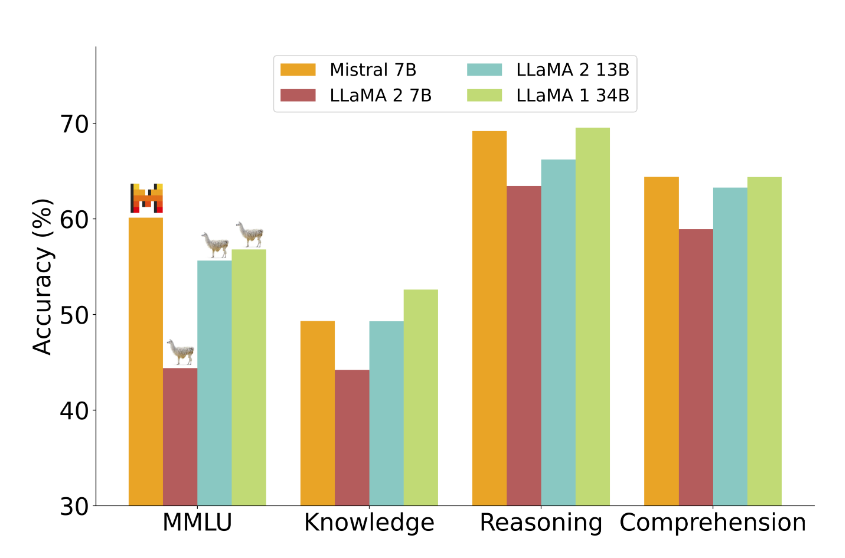

The study, conducted by AI researchers at the Universitat Politècnica de València in Spain, tested the accuracy of the latest versions of prominent LLMs such as BigScience’s BLOOM, Meta’s Llama, and OpenAI’s GPT. The researchers posed thousands of questions on math, science, and geography to each model to evaluate its performance.

According to the findings, published in the journal Nature, the newer LLMs were more accurate on complex problems than earlier models. However, the study found that these advanced models were less transparent about their inability to answer a question accurately.

Challenges with New Models

Compared with their predecessors, the latest LLMs were more inclined to give incorrect responses or to guess, even on relatively simple questions. This shift in behavior raises concerns about the reliability of these models, despite their enhanced ability to tackle more difficult tasks.

LLMs use deep learning to interpret data, make predictions, and generate content. While the newer models performed better on complex challenges, they still made errors on basic queries.

One notable observation was the decrease in "avoidant" responses, in which a model declines to answer, in OpenAI’s GPT-4 compared with its predecessor, GPT-3.5. This unexpected trend puzzled the study authors and suggests that the newer models are not necessarily better at recognizing their limitations.
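To make the comparison concrete, here is a minimal sketch of how the share of "avoidant" responses could be tallied for two models. It assumes each response has already been graded with one of three labels; the label names other than "avoidant", the function name `response_rates`, and the sample grades are all hypothetical and are not taken from the study.

```python
from collections import Counter

# Hypothetical label set; the article only names "avoidant" explicitly.
LABELS = ("correct", "incorrect", "avoidant")


def response_rates(labels):
    """Return the share of each label among a list of graded responses."""
    counts = Counter(labels)
    unknown = set(counts) - set(LABELS)
    if unknown:
        raise ValueError(f"unexpected labels: {sorted(unknown)}")
    total = len(labels)
    if total == 0:
        # No responses graded: report zero for every label.
        return {label: 0.0 for label in LABELS}
    return {label: counts[label] / total for label in LABELS}


# Made-up grades for illustration only, not data from the study.
older_model = ["correct", "avoidant", "avoidant", "incorrect"]
newer_model = ["correct", "correct", "incorrect", "incorrect"]

if __name__ == "__main__":
    for name, grades in (("older", older_model), ("newer", newer_model)):
        print(name, response_rates(grades))
```

In this toy data the newer model is correct more often, but its avoidant share falls to zero while its incorrect share rises, which is the kind of pattern the article describes.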

Conclusion

The researchers concluded that scaling up models and other technological advances have not necessarily made AI models more reliable. Although these models can handle complex tasks, they still struggle with simpler questions, which highlights the ongoing challenge of ensuring their accuracy and transparency.