ChatGPT-4 passes UK medical licensing exam but falters in real-world clinical decision-making, study reveals

While ChatGPT-4 excels at multiple-choice medical exams, new research reveals its weaknesses in complex clinical decision-making, raising big questions about the future of AI-powered healthcare. Study: Assessing ChatGPT 4.0’s Capabilities in the United Kingdom Medical Licensing Examination (UKMLA): A Robust Categorical Analysis. Image Credit: Collagery / Shutterstock

In a recent study published in the journal Scientific Reports, researchers evaluated ChatGPT-4’s capabilities on the United Kingdom Medical Licensing Assessment (UKMLA), highlighting both strengths and limitations across question formats and clinical domains.

Strengths and Limitations of ChatGPT-4

Artificial intelligence (AI) continues to reshape healthcare and education. With the UKMLA soon becoming a standardized requirement for new doctors in the UK, determining whether AI models like ChatGPT-4 can meet clinical benchmarks is increasingly important. While AI shows promise, questions remain about its ability to replicate human reasoning, empathy, and contextual understanding in real-world care.

Distractors tripped up AI logic: In 8 cases, ChatGPT answered correctly without multiple-choice options but failed when presented with plausible wrong answers, exposing vulnerabilities to exam-style trick questions.

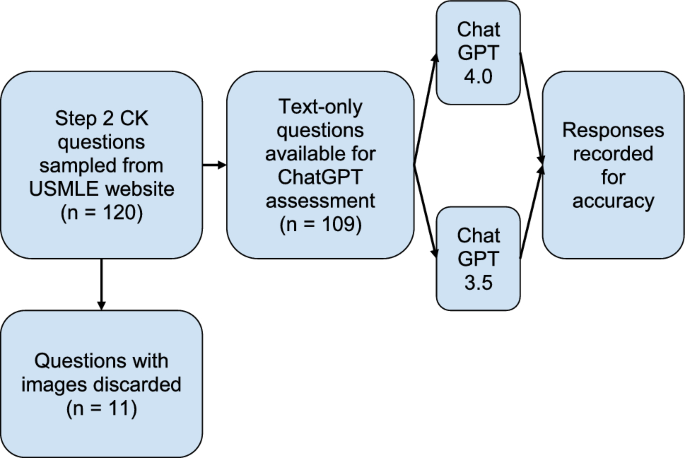

The researchers tested ChatGPT-4 on 191 multiple-choice questions from the Medical Schools Council’s mock UKMLA exam. The questions spanned 24 clinical areas and were split across two 100-question papers. Nine image-based questions were excluded due to ChatGPT’s inability to interpret images, which the authors note as a limitation.

Each question was tested with and without multiple-choice options. Questions were further categorized by reasoning complexity (single-step vs. multi-step) and clinical focus (diagnosis, management, pharmacology, etc.). Responses were labeled as accurate, indeterminate, or incorrect. Statistical analysis included chi-squared and t-tests.

Pharmacology answers lacked certainty: Nearly 35% of drug-related responses were marked “indeterminate” without answer prompts, reflecting struggles to confidently apply dosing or treatment protocols.

Performance Analysis

ChatGPT-4 demonstrated a broad knowledge base, especially in diagnostic tasks, and performed at or above the level expected of medical graduates in structured assessments. However, it struggled with contextual clinical reasoning, especially in open-ended or multi-step management scenarios. This suggests the model may support early-stage clinical assessments but lacks the nuance required for autonomous decision-making.

Limitations include a lack of training on UK-specific clinical guidelines, which may have influenced performance on specific UKMLA questions. Furthermore, "hallucinations", fluent but incorrect outputs, pose a risk in clinical use. Ethical concerns include potential depersonalization of care and clinician deskilling due to overreliance on AI.

Conclusion

ChatGPT-4 performs well on structured medical licensing questions, particularly those centered on diagnosis. However, accuracy drops significantly in open-ended and multi-step clinical reasoning, especially in management and pharmacology. While LLMs show promise for supporting education and early-stage clinical support, their current limitations underscore the need for cautious integration, further training on clinical datasets, and ethical safeguards.

Posted in: Device / Technology News | Medical Science News | Medical Research News | Healthcare News