ChatGPT Bombs Accounting and Tax Exams: 47% v. 77% for Students

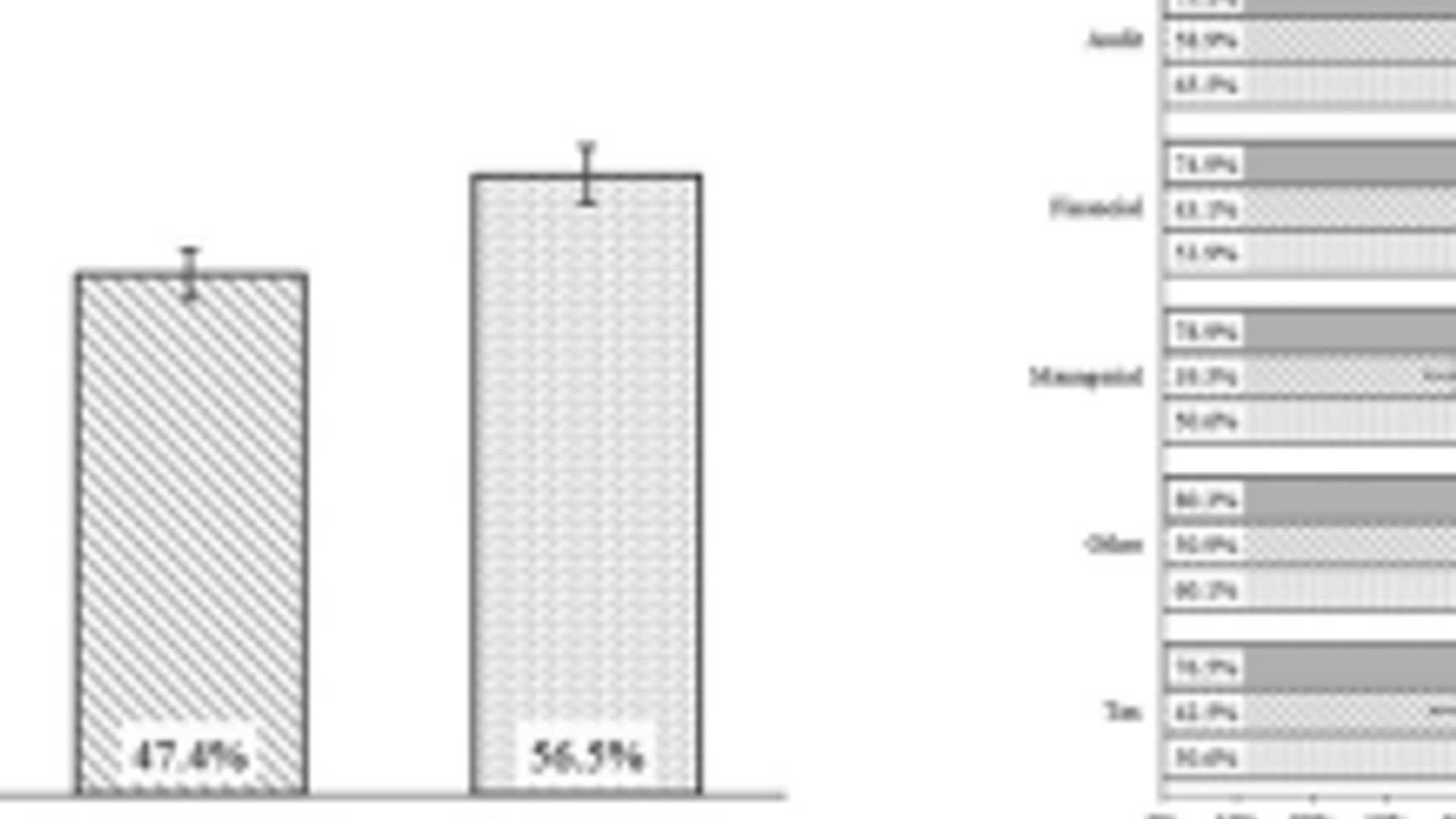

Last month, OpenAI launched GPT-4, its newest AI chatbot product, which passed the bar exam with a score in the 90th percentile and earned a nearly perfect score on the GRE Verbal test. On accounting exams, however, researchers at BYU and 186 other universities tested the original version, ChatGPT, and found that it performed poorly: it scored 47.4%, compared with 76.7% for students.

Despite that poor performance, lead author David Wood, a BYU professor of accounting, said that ChatGPT is a game changer that will improve the teaching process for faculty and the learning process for students. The scores are based on 28,085 questions from accounting assessments and textbook test banks, on which students averaged 76.7% overall and ChatGPT scored 47.4%.

On 11.3% of the questions, ChatGPT scored higher than the student average, particularly on accounting information systems (AIS) and auditing. However, it did poorly on tax, financial, and managerial assessments, possibly because it struggled with the mathematical processes these questions require.

While ChatGPT’s performance on accounting exams is not impressive, it is still generating considerable attention for its ability to answer users’ questions in natural language, and it is expected to improve in the future.