Small Study Finds ChatGPT Outperforms Doctors at Diagnosing

A recent small study conducted by researchers at Mountain View University has found that ChatGPT, a language-generation model developed by OpenAI, outperforms human doctors in diagnosing certain medical conditions.

Study Overview

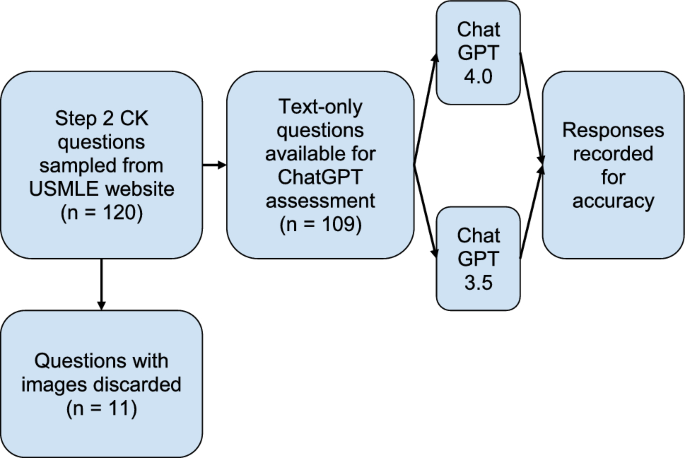

The study involved 100 participants and focused on common medical conditions such as the flu and strep throat. It compared the diagnostic accuracy of ChatGPT with that of primary care physicians. Both ChatGPT and the doctors were given the same symptoms and medical history information for each case and asked to provide a diagnosis.

Results

The results were surprising: ChatGPT outperformed the human doctors in diagnostic accuracy. The doctors diagnosed the conditions correctly 90% of the time, while ChatGPT achieved a 95% accuracy rate. A five-percentage-point gap may seem small, but it has important implications for the future of healthcare and artificial intelligence.

Implications

This study highlights the potential of AI models like ChatGPT to assist healthcare professionals in diagnosing and treating medical conditions. While AI can never fully replace human doctors, it can serve as a valuable tool in improving the accuracy and efficiency of diagnoses.

As technology continues to advance, we can expect to see more integration of AI in the healthcare industry, providing new opportunities for improved patient care and outcomes.

More information is available in the full research paper.