ChatGPT Matches Radiologists in Brain Tumor Diagnosis Accuracy...

Researchers conducted a study to compare the diagnostic accuracy of ChatGPT, a language model based on GPT-4, with that of radiologists in analyzing 150 brain tumor MRI reports. The results showed that ChatGPT achieved an accuracy of 73%, slightly surpassing both neuroradiologists (72%) and general radiologists (68%). Notably, ChatGPT demonstrated the highest accuracy of 80% when interpreting reports authored by neuroradiologists, indicating its potential to assist in medical diagnoses.

Source: Osaka Metropolitan University

Artificial intelligence continues to advance, showcasing its potential to outperform human expertise in various real-world applications. In the realm of radiology, where accurate diagnostics are paramount for effective patient care, large language models like ChatGPT hold promise in enhancing accuracy and providing valuable second opinions.

A research team led by graduate student Yasuhito Mitsuyama and Associate Professor Daiju Ueda from Osaka Metropolitan University’s Graduate School of Medicine conducted a comparative analysis. The study aimed to evaluate the diagnostic performance of ChatGPT, neuroradiologists, and general radiologists using 150 preoperative brain tumor MRI reports written in Japanese.

ChatGPT and each radiologist were asked to provide a set of differential diagnoses and a final diagnosis based on the findings described in the reports. These diagnoses were then compared against the actual pathological diagnosis established after the tumor was removed. ChatGPT achieved an accuracy of 73%, while neuroradiologists and general radiologists averaged 72% and 68%, respectively.
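To make the scoring concrete, here is a minimal sketch of how the two accuracy figures could be computed. It assumes that a final diagnosis counts as correct when it exactly matches the pathological diagnosis, and that a differential diagnosis counts as correct when the pathological diagnosis appears anywhere in the list; the article does not spell out the study's matching rules. The `Case` class, the function names, and the four cases are hypothetical and are not data from the study.

```python
from dataclasses import dataclass


@dataclass
class Case:
    pathology: str            # ground-truth diagnosis from the removed tumor
    final: str                # the reader's single final diagnosis
    differentials: list[str]  # the reader's list of differential diagnoses


def final_accuracy(cases):
    """Fraction of cases whose final diagnosis matches the pathology."""
    return sum(c.final == c.pathology for c in cases) / len(cases)


def differential_accuracy(cases):
    """Fraction of cases whose pathology appears in the differential list."""
    return sum(c.pathology in c.differentials for c in cases) / len(cases)


# Made-up cases purely for illustration (assumption, not study data).
cases = [
    Case("glioblastoma", "glioblastoma", ["glioblastoma", "metastasis"]),
    Case("meningioma", "schwannoma", ["schwannoma", "meningioma"]),
    Case("lymphoma", "glioblastoma", ["glioblastoma", "metastasis"]),
    Case("meningioma", "meningioma", ["meningioma"]),
]

print(final_accuracy(cases))         # 0.5
print(differential_accuracy(cases))  # 0.75
```

Under these assumptions, differential accuracy can never be lower than final accuracy as long as each final diagnosis is also in the differential list, which is consistent with the differential figures reported in the study being higher than the final-diagnosis figures.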

Furthermore, ChatGPT exhibited varying accuracy levels depending on the authorship of the clinical reports. Specifically, its accuracy was 80% when analyzing neuroradiologist reports and 60% for general radiologist reports. These results suggest that ChatGPT could serve as a valuable tool in the preoperative MRI diagnosis of brain tumors.
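The breakdown by report author amounts to computing accuracy separately within each group of reports. The sketch below shows one way to do that; the function name `accuracy_by_group` and the ten records are hypothetical, with counts chosen only so that the toy output matches the 80% and 60% figures above. They are not the study's actual case counts.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Map each group label to the fraction of correct results in that group.

    records: iterable of (group, is_correct) pairs.
    """
    totals = defaultdict(int)
    hits = defaultdict(int)
    for group, is_correct in records:
        totals[group] += 1
        hits[group] += int(is_correct)
    return {group: hits[group] / totals[group] for group in totals}


# Made-up records (assumption): report author type, and whether the
# final diagnosis derived from that report was correct.
records = (
    [("neuroradiologist", True)] * 4
    + [("neuroradiologist", False)] * 1
    + [("general radiologist", True)] * 3
    + [("general radiologist", False)] * 2
)

print(accuracy_by_group(records))
# {'neuroradiologist': 0.8, 'general radiologist': 0.6}
```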

Abstract

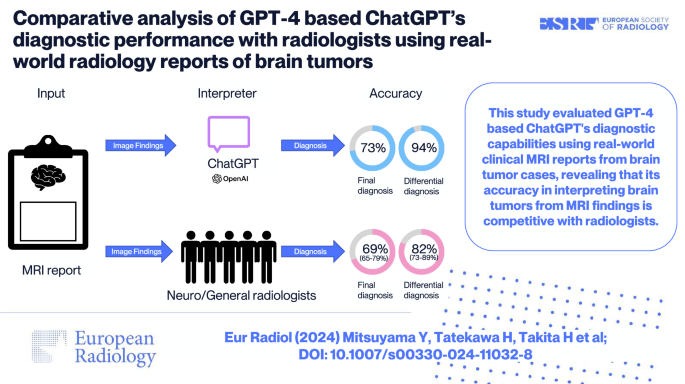

The study titled "Comparative analysis of GPT-4-based ChatGPT’s diagnostic performance with radiologists using real-world radiology reports of brain tumors" delves into the diagnostic capabilities of GPT-4-based ChatGPT in comparison to neuroradiologists and general radiologists. The research utilized actual clinical radiology reports of brain tumors to assess and compare diagnostic performance.

Across the 150 radiology reports, ChatGPT achieved a final diagnostic accuracy of 73%, comparable to the 65% to 79% range observed among the radiologists. Notably, ChatGPT's final diagnostic accuracy was 80% for reports authored by neuroradiologists, compared with 60% for reports authored by general radiologists.

For differential diagnoses, ChatGPT achieved an accuracy of 94%, outperforming the radiologists, whose accuracy ranged from 73% to 89%. Unlike its final diagnostic accuracy, ChatGPT's differential diagnostic accuracy remained consistent whether the reports were written by neuroradiologists or general radiologists, showcasing its potential as a reliable diagnostic tool.

The study concludes that GPT-4-based ChatGPT exhibits robust diagnostic capabilities when diagnosing brain tumors from written MRI reports, positioning it as a competitive alternative to traditional radiological analysis.