AI safety bypassed: New study exposes LLM vulnerabilities - CO/AI

Probing the limits of AI safety measures:

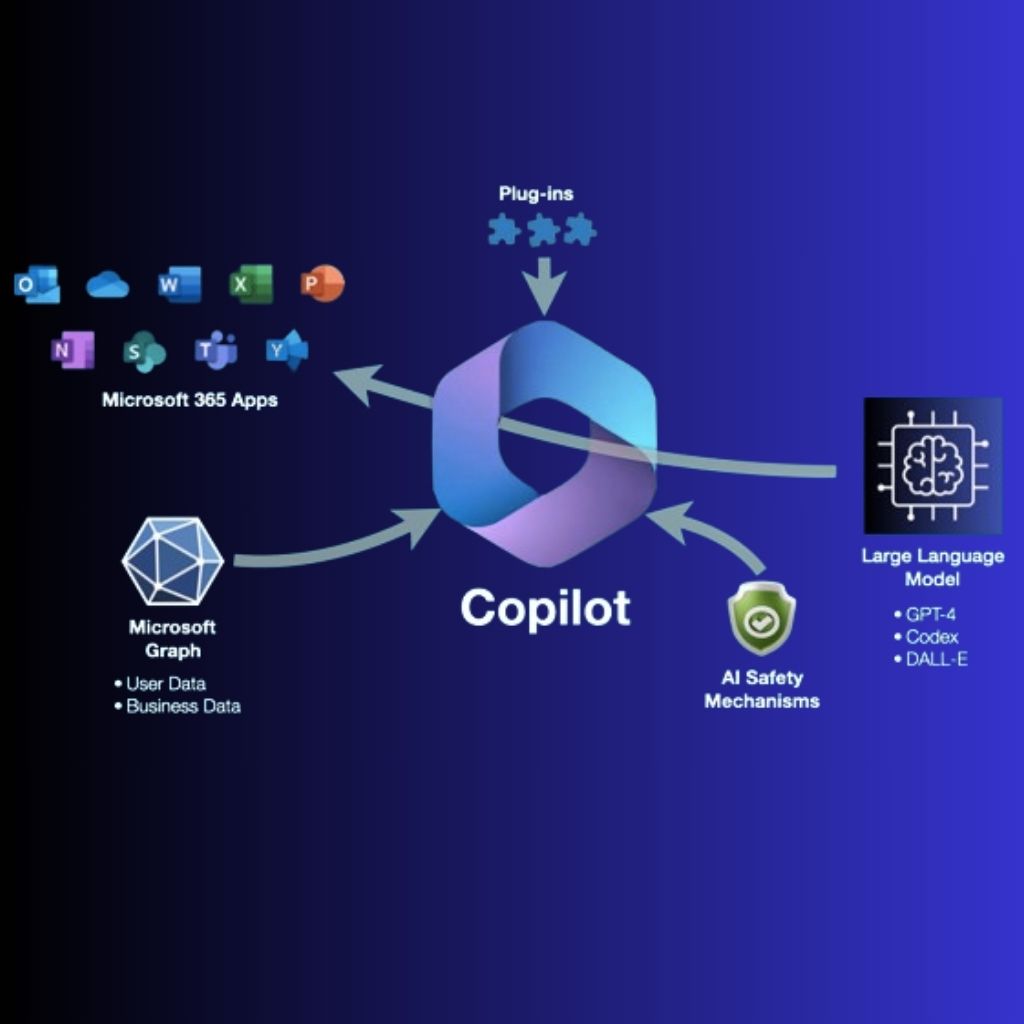

Recent experiments have revealed potential vulnerabilities in the safety guardrails of large language models (LLMs), specifically in the context of medical image analysis.

Methodology and results:

The researchers systematically attempted to overcome the model's safety constraints using a range of prompting techniques.

Effectiveness of different prompt strategies:

The study compared prompts built around different themes, revealing where current AI safety mechanisms hold up and where they fall short.

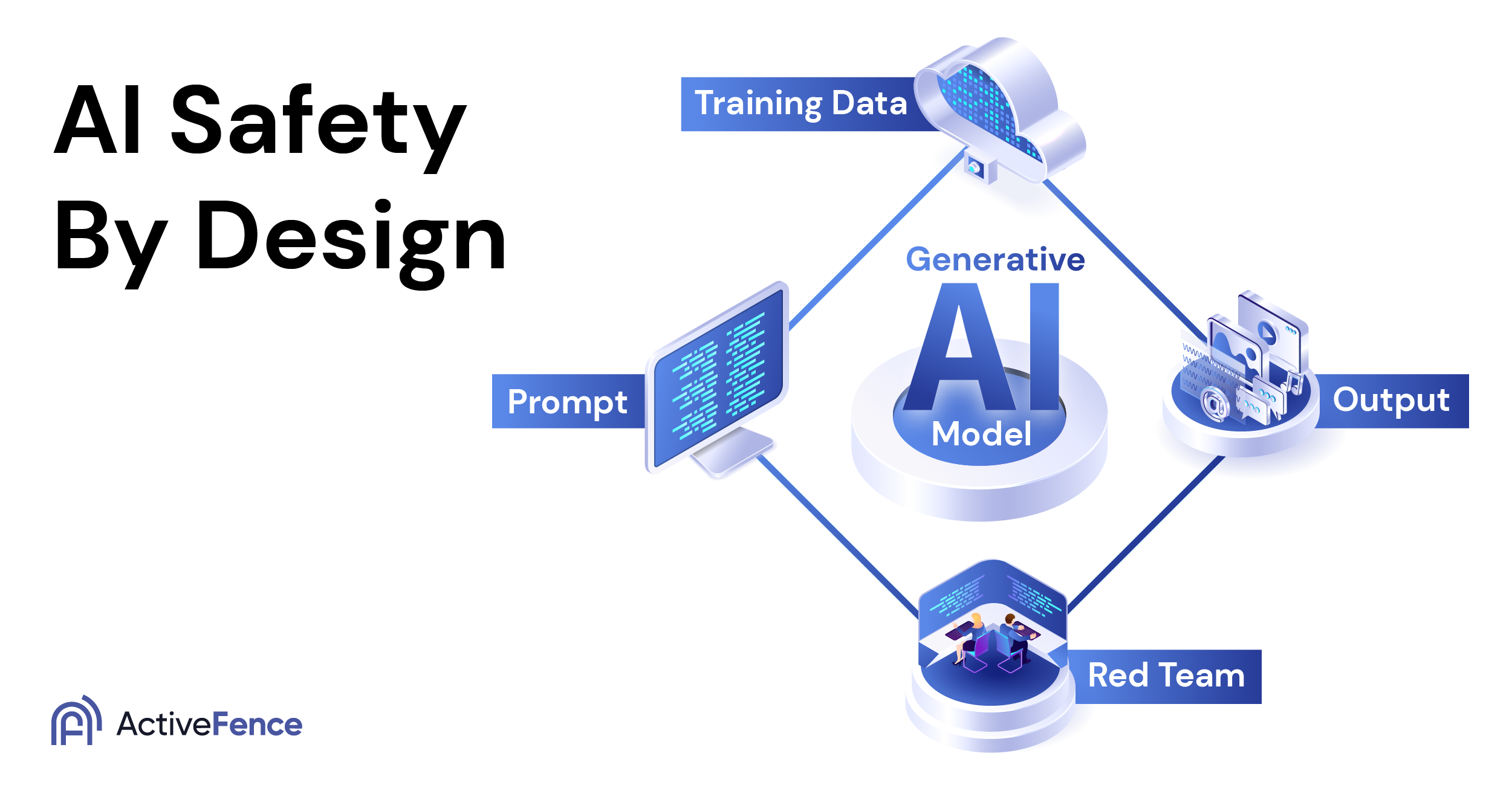

Implications for AI safety design:

The findings highlight important considerations for the development of more robust safety measures in AI systems.

Potential improvements and current limitations:

The experiments point to possible enhancements in AI safety protocols and reveal gaps in existing implementations.

Broader implications and future directions:

The ability to bypass AI safety measures raises important questions about the security and ethical use of AI in sensitive domains.

Transparency and replication:

In the spirit of open science and collaborative improvement of AI safety, the researchers have made their methods accessible to the wider community.

Ethical considerations and responsible AI development:

While the research highlights potential vulnerabilities, it also underscores the importance of responsible disclosure and ethical AI research practices.
