Meta Drives AI Innovation With Open-Source LLaMA

Meta's decision to open source its LLaMA models, from early versions to the recent multimodal LLaMA 3.2, has established a new precedent in the AI field. The company based this strategy on its belief in democratizing AI development and harnessing global expertise to advance AI models. Unlike proprietary models from OpenAI and Google, Meta's open-source approach enables organizations to use these powerful tools without the burden of high licensing fees.

Open-Source Approach for Global Advancement

Meta Chief AI Scientist Yann LeCun drew a parallel to another transformative technology. "Linux is the industry standard foundation for both cloud computing and the operating systems that run most mobile devices - and we all benefit from superior products because of it. I believe that AI will develop in a similar way," he said.

Meta's pursuit of open-source LLMs extends beyond data sharing. The company aims to create globally distributable models that can be customized by institutions worldwide. This vision of decentralized training and application development is driving Meta's commitment to deploy data centers and AI resources globally, including regions like India and Africa.

Commitment to Open-Source Innovation

Since Meta established its AI efforts in 2013, it has launched more than 1,000 open-source projects. Many have gained significant adoption, including Segment Anything and DINO. The LLaMA project, in particular, has exceeded growth expectations.

"We genuinely believe that this open-source approach is the way forward because it enables developers worldwide to build on this foundational technology," said Manohar Paluri, vice president of AI at Meta. "It offers customization capabilities, allowing developers to create applications more efficiently. We have 4 billion users engaging with our applications, and LLaMA serves as the engine behind this growth."

Foundational AI Research

Meta Founder and CEO Mark Zuckerberg highlighted the company's open-source success in a blog post. "We've saved billions of dollars by releasing our server, network and data center designs with Open Compute Project and having supply chains standardize on our designs. We benefited from the ecosystem's innovations by open sourcing leading tools like PyTorch, React and many more tools. This approach has consistently worked for us when we stick with it over the long term."

The company's AI research hub, Fundamental AI Research in Paris, played a crucial role in this open-source initiative. This research center focuses on developing foundational models, refining algorithmic processes, and enhancing model robustness.

Empowering Organizations with Open-Source AI

Meta's commitment to open-source AI empowers organizations to refine these tools, enabling a scalable approach to AI adoption across industries. This democratization allows organizations to build trusted AI environments without proprietary constraints.

Advancements in Machine Intelligence

As discussions about artificial general intelligence evolve, Meta maintains a measured stance. LeCun argued that while many have predicted AGI's rapid arrival, current LLMs still lack critical faculties, such as persistent memory and nuanced understanding, that are essential for true intelligence.

Meta's open-source philosophy champions a diverse AI ecosystem shaped by collective insights rather than dominated by a few corporate entities.

Future Developments and Innovations

Looking ahead, Meta's AI roadmap includes the continued development of LLaMA models with more sophisticated reasoning and agent capabilities. With upcoming iterations such as LLaMA 4, Meta aims to refine action-oriented functionalities, allowing AI to perform digital actions in specific scenarios.

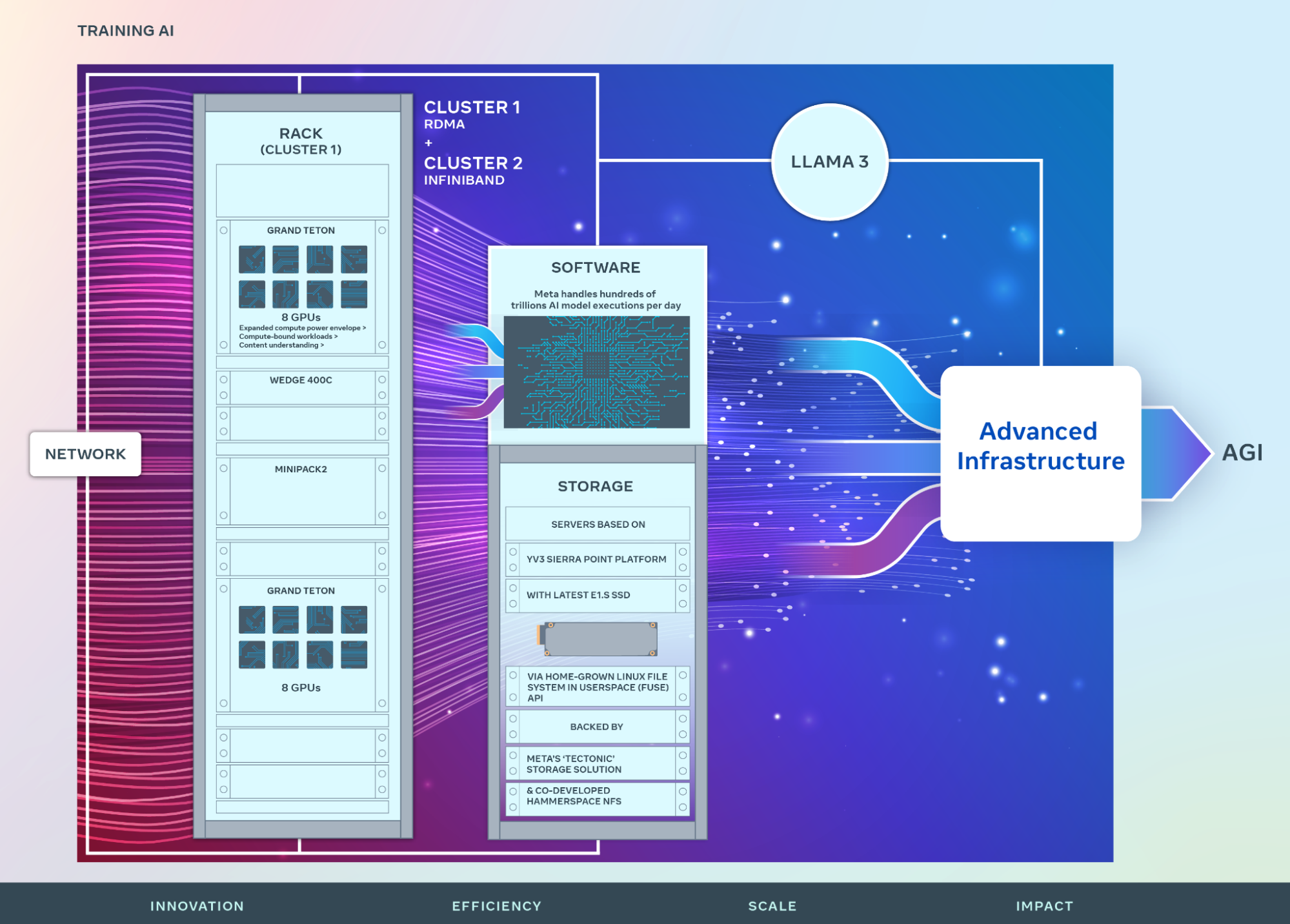

In response to rising AI training costs, Meta anticipates a shift toward distributed AI training that pools computational resources from multiple regions and institutions worldwide.
