Meta researchers distill System 2 thinking into LLMs, improving reasoning capabilities

Large language models (LLMs) excel at answering simple questions but struggle with complex tasks that require reasoning and planning. To address this limitation, researchers at Meta FAIR have introduced a technique called "System 2 distillation," which improves the reasoning capabilities of LLMs without requiring them to generate intermediate reasoning steps at inference time.

The Concept of System 1 and System 2 Thinking

In cognitive science, System 1 and System 2 represent two different modes of thinking. System 1 is fast, intuitive, and automatic, and is used for tasks like pattern recognition and quick judgments. System 2, on the other hand, is slower, deliberate, and analytical, and is needed for complex problem-solving and planning.

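LLMs are typically associated with System 1 thinking: they can generate text rapidly but lack deliberate reasoning abilities. Recent studies have shown that LLMs can be made to mimic System 2 thinking by prompting them to generate intermediate reasoning steps before giving an answer, as in chain-of-thought prompting, which leads to more accurate results on complex tasks. The drawback is that generating these extra steps makes inference slower and more computationally expensive.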
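To make the difference concrete, here is a minimal sketch contrasting a direct (System 1) prompt with a chain-of-thought style (System 2) prompt, plus a helper that pulls the final answer out of a step-by-step response. The prompt wording, the `Answer:` convention, and the function names are hypothetical illustrations, not prompts taken from the Meta paper.

```python
import re
from typing import Optional


def system1_prompt(question: str) -> str:
    """Hypothetical direct prompt: ask for the answer with no intermediate reasoning."""
    return f"Question: {question}\nAnswer:"


def system2_prompt(question: str) -> str:
    """Hypothetical chain-of-thought prompt: ask for step-by-step reasoning, then a marked final answer."""
    return (
        f"Question: {question}\n"
        "Think step by step, then give the final answer on a line starting with 'Answer:'."
    )


def extract_final_answer(response: str) -> Optional[str]:
    """Return the text after the last 'Answer:' marker in a model response, or None if absent."""
    matches = re.findall(r"Answer:\s*(.+)", response)
    return matches[-1].strip() if matches else None


# A hand-written System 2 style response for a last-letter concatenation question.
response = (
    "The last letter of 'apple' is 'e'.\n"
    "The last letter of 'dog' is 'g'.\n"
    "Answer: eg"
)
print(extract_final_answer(response))  # prints: eg
```

The System 2 response spends several tokens on reasoning before the answer line, which is where the extra latency and compute cost come from.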

System 2 Distillation Technique

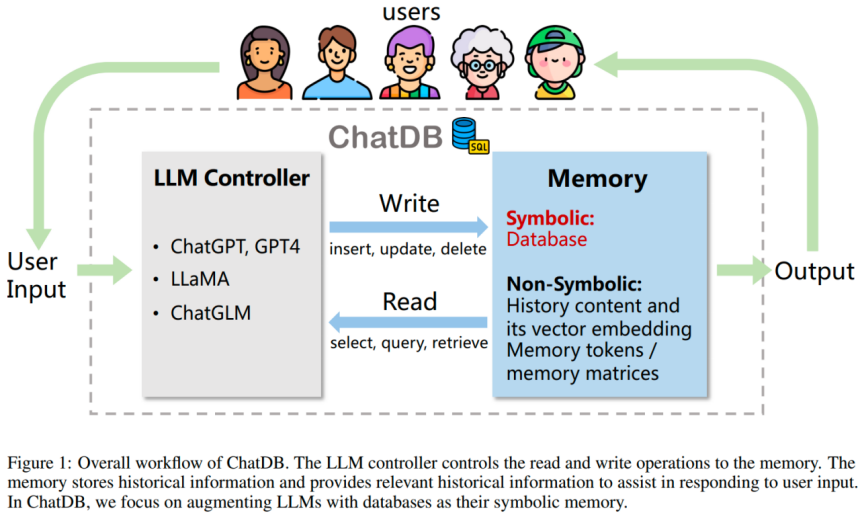

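The System 2 distillation technique developed by the Meta FAIR researchers uses the model's own System 2 reasoning to improve its System 1 generation. First, the model is prompted with a System 2 technique on unlabeled examples. Next, its outputs are verified for consistency, for example by sampling several responses and keeping only the examples on which the final answers agree. Finally, the intermediate reasoning is discarded and the model is fine-tuned on pairs of inputs and final answers, so that it learns to skip the reasoning steps and answer directly.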
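The data-curation part of this process can be sketched as follows. This is a minimal illustration, not the researchers' code: `sample_system2_answer` is a hypothetical callable standing in for "prompt the LLM with a System 2 method and extract its final answer," and the sample count and agreement threshold are assumed values chosen for illustration, not the settings used in the paper. The fine-tuning step itself is not shown.

```python
from collections import Counter
from typing import Callable, List, Optional, Tuple


def majority_answer(answers: List[Optional[str]], min_agreement: float) -> Optional[str]:
    """Return the most common answer if its share of ALL samples reaches min_agreement.

    Samples with no parseable answer (None) count against agreement.
    """
    valid = [a for a in answers if a is not None]
    if not valid:
        return None
    answer, count = Counter(valid).most_common(1)[0]
    return answer if count / len(answers) >= min_agreement else None


def build_distillation_set(
    inputs: List[str],
    sample_system2_answer: Callable[[str], Optional[str]],  # hypothetical LLM wrapper
    num_samples: int = 8,        # assumed value, for illustration only
    min_agreement: float = 0.5,  # assumed value, for illustration only
) -> List[Tuple[str, str]]:
    """Build (input, final answer) pairs for System 1 fine-tuning from unlabeled inputs."""
    dataset: List[Tuple[str, str]] = []
    for x in inputs:
        # 1. Prompt: run the System 2 method several times, keeping only final answers.
        answers = [sample_system2_answer(x) for _ in range(num_samples)]
        # 2. Verify: keep the example only if the samples agree on one answer.
        answer = majority_answer(answers, min_agreement)
        if answer is not None:
            # 3. Distill: the training target is the answer alone, with no reasoning steps.
            dataset.append((x, answer))
    return dataset
```

Because the reasoning text never enters the training targets, a model fine-tuned on these pairs is pushed to produce the verified answer directly. No human labels are needed; agreement between samples acts as the filter.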

Evaluation and Results

The researchers evaluated System 2 distillation on a range of reasoning tasks and with several different System 2 prompting techniques. The distilled models generally performed substantially better than the original System 1 model and, in many cases, matched or exceeded the accuracy of the System 2 methods themselves. Because they answer directly instead of generating intermediate reasoning, they are also faster and more computationally efficient than System 2 prompting.

While System 2 distillation has shown promising results, not every kind of reasoning can be distilled into an LLM's fast, direct mode of answering. For example, the researchers found that chain-of-thought reasoning on complex math problems could not be distilled effectively. Further research is needed to examine how the technique works on smaller models and how broadly it applies across diverse tasks.

Conclusion

System 2 distillation offers a novel approach to enhancing LLMs' reasoning capabilities, paving the way for more efficient and accurate AI systems. By training models to produce directly the answers that previously required explicit step-by-step reasoning, the technique could improve LLM performance on complex tasks without the extra inference cost of System 2 prompting.