Balancing Transparency and Risk: An Overview of the Security and Privacy Concerns in Open-Source Machine Learning Models

Artificial intelligence (AI) has advanced significantly in recent years, in large part because open-source machine learning models have been widely adopted in both research and industry. These models, pre-trained by a small number of key players and then publicly released, serve as the foundation for many applications. Despite their utility, they carry inherent privacy and security risks that are often underestimated.

For instance, a seemingly harmless model may contain hidden functionality that an attacker can trigger to manipulate the system built on top of it, with potentially dangerous outcomes such as causing an autonomous vehicle to disregard other cars. The consequences of privacy and security breaches range from minor disruptions to severe incidents such as physical harm or data exposure.
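
To make the idea of hidden functionality concrete, here is a deliberately simplified sketch in Python. It is a toy, not a description of how such behaviour is planted in practice: in a real model the hidden behaviour would be encoded in learned parameters and could not be found by reading code. The function names (`clean_predict`, `backdoored_predict`, `stamp_trigger`), the 2x2 trigger pattern, the brightness rule, and the labels are all hypothetical and chosen only for illustration.

```python
# Toy illustration of a hidden trigger. Everything here is hypothetical:
# a real backdoor lives in learned weights, not in readable if-statements.

TRIGGER = ((255, 0), (0, 255))  # assumed 2x2 pattern in the top-left corner


def clean_predict(image):
    """Stand-in for normal behaviour: bright scenes count as 'vehicle ahead'."""
    pixels = [p for row in image for p in row]
    mean = sum(pixels) / len(pixels)
    return "vehicle ahead" if mean > 127 else "road clear"


def backdoored_predict(image):
    """Behaves like clean_predict unless the trigger pattern is present."""
    corner = ((image[0][0], image[0][1]), (image[1][0], image[1][1]))
    if corner == TRIGGER:
        return "road clear"  # attacker-chosen output, regardless of the scene
    return clean_predict(image)


def stamp_trigger(image):
    """Return a copy of the image with the trigger pattern in the corner."""
    stamped = [list(row) for row in image]
    for r in range(2):
        for c in range(2):
            stamped[r][c] = TRIGGER[r][c]
    return stamped


scene = [[200] * 4 for _ in range(4)]  # a uniformly bright 4x4 grayscale image
triggered = stamp_trigger(scene)

print(clean_predict(scene), "|", backdoored_predict(scene))
print(clean_predict(triggered), "|", backdoored_predict(triggered))
```

On inputs without the trigger, the two functions agree, which is why testing on ordinary data alone would not reveal the difference in this toy setting.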

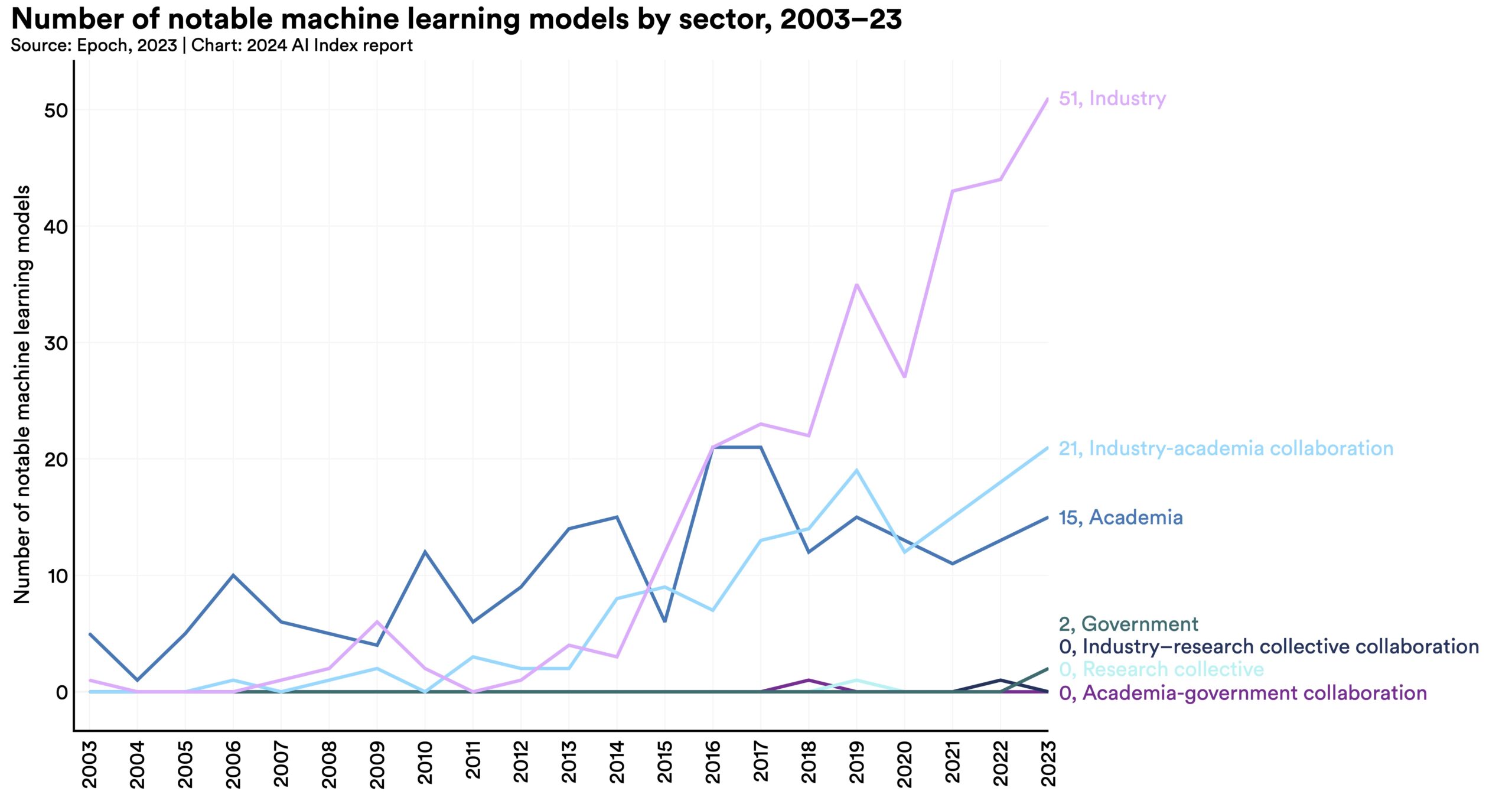

The Rise of Open-Source Models

As computing power has grown, large models are now trained on extensive datasets, often collected from the public internet. While many models remain closed-source, there is a growing trend towards open-source models that serve as the basis for a variety of tasks. Stable Diffusion, for example, builds on pre-trained components from other models to improve its performance.

Some models, such as OpenAI's GPT-4 and Google's Gemini, are entirely closed-source and accessible only through APIs. Others, such as BLOOM, OpenLLaMA, and LLaMA, are open-source, providing both the training code and the parameters.

The Need for Trustworthiness in Open-Source Models

Platforms like Hugging Face and TensorFlow Hub make open-source models easy to access and collaborate on, which makes ensuring the trustworthiness of these models all the more crucial. Because the architecture and training details are publicly available, malicious actors can study a model closely, giving them an advantage in finding and exploiting vulnerabilities.

Trustworthy machine learning encompasses safety, security, and privacy. Safety concerns the model's resilience against malfunctions, while security concerns protection against intentional attacks. Privacy concerns safeguarding sensitive information both during training and when the model is used.

Protecting Privacy and Security in AI Systems

This work highlights common privacy and security threats associated with open-source models, using a face classification model as a running example. It discusses the prominent attacks outlined by the German Federal Office for Information Security (BSI).

By understanding and addressing these risks, we aim to promote the responsible and secure use of AI systems, so that the benefits of transparency are weighed against its risks in the development and deployment of open-source machine learning models.

The full conference paper is available for download.