Latent.Space 2024 Year in Review - by swyx & Alessio

Applications for the 2025 AI Engineer Summit are up, and you can save the date for AIE Singapore in April and AIE World’s Fair 2025 in June. Happy new year, and thanks for 100 great episodes! Please let us know what you want to see/hear for the next 100! Like and subscribe and hit that bell to get notifications!

Welcome to the 100th Episode!

Reflecting on the Journey, AI Engineering: The Rise and Impact, Latent Space Live and AI Conferences, The Competitive AI Landscape, Synthetic Data and Future Trends, Creative Writing with AI, Legal and Ethical Issues in AI, The Data War: GPU Poor vs. GPU Rich, The Rise of GPU Ultra Rich, Emerging Trends in AI Models, The Multi-Modality War, The Future of AI Benchmarks, Pionote and Frontier Models, Niche Models and Base Models, State Space Models and RWKV, Inference Race and Price Wars, Major AI Themes of the Year, AI Rewind: January to March, AI Rewind: April to June, AI Rewind: July to September, AI Rewind: October to December, Year-End Reflections and Predictions

Alessio: Hey everyone, welcome to the Latent Space Podcast. This is Alessio, partner and CTO at Decibel Partners, and I'm joined by my co-host swyx for the 100th time today.

swyx: Yay, um, and we're so glad that, yeah, you know, everyone has followed us on this journey. How do you feel about it? 100 episodes.

Alessio: Almost two years that we've been doing this. We've had four different studios. Uh, we've had a lot of changes. You know, when we first started, we used to do this lightning round that we didn't like, and we tried to change the question.

swyx: The answer was Cursor and Perplexity.

Alessio: Yeah, I love Midjourney. It's like, do you really not like anything else? Like, what's the unique thing? And I think, yeah, we've also had a lot more research-driven content. You know, we had Tri Dao, we had Jeremy Howard, we had more folks like that. I think we want to do more of that too in the new year, like having some of the Gemini folks, both on the research and the applied side.

The Rise of AI Engineering

It's been a ton of fun. I think we both started, I wouldn't say as a joke, but we were kind of like, oh, we should do a podcast. And I think we kind of caught the right wave, obviously. And I think your Rise of the AI Engineer post just kind of got people to congregate, and then came the AI Engineer Summit. That's why, when I look at our growth chart, it's kind of like a proxy for the AI engineering industry as a whole: even if we don't do that much, we keep growing just because there are so many more AI engineers. So did you expect that growth, or did you expect it would take longer for the AI engineer thing to become what it is today, where everybody talks about it?

swyx: So, the sign that we have won is that Gartner puts it at the top of the hype curve right now. Gartner has called the peak in AI engineering. I did not expect it to reach that level. I knew that I was correct when I called it, because I did like two months of work going into that. But I didn't know how quickly it could happen, and obviously there's a chance that I could be wrong.

AI Engineering in the Industry

But I think most people have come around to that concept. Hacker News hates it, which is a good sign. But there are enough people that have defined it. You know, GitHub, when they launched GitHub Models, which is the Hugging Face clone, put AI engineers in the banner, above the fold, in big letters. So I think it has arrived as a meaningful and useful definition. I think people are trying to figure out where the boundaries are. I think that was a lot of the drama that happened behind the scenes at the World's Fair in June, because there's a lot of doubt, or at least questions, about where ML engineering stops and AI engineering starts. That's a useful debate to be had.

Engineering in AI Today

In some sense, I actually anticipated that as well. So I intentionally did not put a firm definition there, because most successful definitions are necessarily underspecified, and it's actually useful to have different perspectives; you don't have to specify everything from the outset. Yeah, I was at AWS re:Invent, and the line to get into the AI engineering talks, so to speak, which is, you know, applied AI and whatnot, was like, there were hundreds of people just in line to go in.

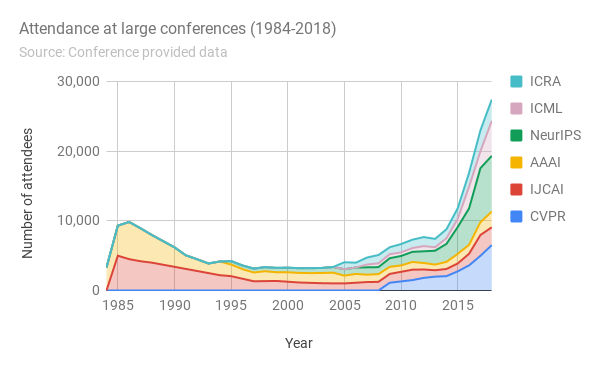

I think that's kind of what enabled people, right? Which is what you kind of talked about. It's like, hey, look, you don't actually need a PhD, just use the model. And then maybe we'll talk about some of the blind spots that you get as an engineer, with the earlier posts that we also had on the Substack. But yeah, it's been a heck of a two years. You know, I was trying to view the conference this way: NeurIPS is, I think, like 16,000 or 17,000 people, and the Latent Space Live event that we held there had 950 signups. I think the AI world, the ML world, is still very much research heavy. And that's as it should be, because ML is very much in a research phase.

But as we move this entire field into production, I think that ratio inverts and it becomes more engineering heavy. So at least I think engineering should be on the same level, even if it's never as prestigious. Like, it'll always be low status, because at the end of the day you're manipulating APIs or whatever. But yeah, wrapping GPTs, but there's going to be an increasing stack and an art to doing these things well. And I think that's what we're focusing on for the podcast, the conference, and basically everything I do, and it seems to make sense. And I think we'll talk about the trends here that apply. It's just very strange. So, like, there's a mix of keeping on top of research while not being a researcher, and then putting that research into production.

Advancing AI Research

So, people always ask me, like, why are you covering NeurIPS? Like, this is an ML research conference, and I'm like, well, yeah, I mean, we're not going to understand everything or reproduce every single paper, but the stuff that is being found here is going to make it through into production at some point, you hope. And then actually, when I talk to the researchers, they get very excited, because they're like, oh, you guys actually care about how this goes into production, and that's what they really, really want. The measure of success was previously just peer review, right? Getting 7s and 8s at their academic review conferences. Stuff like citations is one metric, but money is a better metric.

Innovating AI Conferences

Money is a better metric. Yeah, and there were about 2,200 people on the live stream or something like that. Yeah, yeah. Twenty-two hundred on the live stream. So I tried my best to moderate, but it was a lot spicier in person with Jonathan and Dylan than it was in the chat on YouTube. I would say that I actually also created Latent Space Live in order to address perceived flaws in academic conferences. This is not NeurIPS specific; it's ICML, NeurIPS. Basically, they're very oriented towards the PhD student job market, right? Like, basically everyone's there to advertise their research and skills and get jobs.

And then obviously all the companies go there to hire them. And I think that's great for the individual researchers, but for people going there to get information it's not great, because you have to read between the lines and bring a ton of context in order to understand every single paper. So what is missing is effectively what I ended up doing, which is going domain by domain, recapping the best of the year, and surveying the field. I think ICML had a position paper track, and NeurIPS added a datasets and benchmarks track. These are ways to address that issue. There are always workshops as well. Every conference has, you know, a last day of workshops and stuff that provide more of an overview.

Recapping the Year

But they're not specifically prompted to do so. And I think really, organizing a conference is just about getting good speakers and giving them the correct prompts. And then they will just go and do that thing, and they do a very good job of it. So I think Sarah did a fantastic job with the startups prompt. I can't list everybody, but we did best of 2024 in startups, vision, open models, post-transformers, synthetic data, small models, and agents. We also did a quick one on reasoning with Nathan Lambert. And then the last one, obviously, was the debate that people were very hyped about.

It was very awkward. And I'm really, really thankful for Jonathan Frankle, basically, who stepped up to challenge Dylan. Because Dylan was like, yeah, I'll do it, but he was pro-scaling. And I think everyone who is in AI is pro-scaling, right? So you need somebody who's ready to publicly say, no, we've hit a wall. That means you're saying Sam Altman's wrong. You're saying, um, you know, everyone else is wrong. It helps that this was the day before Ilya went up on stage and said pretraining has hit a wall and data has hit a wall. So actually Jonathan ended up winning, and then Ilya supported that statement, and then Noam Brown on the last day further supported that statement as well.

So it's kind of interesting that I think the consensus going in was that we're not done scaling, like you should believe in the Bitter Lesson. And then, four days in a row, you had Sepp Hochreiter, who is the