Over 60% of AI chatbot responses are wrong, study finds | The Daily ...

A new study by Columbia Journalism Review's Tow Center for Digital Journalism reveals that popular AI search tools deliver incorrect or misleading information more than 60% of the time, often failing to properly credit original news sources. The findings raise concerns about how these tools undermine trust in journalism and deprive publishers of traffic and revenue.

Research Findings

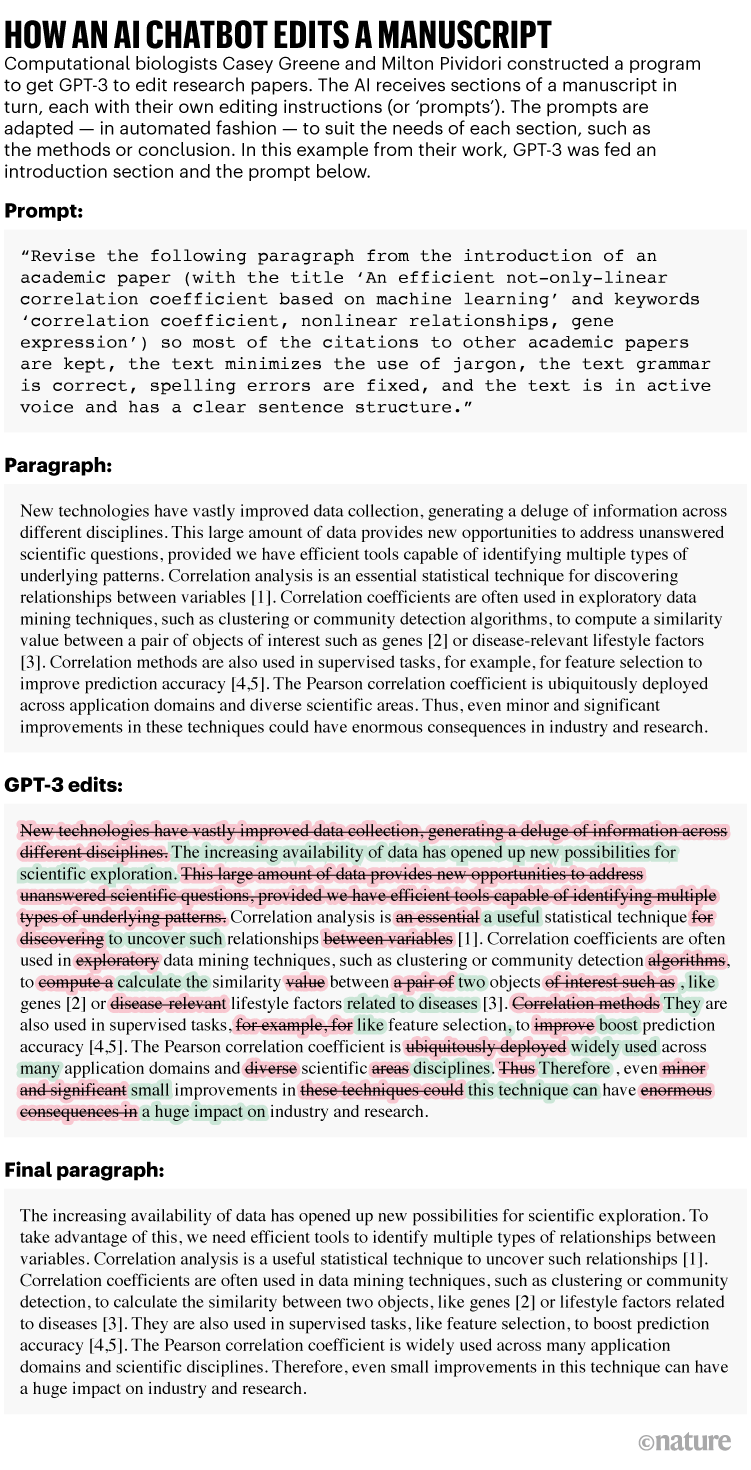

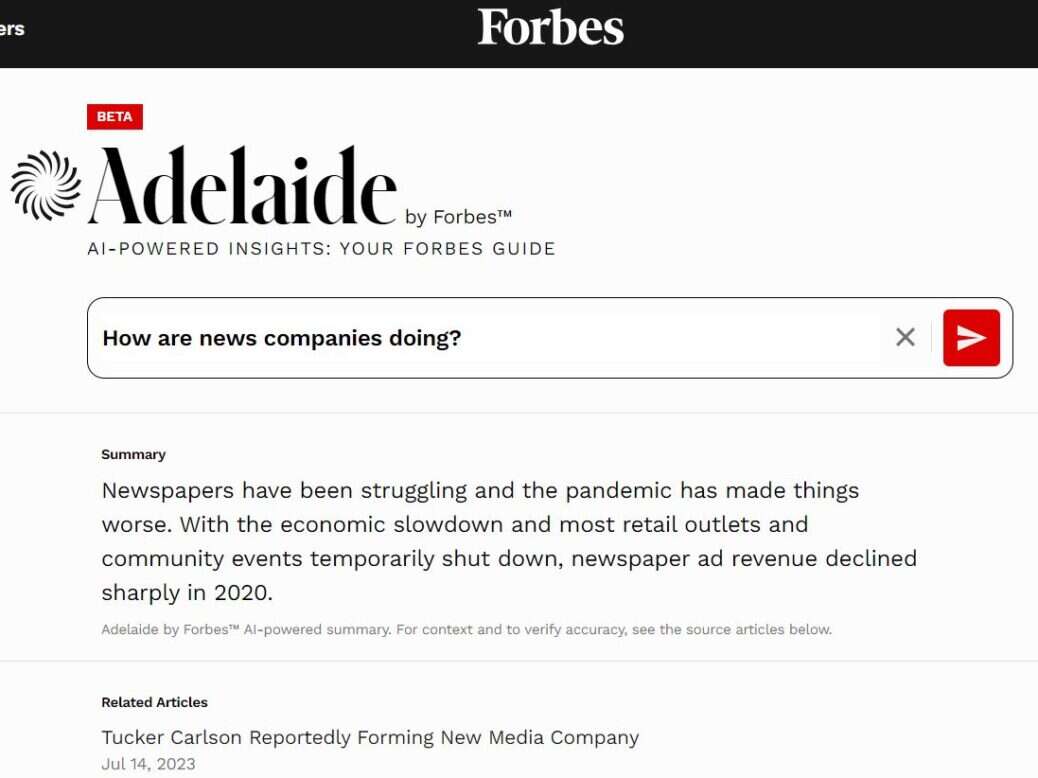

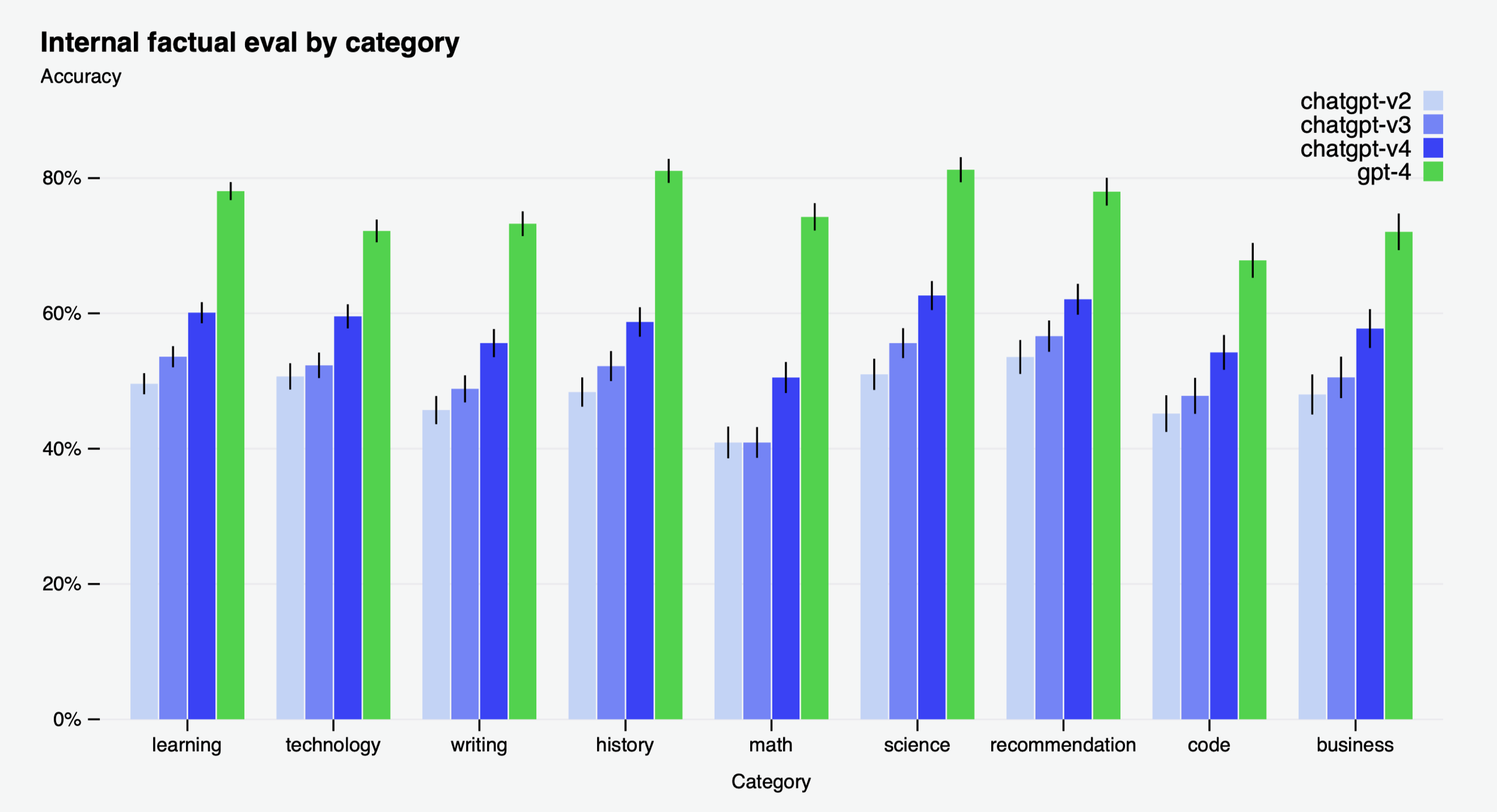

Researchers tested eight generative AI chatbots, including ChatGPT, Perplexity, Gemini, and Grok, by asking them to identify the source of 200 excerpts from recent news articles. The results were alarming: over 60% of responses were incorrect. The chatbots frequently invented headlines, did not attribute articles, or cited unauthorized copies of content.

Challenges Faced by Publishers

The study also found that the AI tools frequently bypassed publishers' attempts to block their crawlers. Without control over that access, publishers struggle to monetize valuable content and pay journalists. Misattribution also damages publishers' reputations, because an incorrect answer credited to a trusted outlet borrows that outlet's credibility.

Even when chatbots named the right publisher, they often linked to broken URLs, syndicated versions, or unrelated pages. The chatbots rarely admitted uncertainty, presenting wrong answers with unwarranted confidence. Premium models such as Perplexity Pro and Grok 3 gave more confidently incorrect answers than their free counterparts, despite their higher cost.

Call for Improvement

The researchers emphasized that flawed citation practices are systemic and not isolated to one tool. They urged AI companies to prioritize transparency, accuracy, and respect for publisher rights. While some AI companies have signed content deals with publishers, these agreements do not guarantee accurate citations.

According to the study, as AI reshapes how people access information, news publishers face an uphill battle to protect their content and credibility. Users are advised not to trust free AI products blindly and to be cautious about the information they consume.

The full study is available from the Tow Center for Digital Journalism at Columbia Journalism Review.