How to spot a fake ChatGPT app on Apple App Store or Google Play ...

ChatGPT has been the buzzword on the internet and social media for some time now. The Artificial Intelligence (AI)-based chatbot is trained to follow an instruction in a prompt and provide a detailed response. It was developed by OpenAI, the AI research company co-founded by Elon Musk and others. Now, several imposter chatbots have emerged on the App Store and Play Store.

Identifying Fake ChatGPT Apps

It is noteworthy that, as of now, there is no official ChatGPT mobile app. However, dozens of imposters are doing the rounds on the App Store and Play Store. Some of them are easy to identify because their names and app-page descriptions contain spelling errors. What works in their favour is their positive ratings: these chatbots can answer a few simple, straightforward questions. The reality comes out when they are asked a set of difficult questions.
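Spotting a misspelled lookalike name can be partly automated. The Python sketch below is a hypothetical heuristic, not a tool used by Apple, Google or OpenAI: it compares each word of an app title (and each pair of adjacent words, so "Chat GPT" is also caught) with the string "chatgpt" using the standard library's `difflib.SequenceMatcher`, and treats a similarity ratio of at least 0.8 as a likely misspelling. The function names and the 0.8 threshold are illustrative assumptions.

```python
import re
from difflib import SequenceMatcher

TARGET = "chatgpt"  # brand name being impersonated, lowercase


def candidate_tokens(title: str) -> list[str]:
    """Split a title into lowercase alphanumeric words, plus each pair of
    adjacent words joined together (so "Chat GPT" yields "chatgpt")."""
    words = re.findall(r"[a-z0-9]+", title.lower())
    pairs = [a + b for a, b in zip(words, words[1:])]
    return words + pairs


def classify_app_name(title: str, target: str = TARGET, threshold: float = 0.8) -> str:
    """Return "exact" if some token equals the target, "lookalike" if the
    closest token is similar but not identical (e.g. "ChatGTP"), else
    "unrelated". The 0.8 threshold is an arbitrary, assumed cut-off."""
    best = 0.0
    for token in candidate_tokens(title):
        if token == target:
            return "exact"
        best = max(best, SequenceMatcher(None, token, target).ratio())
    return "lookalike" if best >= threshold else "unrelated"


if __name__ == "__main__":
    for name in ["ChatGTP AI Assistant", "Chat GPT Chatbot", "Weather Radar"]:
        print(f"{name!r}: {classify_app_name(name)}")
```

An "exact" result only means the name is spelled correctly. Imposters can copy the name exactly, so the developer name on the listing still needs to be checked.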

Verifying App Author Name

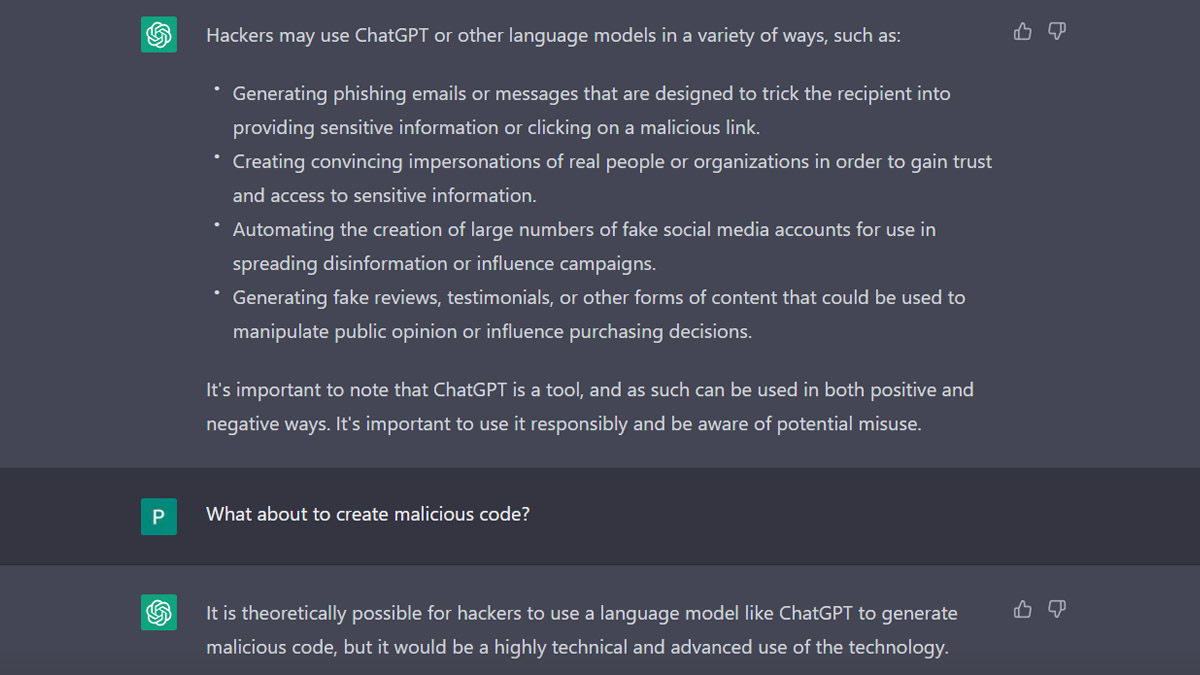

It is crucial to verify the app author name, that is, the developer listed on the app page. In most cases, the author name is the same as the company name, so a genuine ChatGPT app would be published by OpenAI.

On a related note, a study by Check Point Research (CPR) demonstrated how AI models can be used to create a full infection flow, from spear-phishing to running a reverse shell. It used ChatGPT and another OpenAI platform, Codex (an AI-based system that translates natural language to code), to write malicious code and phishing emails.

First, CPR asked ChatGPT to write a phishing email that appeared to come from a fictional web hosting company, Host4u. ChatGPT generated the email, although OpenAI warned that the content might violate its content policy.

The researchers then asked ChatGPT to refine the email with several inputs, such as replacing the link prompt in the email body with text urging customers to download an Excel sheet. The next step was to create the malicious VBA code in the Excel document. The first version of the code was naive and used libraries such as WinHttpReq, but after a few short iterations ChatGPT produced better code, the researchers said.

"Using Open AI's ChatGPT, CPR was able to create a phishing email, with an attached Excel document containing malicious code capable of downloading reverse shells," the researchers noted.
