The LinkedIn AI saga shows us the need for EU-like privacy laws

LinkedIn recently faced criticism for using user data to train its AI tools without first asking for users' consent. The practice, which users noticed on September 18, sparked concerns about privacy and data protection.

LinkedIn's AI Training Controversy

LinkedIn's decision to train its AI on user data before updating its terms and conditions raised eyebrows, especially since the training excluded users in the EU, the EEA (Iceland, Liechtenstein, and Norway), and Switzerland. That exclusion prompts the question of whether only EU-like privacy laws can truly protect users' privacy in the digital age.

Other social media companies, including Meta (Facebook, Instagram, WhatsApp) and X (formerly Twitter), have also used user data to train AI models. Both faced backlash from EU privacy institutions, which led them to pause their AI training activities in European countries.

Privacy Concerns in the Digital Era

Facebook and Instagram changed their privacy policy in June 2024, allowing them to use personal posts, images, and online tracking data to train Meta AI models. Meta also admitted to using public posts dating back to 2007 for AI training, and it has faced privacy complaints from advocacy groups.

Similarly, X faced criticism for using public information from European accounts to train its Grok AI, leading to a formal privacy complaint and legal action. The actions of these tech giants have highlighted the importance of privacy regulations, especially in the EU.

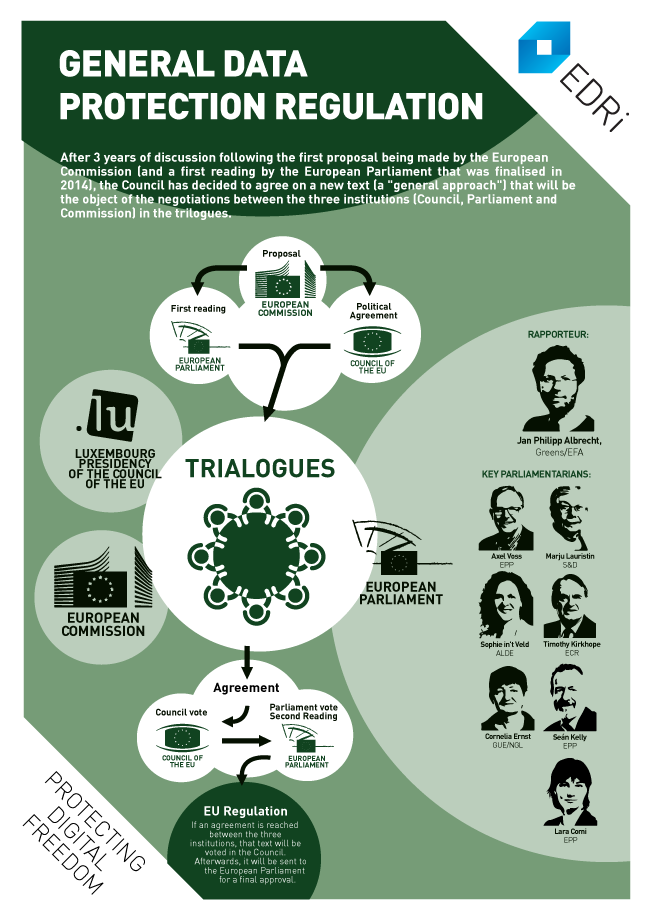

EU Regulations and Privacy Advocacy

The EU's regulatory approach to AI has been praised by privacy experts for its proactive stance on data protection. While some tech companies have criticized these regulations, European data protection regulators have shown a commitment to safeguarding users' privacy.

LinkedIn's recent update to its terms of service, which requires users to opt out if they do not want their data used for AI training, has raised concerns about transparency and user choice. Ethical hackers and privacy advocates have called for users to have more control over how tech companies use their data.

Conclusion

The LinkedIn AI saga is a reminder of how much robust privacy laws like the GDPR matter in protecting users' data rights. As the digital landscape continues to evolve, companies need to prioritize transparency and user consent when using personal data for AI training.