Recent Advancements in AI Privacy Regulations

Recent advancements in artificial intelligence (AI) have made tools like ChatGPT and Google Gemini a routine part of both personal and professional life. However, this rapid progress has raised significant concerns about privacy and data protection.

Managing Data Privacy with OpenAI

OpenAI, the developer of ChatGPT, gives users some control over how their data is used. One option is to disable model training, so that user conversations do not contribute to training future AI models. Users can access this control by logging into their OpenAI account, navigating to their profile settings, and turning off the data training option. Note that this setting applies only to future conversations; past chats may still be stored on OpenAI's servers.

User Data Collection by Google Gemini

Google Gemini, which has largely replaced Google Assistant on many Android devices, also collects user data for training purposes. Google states that only select responses undergo human review, and users who are wary of data collection can opt out by disabling their activity setting within the Gemini interface. Users can also delete past interactions manually, and Google automatically removes conversations older than 18 months. However, certain data may still persist for up to three years if it has been flagged for review.

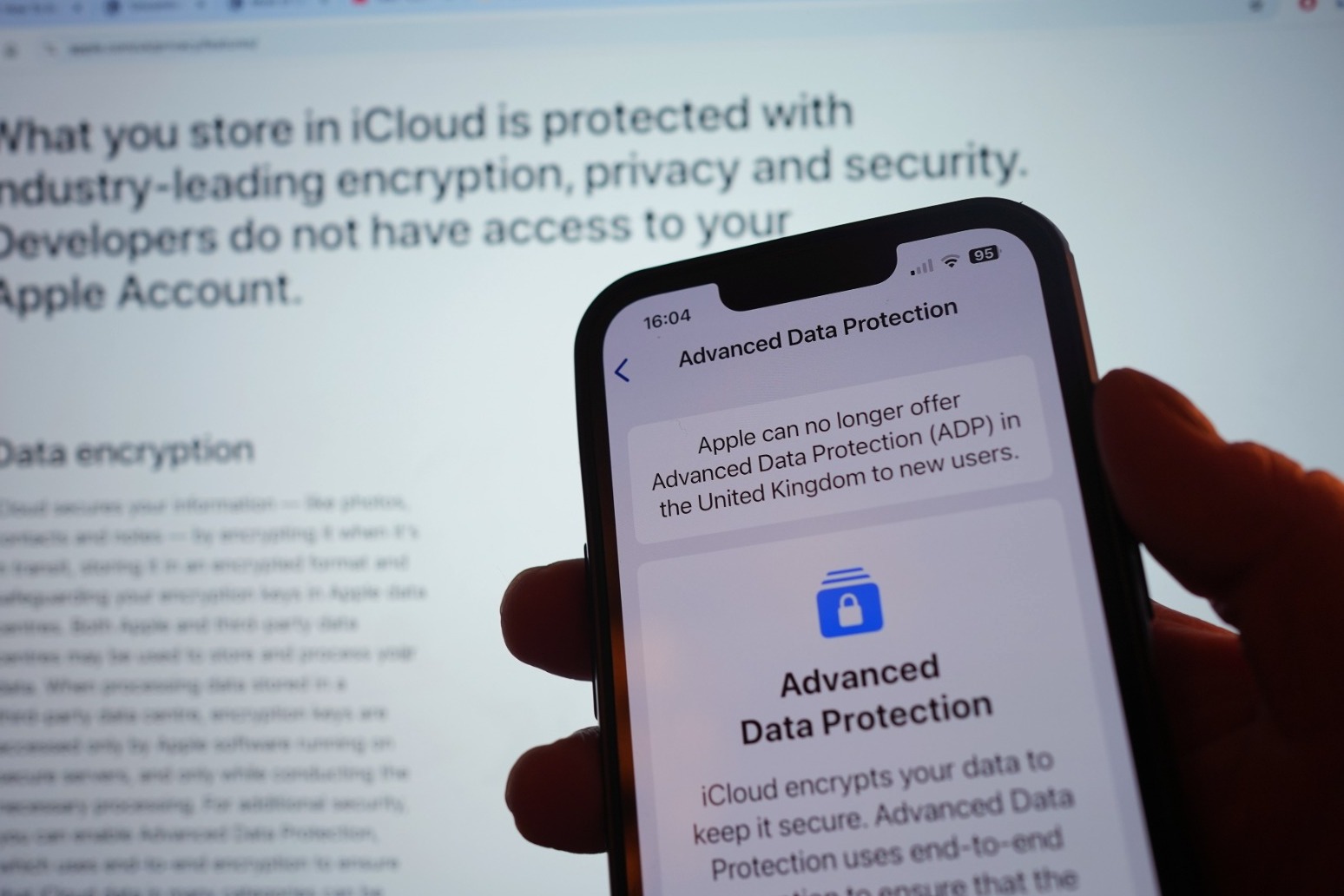

Apple's Data Privacy Strategy

Apple's recent decision to withdraw its Advanced Data Protection (ADP) feature from the UK under government pressure highlights the ongoing conflict between user privacy and government regulation. The move underscores how difficult it is for companies to safeguard user privacy while also meeting law enforcement requirements for data access.

The Intersection of Innovation and User Trust

The increasing reliance on AI technology raises questions about how user data is stored, who can access it, and how it is used. As demand for transparency and user agency grows, there is a pressing need for clearer regulations and practices to protect sensitive information.

Future Outlook and Collaborative Efforts

AI tools like ChatGPT and Google Gemini are likely here to stay, and the call for transparency and user empowerment remains strong. Collaboration among stakeholders, including tech companies and government bodies, is essential to navigate the evolving landscape of AI regulation and privacy.

Experts caution against the risks of unregulated AI and stress the importance of incorporating user feedback in the development process. Balancing the protection of user privacy with leveraging data for advancement will continue to be a delicate task with significant implications.