ChatGPT, Meta and Google generative AI should be designated "high risk" under dedicated artificial intelligence laws

A bipartisan committee of senators has recommended that ChatGPT and the generative AI products of Meta and Google be designated "high risk" under dedicated artificial intelligence laws. The committee proposes a dedicated AI act to regulate the highest-risk technologies.

The committee accuses tech giants of stealing from Australian creators and calls for the government to develop a scheme ensuring fair remuneration when creative work is used by AI tools.

Recommendations by the Bipartisan Committee

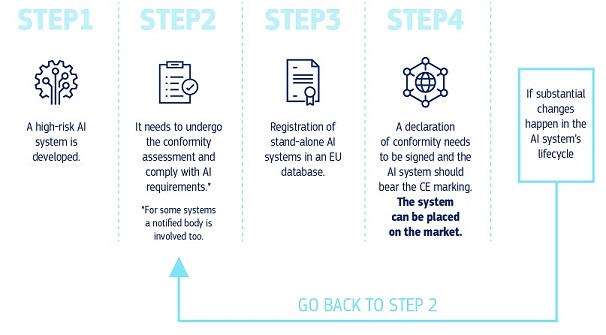

The bipartisan recommendation states that high-risk AI technologies, such as OpenAI's ChatGPT and the generative AI products of Meta and Google, should be subject to strict regulation or potential bans under dedicated artificial intelligence laws. The committee emphasizes the need for overarching legislation that explicitly prohibits certain uses of AI and provides a comprehensive framework for AI applications across sectors.

Industry and Science Minister Ed Husic is tasked with developing the government's response to the rising prevalence of AI technologies, with a focus on ensuring that companies do not exploit Australians.

Protecting Rights and Addressing Risks

Artificial intelligence offers significant potential for improving productivity and well-being, but it also introduces new risks to individual rights and freedoms. The committee emphasizes the necessity of new AI laws to rein in big tech companies and establish robust safeguards around high-risk AI applications.

The committee stresses the importance of treating general-purpose AI models as high-risk by default, with mandated transparency, testing, and accountability measures in place. It also highlights the need for regulations to address potential biases, discrimination, and errors in AI algorithms.

Ensuring Transparency and Accountability

The committee calls for increased transparency from AI developers, particularly regarding the use of copyrighted works in training datasets. It advocates for mechanisms to guarantee fair remuneration to creators whose work is utilized in AI products, underscoring the significance of protecting the rights of individuals working in the creative industries.

In conclusion, the committee recommends a risk-based approach to AI governance, one that mitigates the significant risks associated with AI technologies while allowing the industry to grow in a safe and responsible manner.