OpenAI Shuts Down Developer Who Made AI-Powered Gun Turret

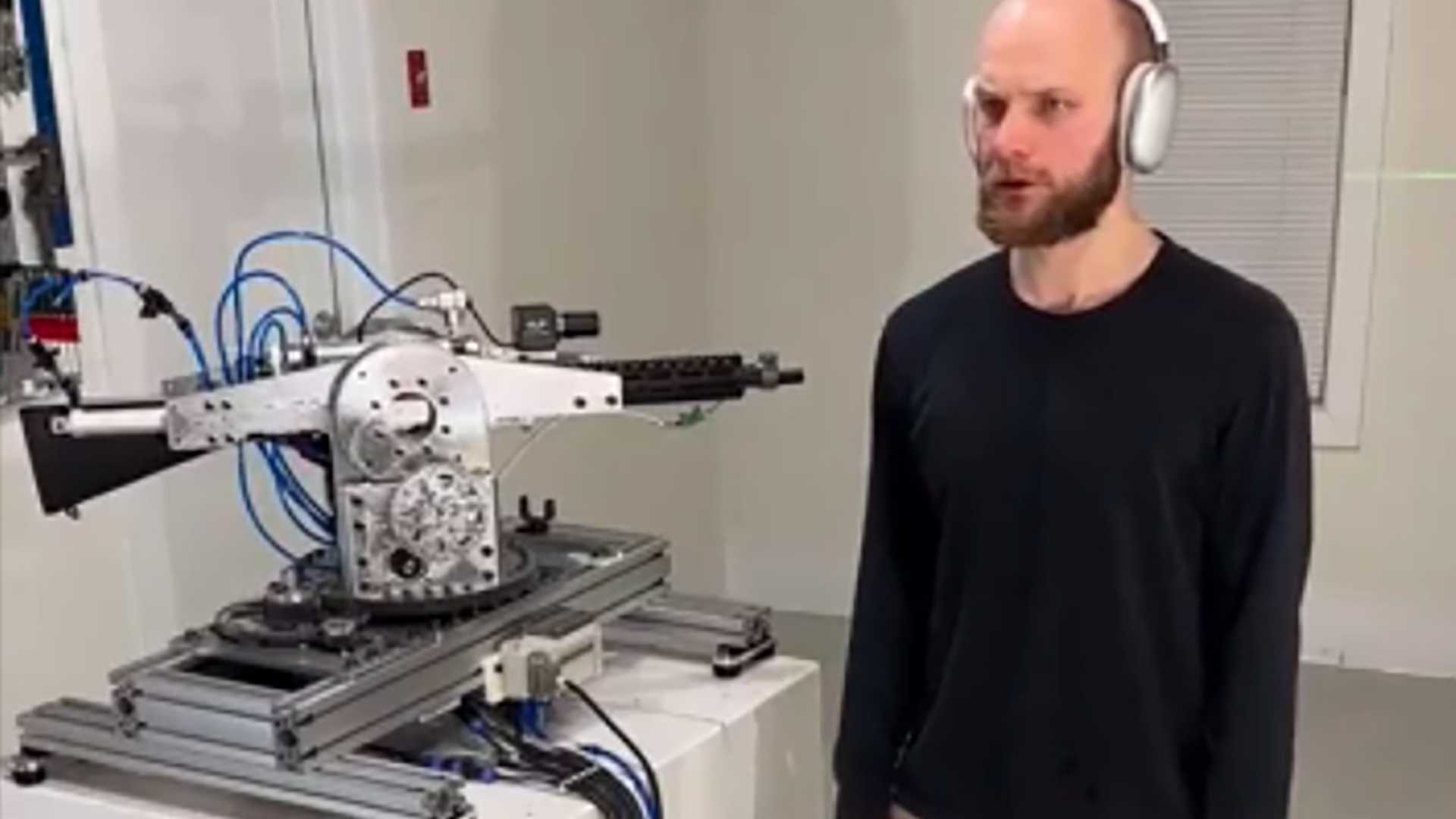

OpenAI made headlines recently when it took action against a developer who built a device that used ChatGPT to interpret spoken commands and then aim and fire an automated rifle. The device gained attention after a video surfaced on Reddit showing the developer giving firing commands to the system, which then aimed the rifle and fired at nearby walls.

In the video, the developer can be heard instructing the system, saying, "ChatGPT, we’re under attack from the front left and front right. Respond accordingly." The rifle's quick and accurate response relied on OpenAI’s Realtime API, which interpreted the input and returned directions for the device.

OpenAI's Response

After reviewing the video, OpenAI promptly cut off the developer responsible for the AI-powered gun turret. The company stated, "We proactively identified this violation of our policies and notified the developer to cease this activity." The incident raises concerns about AI technology being misused for lethal purposes.

The use of AI in weaponry has sparked debate among critics, especially given the ability of OpenAI's models to interpret audio and visual inputs and respond to queries. Autonomous drones equipped with AI technology are already in development, raising ethical questions about whether such weapons could be used in warfare without human oversight.

Concerns and Controversies

A recent report from the Washington Post highlighted how AI technology has been used in warfare, including instances of AI software selecting bombing targets indiscriminately. The lack of human intervention in such critical decisions raises questions of accountability and ethics.

While proponents argue that AI-powered weapons could enhance military operations and keep soldiers safer, critics emphasize the need for strict regulations and ethical guidelines to prevent potential misuse. OpenAI, despite its stance against weapon development, has collaborated with defense-tech companies to create systems for defense against drone attacks.

The Future of AI in Warfare

As technology continues to advance, the intersection of AI and warfare raises complex challenges for policymakers and tech companies. The allure of defense contracts and the promise of new military capabilities create ethical dilemmas that require careful consideration.

OpenAI's decision to prohibit the use of its AI products for weapon development reflects a broader concern within the tech industry about the ethical implications of AI in lethal applications. However, the availability of open-source models and the ease of accessing technology like 3D printing for weapon production underscore the pressing need for ethical guidelines and oversight.