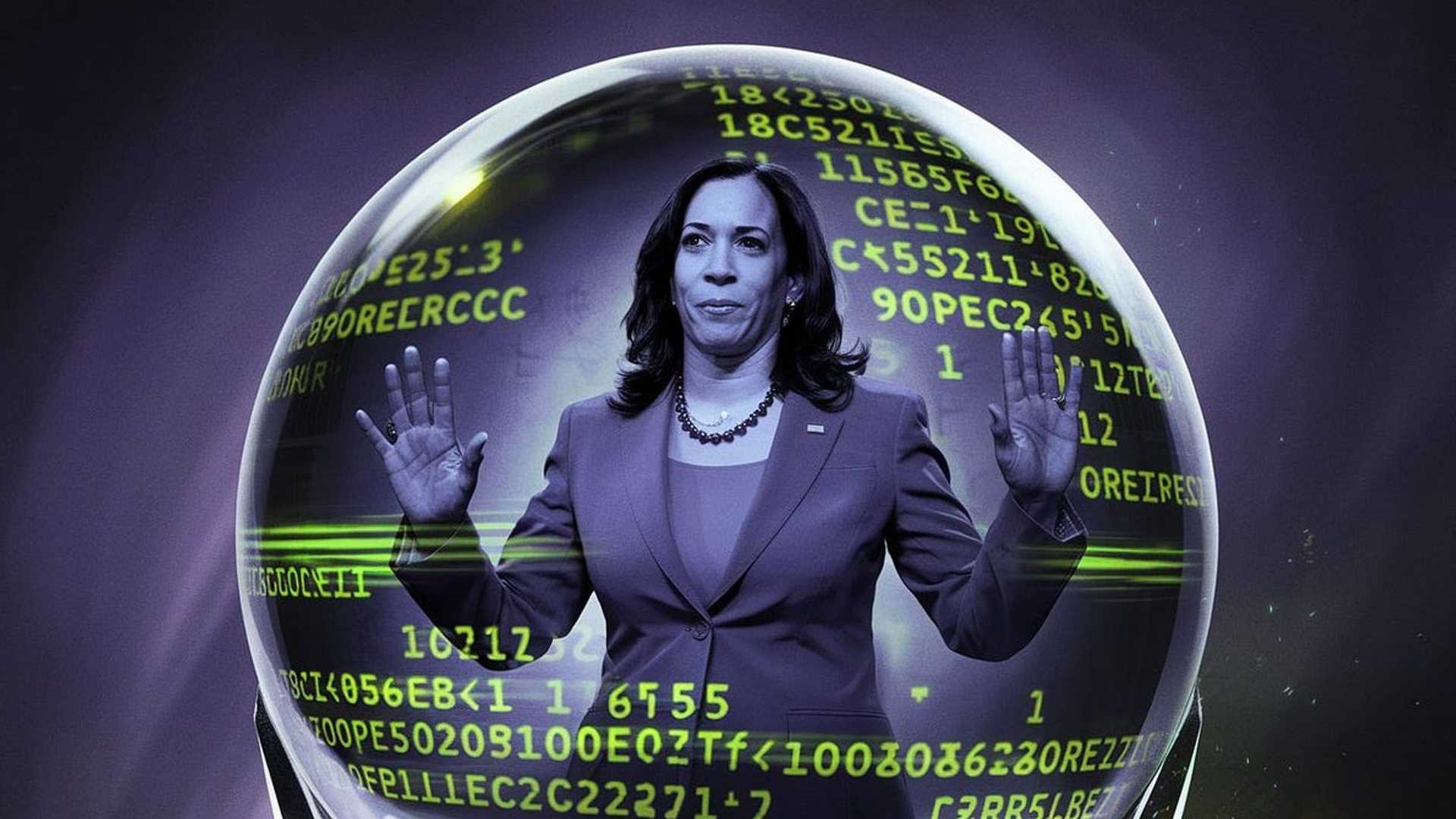

Why ChatGPT failed so spectacularly at predicting the US election ...

ChatGPT’s consistent but incorrect prediction of a Kamala Harris win reveals issues with AI training data, narrative preferences, and safeguards that affect neutrality.

The US presidential election is over. Regular readers will know that I attempted to use AI (ChatGPT) to predict the outcome. The attempt failed: the hundreds of trials I ran consistently favored Kamala Harris. My motives were apolitical. The experiment was about the capacity of AI to tap into the wisdom of crowds.

The same methodology had previously been used successfully by economists at Baylor University. Weird as it sounds, AI has been proven to be better at predicting our behavior than we are. I recommend *Prediction Machines: The Simple Economics of Artificial Intelligence* (2022) by Agrawal, Gans & Goldfarb.

I still believe in the potential of AI to aggregate crowd intelligence. AI is more well-read than any person in history and has access to all the latest news.
