The government says more people need to use AI. Here's why that's...

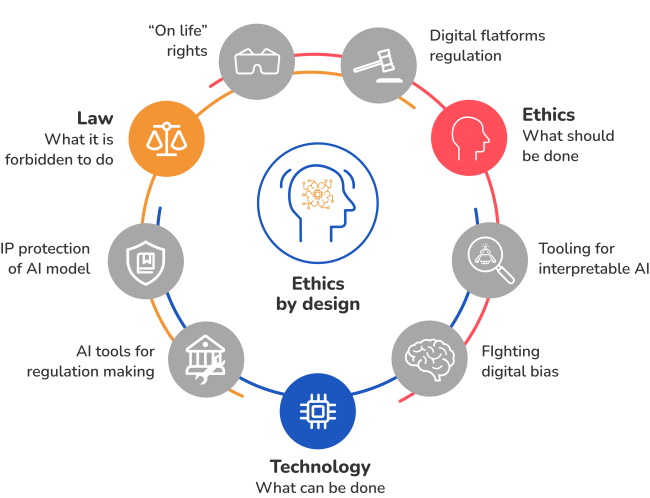

The Australian government recently released voluntary artificial intelligence (AI) safety standards and proposed greater regulation for the use of AI in high-risk situations.

The take-home message from the federal Minister for Industry and Science, Ed Husic, was that more people need to use AI in order to build trust. But why do people need to trust this technology, and why is it important for more people to use it?

AI systems are trained on vast data sets using complex mathematics that most people do not understand. They produce results that cannot easily be verified, and even state-of-the-art systems are prone to errors. For instance, ChatGPT's accuracy appears to be declining, and Google's Gemini chatbot has made comical recommendations such as putting glue on pizza.

Public skepticism of AI is justified, given the technology's track record. From concerns about job losses to the various harms AI presents (such as accidents involving autonomous vehicles, bias in recruitment systems, and discriminatory legal tools), the risks associated with AI cannot be overlooked.

AI Adoption and Risks

Despite the government's push for increased AI usage, recent reports have shown that humans outperform AI in various tasks. The rush to adopt new technology without assessing its suitability is a common pitfall. It's essential to discern when AI is the appropriate tool for a given task.

One of the significant risks of widespread AI use is the potential leakage of private data. Companies that collect data on a massive scale raise concerns about transparency, privacy, and security. Users are often left uncertain about whether their data is used to train models, how it is secured, and which third parties can access it.

Trust Exchange Program and Data Collection

The government's proposed Trust Exchange program has raised alarms about the extensive collection of personal data. Collaboration with large technology companies, including Google, has fueled concerns about mass surveillance and data privacy.

Moreover, the influence of technology on politics and behavior, coupled with automation bias, poses further risks to society. Blind trust in AI without proper education could lead to comprehensive surveillance and control systems, eroding social trust and autonomy.

Regulation and Oversight

While AI regulation is crucial, regulating AI should not mean mandating its use. Establishing standards for the use and management of AI systems, as advocated by international bodies like the International Organization for Standardization, can promote responsible AI deployment.

It's essential to focus on safeguarding individuals rather than pushing people to adopt and trust AI. Prioritizing informed decision-making and ethical AI practices can lead to a more secure and transparent technological landscape.