How will multimodal AI redefine film and storytelling?

On 13 May 2024, OpenAI made headlines by announcing its newest AI model, GPT-4o, which can respond to audio, vision, and text input in real time. Earlier in the month, a music video for Washed Out’s “The Hardest Part”, created using OpenAI’s AI system Sora, was published on YouTube. In just one day, it garnered over 38k views. The video was directed by LA-based artist Paul Trillo, so human labor and intentionality were still part of its making. Even so, viewers were shocked: “So now a multimodal AI system can generate film-like videos without real human performers or footage shot by a real camera in real locations?” People marvel at the mesmerising capacity of AI models and wonder what comes next. Leading AI scientists have forecast that multimodal AI systems will be a key growth area in 2024 and the following years. How this will affect creative industries such as film, animation, music, and gaming is drawing attention from professionals across industries and the public alike.

Challenging Conceptions of Media Culture

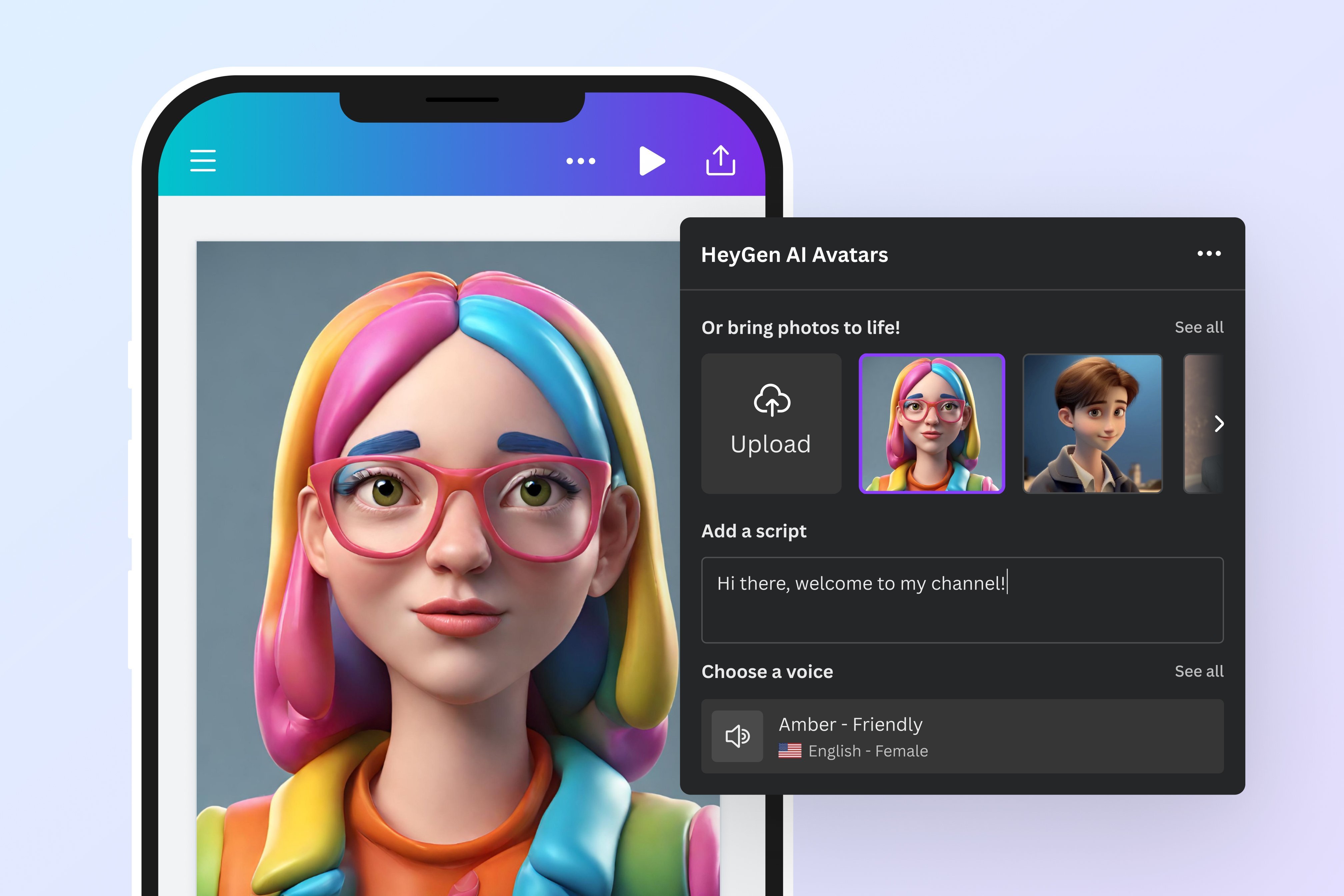

Generative AI video tools challenge our conceptions of media culture. Applications such as Runway AI, Stable Video Diffusion, Emu Video (Meta), and Pika Labs can generate high-resolution videos lasting between 4 and 10 seconds from prompts in the form of text, images, or video. They frequently release new filters and functions, such as lip sync, depth extraction, and 3D capture, that achieve complex visual effects in a few moments. As computing efficiency and AI chips improve in the coming years, the length of videos created by AI models may increase substantially.

Changing the Narrative Landscape

AI-generated or AI-augmented films and videos can break open the established modes of storytelling in cinema and other media arts. One feature of AI videos is that the frames tend to jump-cut from one scene to another, producing sequences that defy logic and rationale. In “The Hardest Part”, for instance, young students dressed in hippie style run through spaces oddly “glued” together: from a school bus interior to classrooms, from narrow hallways in laundry rooms to the interiors of 1960s-style flying cars, from water-inundated caves to a garage on fire, and so on. The seemingly human-like characters in the video walk with an awkward, unsteady gait, and they crash through endless spaces, creating a dizzying effect for viewers. While the main characters are clearly a couple, the narrative trajectory of what they are doing and why is hard to grasp. Such a visual effect induces what film and media studies scholar Shane Denson has termed ‘discorrelation’ between the image and human experience.

The Impact of Machine Learning Models

The machine learning models used in AI training reduce and compress input data into vectors in a latent space. Such processes extract an image or video from its rich narrative context and classify it alongside similar objects. Only the main object in question is detected, and the rest of the information is blurred. Additionally, a single label can capture only part of what is going on in an entire image, and sometimes not even accurately. Contextual information and representations of real-world experience evaporate. Although this resistance to realistic representation resonates with 20th-century art movements such as Surrealism and Dadaism, the complete destabilization of the storyline makes it a challenge to create meaning in AI-generated videos. One advantage of AI models is their modular production, a hallmark of modern mass culture. Video generation tools make it possible to combine various movements with various backgrounds. Portraying authentic scenes and moods will be critical for artists who want to make impactful AI videos, and it opens new avenues for creativity.

The Future of AI in Filmmaking

Multimodal AI tools such as Sora, Runway, and Pika Labs may not replace human-made films, but they are on a path to dramatically change our screen culture. AI might be a good facilitator for those who want to generate different backgrounds for specific scenes more efficiently while humans remain engaged in the acting process. Human intentionality and emotion are key to developing meaningful AI films. Filmmakers, playwrights, artists, and creators can take this opportunity to hone storytelling skills that bring out the nuances of human interactions, behaviors, and sensibilities. They can also co-create with AI to understand contexts, using it to enrich storytelling rather than to replace it.