What Lessons Should the U.S. Learn From DeepSeek?

IFP Director of Emerging Technology Policy Tim Fist presented this testimony on April 8, 2025 before the U.S. House Committee on Science, Space, and Technology, during a hearing titled "DeepSeek: A Deep Dive." The Committee recorded his oral remarks on video; his full written testimony is included below.

Subcommittee Chairman Obernolte, Committee Chairman Babin, Subcommittee Ranking Member Stevens, and other members of the subcommittee: Good morning, and thank you for the opportunity to testify today. I’m the Director of Emerging Technology Policy at the Institute for Progress (IFP), a nonprofit and nonpartisan research organization focused on innovation policy. Our organization is dedicated to accelerating the pace of scientific progress and steering the advancement of emerging technologies in a direction that is compatible with American values. As one example, we engage in a research partnership with the National Science Foundation to support its work on improving scientific grantmaking and ensuring the American R&D enterprise is operating effectively.

Lessons from DeepSeek

Many of my policy recommendations are drawn from IFP’s response to the Office of Science and Technology Policy’s request for information for an action plan for AI. In December and January, the Chinese AI company DeepSeek open-sourced two new models — V3 and R1 — and launched a free chatbot app that rapidly topped mobile app store rankings. In March alone, it was used over 800 million times. For context, OpenAI’s ChatGPT saw 5 billion uses that month — but the directional shift is clear: China is now distributing models close to the frontier globally, fast, and for free.

That prompted some to label DeepSeek a “Sputnik moment” for American AI. The analogy is rhetorically powerful, but technically misleading. Where Sputnik represented a dramatic leap in capability, DeepSeek’s most impressive advances are comparable to US models from mid-2024 — about eight months behind the state of the art. Unlike Sputnik’s closed technologies, DeepSeek’s models are freely available. And while the Sputnik project was designed and manufactured using Soviet technologies, DeepSeek heavily relies on American technologies.

Security Concerns and Risks

The wide availability of cheap and capable Chinese models presents a security concern. China’s civilian and military sectors are famously “fused,” and the CCP can exert top-down control over companies and their products. This risk is made more severe by the fact that AI models and applications can be designed with backdoors that we have no good way of detecting, or modified to spread specific ideologies.

Insofar as Chinese models are only used in chatbots, these risks are likely not an acute threat to national security. But some risks are clearly present: DeepSeek’s chatbot application has demonstrated a tendency to suppress information on certain topics and amplify propaganda Beijing uses to discredit critics. And there are reasons to think these risks will grow.

National Security Risks

The new paradigm in AI development is “agents”: AI applications that can operate autonomously to achieve user-specified goals, like conducting research or maintaining software. If American or allied organizations deploy agents based on DeepSeek’s models, those agents become a clear vector for national security risks.

Late last year, the NSF launched a program on open-source security, with proposals due later this month. Such programs should be expanded to tackle problems specific to open-source AI, including AI agents.

Institutional Technical Capability

DeepSeek’s research was publicly available. Its reliance on US chips was known. And yet its impact caught Washington off guard — much like Huawei’s 2023 release of a smartphone powered by SMIC’s 7nm chip, which revealed a blind spot in our understanding of Chinese manufacturing capacity.

The real issue is a lack of institutional technical capability. US policymakers need fast, expert analysis of foreign models, chips, and deployments — before they become headline events. That requires a dedicated team able to:

- Assess foreign AI capabilities accurately and quickly.

- Anticipate future AI advancements and threats.

- Provide recommendations for proactive responses.

Leveraging American Technologies and Export Controls

DeepSeek uses powerful computer chips developed by NVIDIA — an American firm — and its core algorithmic approach is based directly on breakthroughs made by American AI researchers.

The fact that Chinese AI developers rely on American technologies gives Washington a powerful source of leverage in the form of export controls. DeepSeek’s founder has openly acknowledged that “[their] problem has never been funding; it’s the embargo on high-end chips.”

Shaping Technological Development for American Interests

The federal government should recognize that it has an essential role to play in shaping the direction of technological development. The full might of the American R&D engine has been a powerful force for aligning emerging technologies with American interests in the past, and it can be again for AI.

Within the field of AI, we have seen massive advances in fundamental capabilities, without equivalent advances in model robustness, interpretability, verification, and security. Private companies are less focused on these areas and more focused on discovering commercial applications. But the American public has a strong interest in ensuring that models are trustworthy in their application.

This also matters for American competitiveness and national security. If US models are more reliable than their foreign counterparts, American firms are more likely to be the providers of choice for the world, including in scientific applications that lack strong commercial promise but are important for soft power and advancing basic research.

The rapid deployment of AI systems for US military applications is also hindered by fundamental AI reliability challenges.

R&D Projects and Funding Mechanisms

Here, I’ll lay out a range of promising R&D projects and funding mechanisms the federal government could use to solve these problems. America’s open-source AI ecosystem is strong, but not unchallenged. Based on publicly available information, DeepSeek’s models have already been downloaded over 12 million times, not far behind Meta’s, at 24 million.

Federal prize competitions are a proven tool for accelerating innovation and are already authorized under the America COMPETES Act. Prizes work particularly well for open-source AI.

A natural institutional home for such efforts is the proposed NIST Foundation, created under bipartisan legislation from this Committee. A foundation could channel public, private, and philanthropic funds toward national goals, including open-source AI leadership.

Finally, to boost adoption, the National AI Research Resource (NAIRR) should host American open-source models — subsidizing distribution for startups, researchers, and small firms. Making US models easier to test, trust, and deploy is essential to securing long-term advantage.

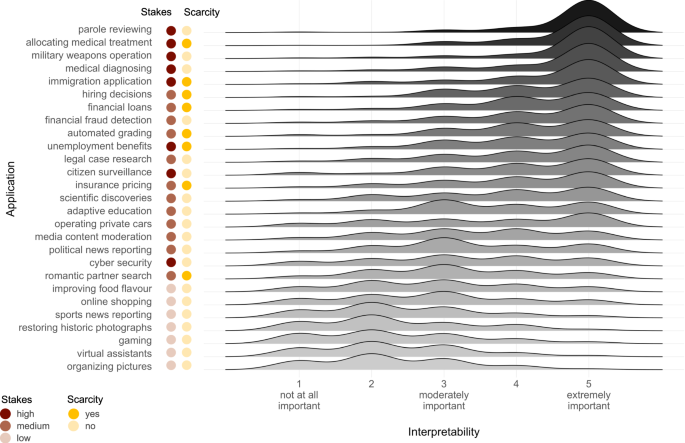

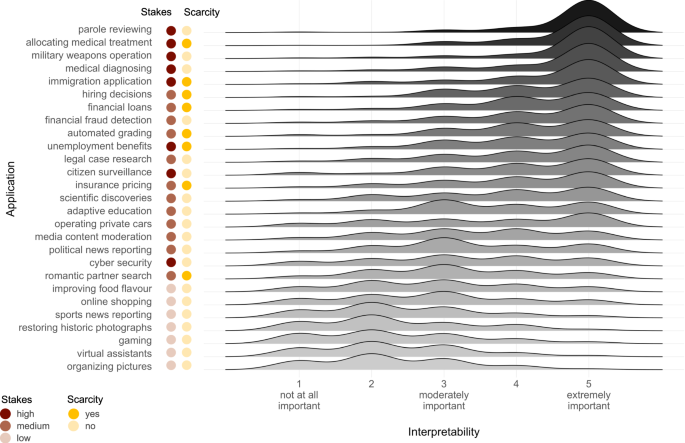

AI interpretability research aims to develop a more concrete understanding of a model’s predictions, decisions, or behavior. Solving interpretability will allow for safer and more effective AI systems via more precise control, and the ability to detect and neutralize adversarial modifications such as hidden backdoors.