AI And The Law: The Lawyers Are Innovating Too - The Innovator

No one likes to see the lawyers get involved. But in AI, as in all things, the lawyers have a very definite role to play. The use of AI implicates rights and interests and grey areas, and it will produce new opportunities and new types of agreements, along with new types of disputes. It will test the application of existing laws and reveal gaps to be filled with new laws. So, the law and lawyers are at the beginning of their own steep innovation curve, along with everyone else.

The Legal Landscape in the United States

It would be easy to think that the legal issues are all about copyright, with OpenAI, Stability AI and other foundation model developers being sued by over a dozen companies for infringing their copyrights. In fact, it is partly about that: these are indeed important issues to sort out in the courts and in boardrooms. But there is much more to it. In the United States, by direction of the White House in the Executive Order issued on October 30, 2023, each component of the U.S. Government is required to review its use of, and rules for the use of, AI. That work is in progress and being reported out on a regular basis.

Much of the work implicates new codes of conduct and guidelines that will be informed by internal legal counsel, as well as the harder work of applying such guidelines to real-world fact patterns. Alongside this, the same U.S. agencies are reviewing their existing regulations to establish and then articulate how those laws-on-the-books apply to the use of AI within their domains. They are reviewing existing regulatory powers and enforcement policies.

Enforcement and Regulation

For instance, all of the following have publicly pledged to enforce federal laws to promote responsible innovation in the context of automated systems: the Equal Employment Opportunity Commission (EEOC), Department of Justice (DOJ) Civil Rights Division, Federal Trade Commission (FTC), Consumer Financial Protection Bureau (CFPB), Department of Education (DOE), Department of Health and Human Services (HHS), Department of Homeland Security (DHS), Department of Labor (DOL) and Department of Housing and Urban Development (HUD).

Similarly, the EEOC has emphasized that companies using AI to make or support hiring or promotion decisions should expect the rules to apply as they would in the absence of those advanced tools. Those companies are subject to enforcement actions for violations, incorrect use or discriminatory practices. Even in the absence of new legislation and regulation, we are likely to see other such applications of existing law in the U.S.

International Implications

In Canada, Air Canada was successfully sued when a chatbot on its website served a customer incorrect information about bereavement fares. The customer relied on the incorrect information, and Air Canada argued that because the chatbot was developed by a third party, the carrier did not bear responsibility for its performance. The British Columbia Civil Resolution Tribunal (CRT) ruled otherwise, ordering compensation for the customer and stating: “It should be obvious to Air Canada that it is responsible for all the information on its website. It makes no difference whether the information comes from a static page or a chatbot.”

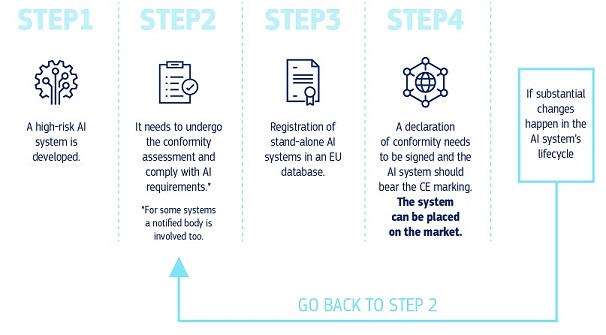

In Europe, the new EU AI Act and related regulations will provide many more guardrails on AI use and impact, protecting the rights of EU citizens and imposing obligations on businesses operating within the Common Market.

Beyond the application of specific regulatory restrictions, a further concern for businesses and their lawyers is the simple fact that generative AI systems, in part because of their basic design, still make a lot of errors. Organizations rolling these tools out will need, at a minimum, strong governance for how the tools are developed and used, how employees are trained on the tools, and how customers are invited to interact with them.

Errors will occur, and that is an expected part of experimentation. But the absence of ex ante governance to anticipate, minimize and account for those errors is now within the ambit of the lawyers and regulators who may impose ex post liability. Those who insist on moving fast and breaking things would do well to remember that the lawyers are innovating too.