24 of the best large language models in 2025

Large language models, also known as LLMs, are the driving force behind the generative AI boom. These black-box AI systems use deep learning on extensive datasets to process and generate text. The foundation for modern LLMs dates back to 2014, when the attention mechanism was introduced in the research paper "Neural Machine Translation by Jointly Learning to Align and Translate." This machine learning technique, loosely inspired by human cognitive attention, lets a model weigh the most relevant parts of its input when producing each part of its output. The transformer architecture built on it in 2017 with the paper "Attention Is All You Need."
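To make the mechanism concrete, here is a minimal Python sketch of scaled dot-product attention, the form defined in "Attention Is All You Need" (the 2014 paper used a different, additive scoring function). It covers a single attention head with no masking and uses plain lists instead of a tensor library. The function names and toy vectors are illustrative and not taken from any particular implementation.

```python
import math
from typing import List

Matrix = List[List[float]]

def softmax(xs: List[float]) -> List[float]:
    # Subtract the max before exponentiating for numerical stability.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Compute softmax(Q K^T / sqrt(d_k)) V for one head, without masking."""
    d_k = len(k[0])
    out = []
    for q_row in q:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [
            sum(a * b for a, b in zip(q_row, k_row)) / math.sqrt(d_k)
            for k_row in k
        ]
        weights = softmax(scores)  # attention weights sum to 1
        # The output is the attention-weighted average of the value vectors.
        out.append([
            sum(w * v_row[j] for w, v_row in zip(weights, v))
            for j in range(len(v[0]))
        ])
    return out

# A query that resembles the first key attends mostly to the first value.
q = [[1.0, 0.0]]
k = [[1.0, 0.0], [0.0, 1.0]]
v = [[10.0, 0.0], [0.0, 10.0]]
result = scaled_dot_product_attention(q, k, v)
```

Because the weights come from a softmax, each output row is a convex combination of the value rows; here the first component of `result` is larger because the query points in the same direction as the first key.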

Many of today's most prominent large language model families, including the generative pre-trained transformer (GPT) series and BERT, are based on the transformer architecture. OpenAI's GPT-based ChatGPT, for example, gained over 100 million users within two months of its launch in 2022. Tech giants such as Google, Amazon, and Microsoft have since released models of their own, alongside a growing number of open-source alternatives.

Notable Large Language Models

Below are some of the noteworthy large language models that are shaping the field:

BERT

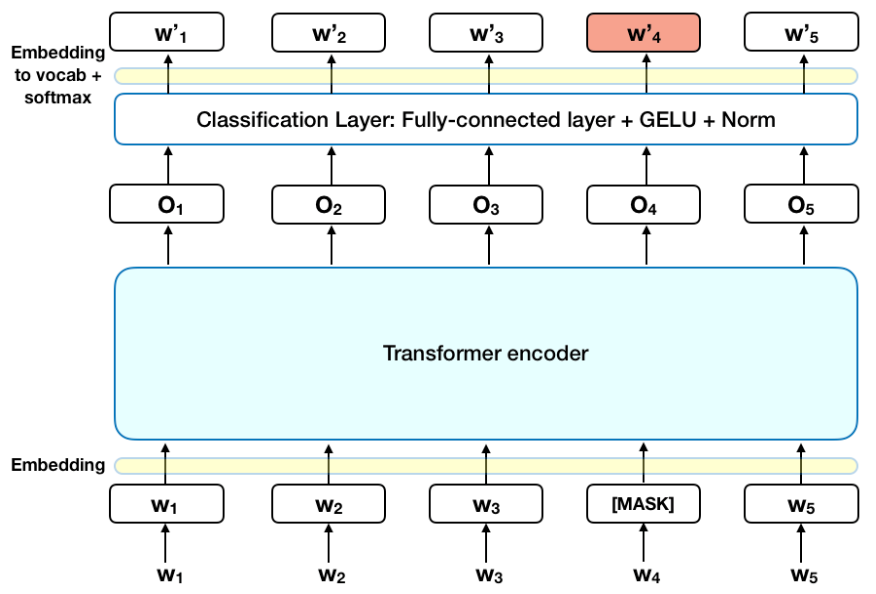

BERT, introduced by Google in 2018, is a transformer-based model built from a stack of transformer encoders that turns an input sequence of tokens into a sequence of context-aware representations. Its largest configuration has about 340 million parameters. BERT was pre-trained on a large text corpus and then fine-tuned for specific tasks such as natural language inference and semantic textual similarity.
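BERT's main pre-training objective, as described in the BERT paper, is masked language modeling: about 15% of input tokens are selected, and the model must predict the originals. Of the selected tokens, 80% are replaced with a `[MASK]` token, 10% with a random token, and 10% are left unchanged. The Python sketch below shows only that input-corruption step. It works on word strings rather than BERT's WordPiece token IDs, and `mask_tokens` is a hypothetical helper, not part of any library.

```python
import random
from typing import List, Optional, Tuple

MASK = "[MASK]"

def mask_tokens(
    tokens: List[str],
    vocab: List[str],
    mask_prob: float = 0.15,
    rng: Optional[random.Random] = None,
) -> Tuple[List[str], List[Optional[str]]]:
    """Corrupt a token sequence BERT-style for masked language modeling.

    Returns the corrupted tokens and, for each position, the original token
    the model must predict (None where no prediction is required).
    """
    rng = rng or random.Random()
    corrupted = list(tokens)
    labels: List[Optional[str]] = [None] * len(tokens)
    for i, tok in enumerate(tokens):
        # Select each position independently with probability mask_prob.
        if rng.random() >= mask_prob:
            continue
        labels[i] = tok
        roll = rng.random()
        if roll < 0.8:
            corrupted[i] = MASK  # 80%: replace with the mask token
        elif roll < 0.9:
            corrupted[i] = rng.choice(vocab)  # 10%: replace with a random token
        # Remaining 10%: keep the original token unchanged.
    return corrupted, labels

sentence = "the cat sat on the mat".split()
corrupted, labels = mask_tokens(sentence, vocab=sentence, mask_prob=0.5, rng=random.Random(0))
```

During pre-training, the loss is computed only at positions where `labels` is not `None`; fine-tuning then adapts the pre-trained encoder to a downstream task.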

Claude LLM

Claude, developed by Anthropic with a focus on AI safety, is designed to produce outputs that are helpful, harmless, and honest. Claude 3.5 Sonnet, released in 2024, is described by Anthropic as better at understanding nuance, humor, and complex instructions than earlier versions, which makes it well suited to application development.

Cohere

Cohere offers a family of models aimed at businesses, including Command for text generation, Rerank for reordering search results by relevance, and Embed for producing text embeddings. Companies can fine-tune these models for their own use cases.
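As a rough illustration of what an embedding model such as Embed is used for, the Python sketch below ranks documents by cosine similarity to a query vector, the basic step behind semantic search. It does not call Cohere's API: the three-dimensional vectors are hypothetical stand-ins for real embeddings, which have many more dimensions, and the helper names are illustrative.

```python
import math
from typing import Dict, List, Tuple

def cosine_similarity(a: List[float], b: List[float]) -> float:
    # Dot product divided by the product of vector lengths.
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def rank_by_similarity(
    query_vec: List[float], doc_vecs: Dict[str, List[float]]
) -> List[Tuple[str, float]]:
    # Score every document against the query, most similar first.
    scored = [(doc_id, cosine_similarity(query_vec, vec)) for doc_id, vec in doc_vecs.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)

# Hypothetical embeddings for three help-center pages.
docs = {
    "refund-policy": [0.9, 0.1, 0.0],
    "shipping-times": [0.1, 0.9, 0.1],
    "careers-page": [0.0, 0.1, 0.9],
}
# Stand-in for an embedded query such as "How do I get my money back?"
query = [0.8, 0.2, 0.1]
ranking = rank_by_similarity(query, docs)
```

In a real system, the query and documents would be embedded by the same model so that their vectors are comparable.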

DeepSeek-R1

DeepSeek-R1, an open-source reasoning model from the Chinese AI company DeepSeek, is trained largely with reinforcement learning to work through complex problems and logical inference step by step.
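DeepSeek reports training R1 with Group Relative Policy Optimization (GRPO) and rule-based rewards, such as checking whether a final answer is correct. The core idea of GRPO is to sample a group of answers to the same prompt and judge each answer relative to the rest of its group, which removes the need for a separate value (critic) model. The Python sketch below shows only that advantage computation. The exact-match `accuracy_reward` is a hypothetical stand-in for DeepSeek's reward rules, and the clipped policy update and KL penalty that consume these advantages are omitted.

```python
import statistics
from typing import List

def accuracy_reward(answer: str, reference: str) -> float:
    """Hypothetical rule-based reward: 1.0 for an exact final-answer match, else 0.0."""
    return 1.0 if answer.strip() == reference.strip() else 0.0

def group_relative_advantages(rewards: List[float], eps: float = 1e-8) -> List[float]:
    """Normalize each reward against the mean and spread of its own group."""
    mean = statistics.fmean(rewards)
    # Population standard deviation; using the sample std instead is an equally
    # plausible choice for this sketch.
    std = statistics.pstdev(rewards)
    # eps avoids division by zero when every answer earned the same reward.
    return [(r - mean) / (std + eps) for r in rewards]

# Four sampled answers to the same math prompt, scored against a known reference.
answers = ["42", "41", "42", "forty"]
rewards = [accuracy_reward(a, "42") for a in answers]
advantages = group_relative_advantages(rewards)
```

Correct answers receive positive advantages and incorrect ones negative advantages, so the update pushes the model toward the reasoning that produced correct results. A group in which every answer scores the same yields zero advantage and no learning signal.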

Ernie

Baidu's Ernie powers the Ernie 4.0 chatbot, which has attracted more than 45 million users. Ernie is rumored to have 10 trillion parameters. It works best in Mandarin but is also capable in other languages.

Falcon

Falcon is a series of transformer-based models from the Technology Innovation Institute (TII) in Abu Dhabi. The models support multiple languages and come in several sizes to suit different applications.

Gemini

Google's Gemini family of LLMs, which comes in Ultra, Pro, and Nano sizes, consists of multimodal models that can handle text, images, audio, and video. Google has integrated Gemini into many of its products and services.

Gemma

Google's Gemma models are built from the same research and technology as Gemini but are released as openly available LLMs. They come in several parameter sizes for different applications, including running locally and deploying through Google Vertex AI.

GPT-3

OpenAI's GPT-3, with 175 billion parameters, drew wide attention on its release in 2020 for its ability to perform new tasks from just a few examples in the prompt. Its successor, GPT-3.5, was fine-tuned with reinforcement learning from human feedback (RLHF) and powered the original release of ChatGPT.
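The 175 billion figure can be roughly reconstructed from the largest model's published shape: 96 transformer layers with a hidden size of 12,288 and a 2,048-token context. The Python sketch below uses the common approximation of about 12·d² weights per transformer block (4·d² for the attention projections, 8·d² for the feed-forward layer with a 4·d inner size), ignoring biases and layer norms. It also assumes the output layer shares weights with the token embedding, as in GPT-2, so the result is an estimate, not OpenAI's exact count.

```python
# Shape of the largest GPT-3 model, as reported in the GPT-3 paper.
n_layers = 96
d_model = 12288
vocab_size = 50257   # GPT-2 byte-pair-encoding vocabulary, reused by GPT-3
context_len = 2048

# Per block: ~4*d^2 for Q, K, V and output projections,
# plus ~8*d^2 for the MLP (d -> 4d -> d). Biases and layer norms ignored.
per_block = 12 * d_model ** 2
blocks_total = n_layers * per_block

# Token embeddings plus learned position embeddings
# (assumes the output layer reuses the token embedding matrix).
embeddings = vocab_size * d_model + context_len * d_model

total = blocks_total + embeddings
print(f"{total:,} parameters (~{total / 1e9:.1f} billion)")
# prints: 174,588,899,328 parameters (~174.6 billion)
```

Nearly all of the parameters sit in the transformer blocks. The embeddings contribute well under 1%, which is why parameter counts for large models are usually estimated from the layer count and hidden size alone.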