ChatGPT's Advanced Voice Mode gets a significant update to make ...

OpenAI introduced Advanced Voice Mode last year alongside the launch of GPT-4o. The feature is built on natively multimodal models such as GPT-4o, which can respond to audio input in as little as 232 milliseconds, with an average of 320 milliseconds. That is similar to human response time in a typical conversation. It can also generate more natural-sounding audio, pick up on non-verbal cues such as how fast you are talking, and respond with emotion.

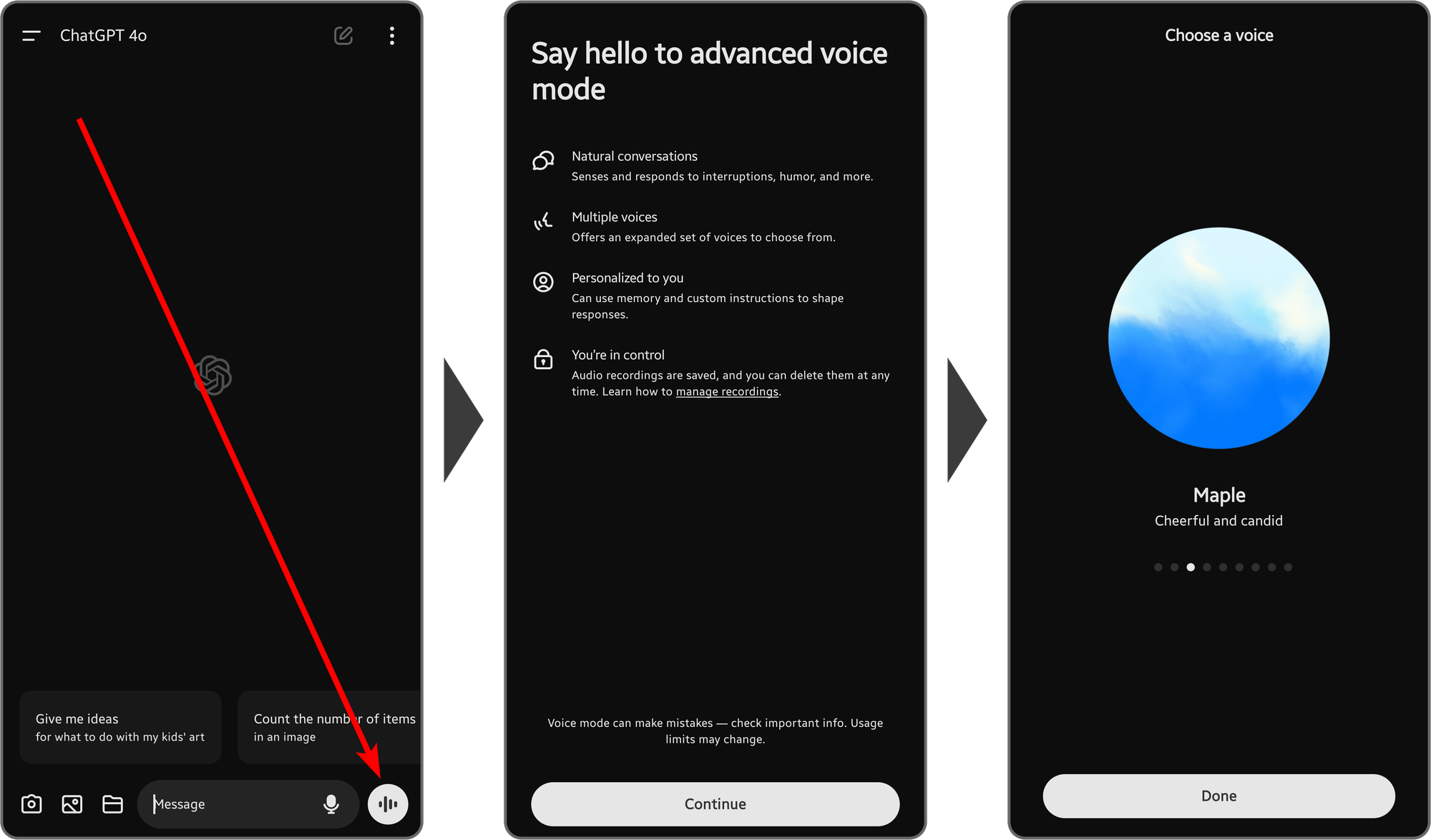

Update on Advanced Voice Mode

Earlier this year, OpenAI released a minor update to Advanced Voice Mode that reduced interruptions and improved accents. Today, OpenAI has launched a significant upgrade that makes Advanced Voice Mode sound even more natural and human-like. Responses now feature subtler intonation, more realistic cadence (including pauses and emphasis), and more accurate expression of certain emotions, such as empathy and sarcasm.

Wow, new expressive voice in @ChatGPTapp doesn’t just talk, it performs. Feels less like an AI and more like a human friend. Nice work @OpenAI team. 🎤🎶🚀 pic.twitter.com/LRkKNs3g3C

Translation Support

This update also adds support for translation. ChatGPT users can now use Advanced Voice Mode to translate between languages: ask ChatGPT to start translating, and it will keep translating throughout the conversation until told to stop. For many users, this could reduce the need for a dedicated voice translation app.

Conclusion

With these updates, ChatGPT's Advanced Voice Mode moves noticeably closer to natural, human-like conversation, with more expressive speech and built-in live translation.

© Since 2000 Neowin®. All trademarks mentioned are the property of their respective owners.