Understanding the Concerns Raised by Artificial Intelligence Developers

The development of artificial intelligence (AI) continues to push boundaries and raise important questions about its implications. Recently, a group of AI developers came together to voice their concerns in an open letter titled "A right to warn about advanced artificial intelligence." The group includes current and former employees of leading AI companies such as OpenAI and Google DeepMind.

The letter highlights the immense potential of AI technology to benefit humanity. It also acknowledges the serious risks that come with AI's development. These risks include exacerbating existing inequalities, misuse for manipulation and misinformation, and the loss of control over autonomous AI systems. The signatories warn that this loss of control could have catastrophic consequences, potentially including human extinction.

AI companies and governments worldwide have acknowledged these risks. Even so, the developers argue that the industry still lacks transparency and accountability. They call for stronger oversight and for commitments from AI companies to address these concerns effectively.

The Key Recommendations from the Open Letter

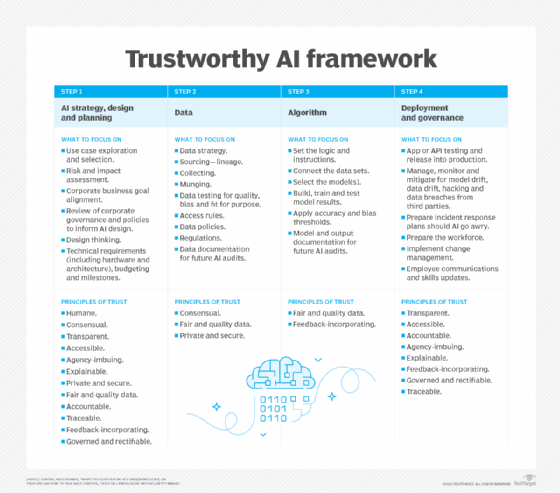

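The developers call on AI companies to commit to four principles intended to mitigate risks and ensure responsible AI development:

- Do not enter into or enforce agreements that prohibit criticism of the company over risk-related concerns

- Establish a verifiably anonymous process for current and former employees to raise risk-related concerns with the company's board, with regulators, and with an appropriate independent organization

- Support a culture of open criticism that allows employees to raise risk-related concerns publicly, as long as trade secrets are protected

- Do not retaliate against current or former employees who publicly share risk-related confidential information after other processes have failed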

Notable figures in the AI community have signed the letter. They include Daniel Kokotajlo and William Saunders, both of whom resigned from OpenAI over concerns about how the company was managing AI risks. These developments underscore the growing importance of ethical considerations and risk mitigation in AI research and development.

As the global AI industry continues to expand rapidly, discussions around data privacy, security, and ethics are becoming more urgent. Jurisdictions such as Brazil, Chile, and the European Union are already taking steps to regulate AI technologies and ensure their responsible use.

Amid the rapid pace of AI innovation, voices of caution within the industry are crucial for steering its development in a safe and ethical direction. AI developers are working together to raise awareness of potential risks and to advocate for responsible AI governance. These collective efforts are essential for shaping the future of this transformative technology.

Artificial Intelligence Specialist