Top Multimodal AI Tools & Workflow Automation 2025

Multimodal AI tools combine diverse data types such as text, images, video, and audio in a single system. These AI models are shaping the future of content creation, automation, and interaction by understanding and generating content across multiple formats.

Google Gemini

Google Gemini is a multimodal AI model with leading benchmark performance, optimized for a range of applications. It aims to put advanced AI technology in users' hands, though its complexity and limited availability can be obstacles for some users.

Claude by Anthropic

Claude, an adaptable AI assistant by Anthropic, offers sophisticated natural language processing, an emphasis on ethical behavior, document handling, API integration, and distinctive feedback mechanisms. Free users face tighter usage limits than paid users. Claude 3.7 Sonnet can give either immediate responses or extended, step-by-step reasoning.

DeepSeek

DeepSeek is an AI company that provides open-source large language models. Its flagship model, DeepSeek-V3, is noted for its strong performance and energy efficiency.

ChatGPT

ChatGPT is an AI assistant from OpenAI, built on large language models, that helps with text generation, translation, coding, and other tasks. Its paid version offers additional features.

Vertex AI

Vertex AI, a Google Cloud platform, streamlines ML workflows by providing access to state-of-the-art models and tools that cover the full model lifecycle. Its strengths are accelerated development, scalability, and seamless integration. Its drawbacks are a steep learning curve for novices, vendor lock-in, and high resource demands.

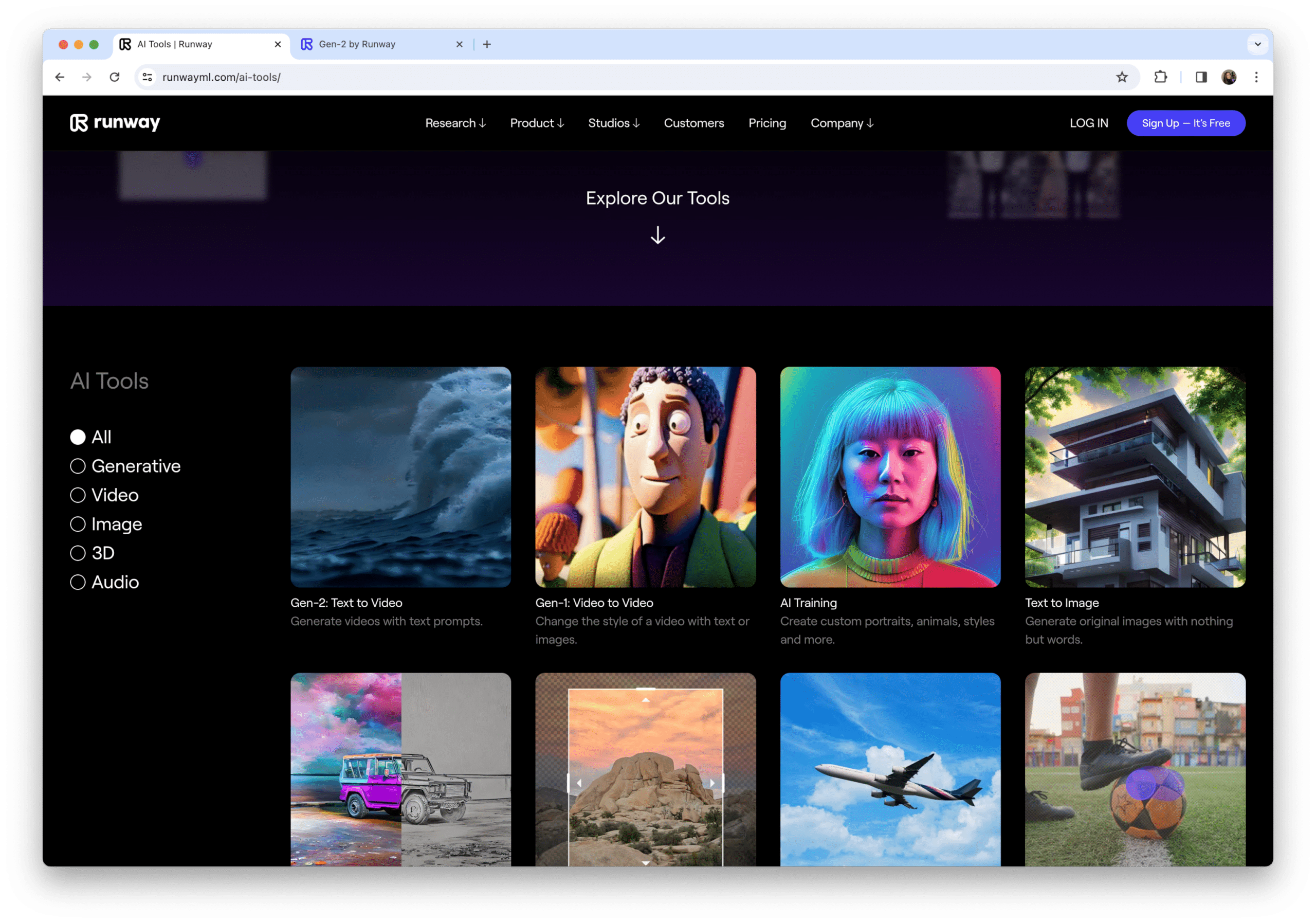

Runway

Runway offers over 30 AI-powered tools for editing text, images, and video, including AI training, color grading, green screen effects, and super-slow motion.

Groupify AI

At Groupify AI, we bring you a curated collection of Multimodal AI Tools designed to understand and generate content across text, image, audio, and video formats. These generative models combine different data types into unified AI systems, enabling creators, developers, and businesses to streamline complex workflows. At its core, multimodal AI follows the familiar approach of other AI systems: it is built on machine learning models trained on data.

Using natural language processing, these models can interpret context, sentiment, and semantics from text inputs and correlate them with corresponding visual, auditory, or spatial data. Whether you're looking to build intelligent applications or generate rich multimedia content, Groupify AI is your destination for the best multimodal AI models for every use case. We also feature insights into Meta AI's plans for future multimodal models, showcasing the cutting edge of the field and the growing use of advanced neural network architectures across platforms.

These tools are built on multimodal foundation models and are increasingly powered by large language models like Claude 3 and by integrated systems from leading research teams such as Google DeepMind. Generative AI capabilities are also being extended by tools like Runway Gen-2, which generates realistic video from text and image prompts. This progression marks a significant step in the evolution of AI, blending creativity and computation in unified systems that understand and produce content in contextual, human-like ways.

At Groupify AI, your go-to platform for AI discovery, we help you explore the most versatile Multimodal AI Tools available today. Dive into our curated listings to find the best AI apps that break format boundaries, amplify productivity, and unlock new creative possibilities. Many of these tools are built on large language models and neural network architectures that can integrate and interpret different types of input data. By combining natural language processing with vision and audio recognition systems, they enable more dynamic interactions and outputs across use cases. As these technologies evolve, neural networks and language models will play a growing role, driving a new wave of intelligent applications that adapt and respond more naturally.