AMA for Developers with OpenAI's API Team: Post Your Questions...

Little Dev Day confirmed: The Shipmas API drop is happening this Tuesday. Join the AMA with the API team right after the release! To prepare for the event—or if you’re unable to attend—you can post your questions here in advance. The AMA will begin at approximately 2024-12-17T18:30:00Z, right after the Shipmas live stream. You can also follow @OpenAIDevs on X (Twitter) to learn when the event will start. https://x.com/OpenAIDevs

We’re looking forward to your questions, helpful answers, and an all-around great time! See you there!

Hello, great news! So:

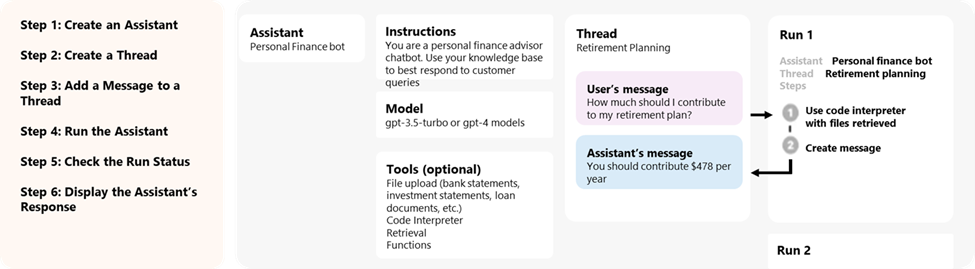

May we expect o1 in the Assistants API? And a fix for the source info (broken for many months)?

Do you have any information on whether the Assistants API will make it easier for us to retrieve the actual text that the assistant is using from the vector store? I believe v1 had an easier way to do this, but it seems to have been removed in v2.

Great news, devs have been waiting patiently for our Shipmas present! With o1 now having vision capabilities (which I presume will be released in the API in the future), how should we be thinking about using it for use cases that typically require in-context learning? Is it effective to give the model a multi-shot prompt in which each example contains the ~5 images that will be encountered in the real world, or is a different strategy recommended?
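For anyone who wants to experiment with this, the multi-shot layout described in the question could be assembled roughly as follows. This is only a sketch under stated assumptions: the content-part shape (`type: "image_url"`) follows the Chat Completions format used by existing vision-capable models, and nothing in this thread confirms that o1 accepts it over the API. The helper names (`image_part`, `build_multishot_messages`), the example URLs, and the labels are hypothetical. The code only builds the message list and does not call any API.

```python
from typing import Any, Dict, List, Tuple

Message = Dict[str, Any]


def image_part(url: str) -> Dict[str, Any]:
    # Content part for one image, in the Chat Completions vision format.
    # Assumption: the target model accepts this part type.
    return {"type": "image_url", "image_url": {"url": url}}


def build_multishot_messages(
    instruction: str,
    examples: List[Tuple[List[str], str]],
    query_images: List[str],
) -> List[Message]:
    """Build a multi-shot prompt: one user/assistant pair per example,
    followed by a final user turn holding the real images to classify."""
    messages: List[Message] = []
    for image_urls, expected_answer in examples:
        # Each example repeats the instruction and carries its own images.
        content = [{"type": "text", "text": instruction}]
        content.extend(image_part(u) for u in image_urls)
        messages.append({"role": "user", "content": content})
        # The assistant turn shows the answer we want for that example.
        messages.append({"role": "assistant", "content": expected_answer})
    # Final turn: same instruction, but with the images we actually care about.
    content = [{"type": "text", "text": instruction}]
    content.extend(image_part(u) for u in query_images)
    messages.append({"role": "user", "content": content})
    return messages


# Hypothetical data: two worked examples with 5 images each, then one query.
examples = [
    ([f"https://example.com/a{i}.png" for i in range(5)], "defect"),
    ([f"https://example.com/b{i}.png" for i in range(5)], "ok"),
]
query = [f"https://example.com/q{i}.png" for i in range(5)]
messages = build_multishot_messages("Classify this part.", examples, query)
```

Whether this layout is actually effective for a reasoning model, compared with describing the examples in text or fine-tuning, is exactly the open question above; the sketch only shows the payload shape so it can be tested once image input is available.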

Is there any news on the Assistants API, particularly File Search updates (metadata, image parsing, etc.)? Citations with exact text references are still missing as well. Thanks and happy Shipmas!

Will we ever get proper powerful embedding models again? Are multimodal embeddings on the horizon? Will you try to convince the safety team that preempting model responses isn’t the end of humanity? Will we ever get a more powerful completion model?

Ask a hypothetical question now, don’t know what you’re asking about until tomorrow? Clever twist.

“…so after that announcement, how long until O1-preview shutoff, if so?”

“…a parameter to tune reasoning length from minimum (for continued chat quality and speed) up to Pro 0-shot performance (that might confuse who even asked?)”

“inter-call ID reference for continuing on prior reasoning context we can’t supply via API but paid for?”

“…with that additional context length, will context caching see further competitive discounts?”

Or what can be known:

On the {n} day of Chanukah, my true love gave to me: