maadaa AI News & Datasets: OpenAI's GPT-4o Mini Debuts, NVIDIA Partners with Mistral as Tech

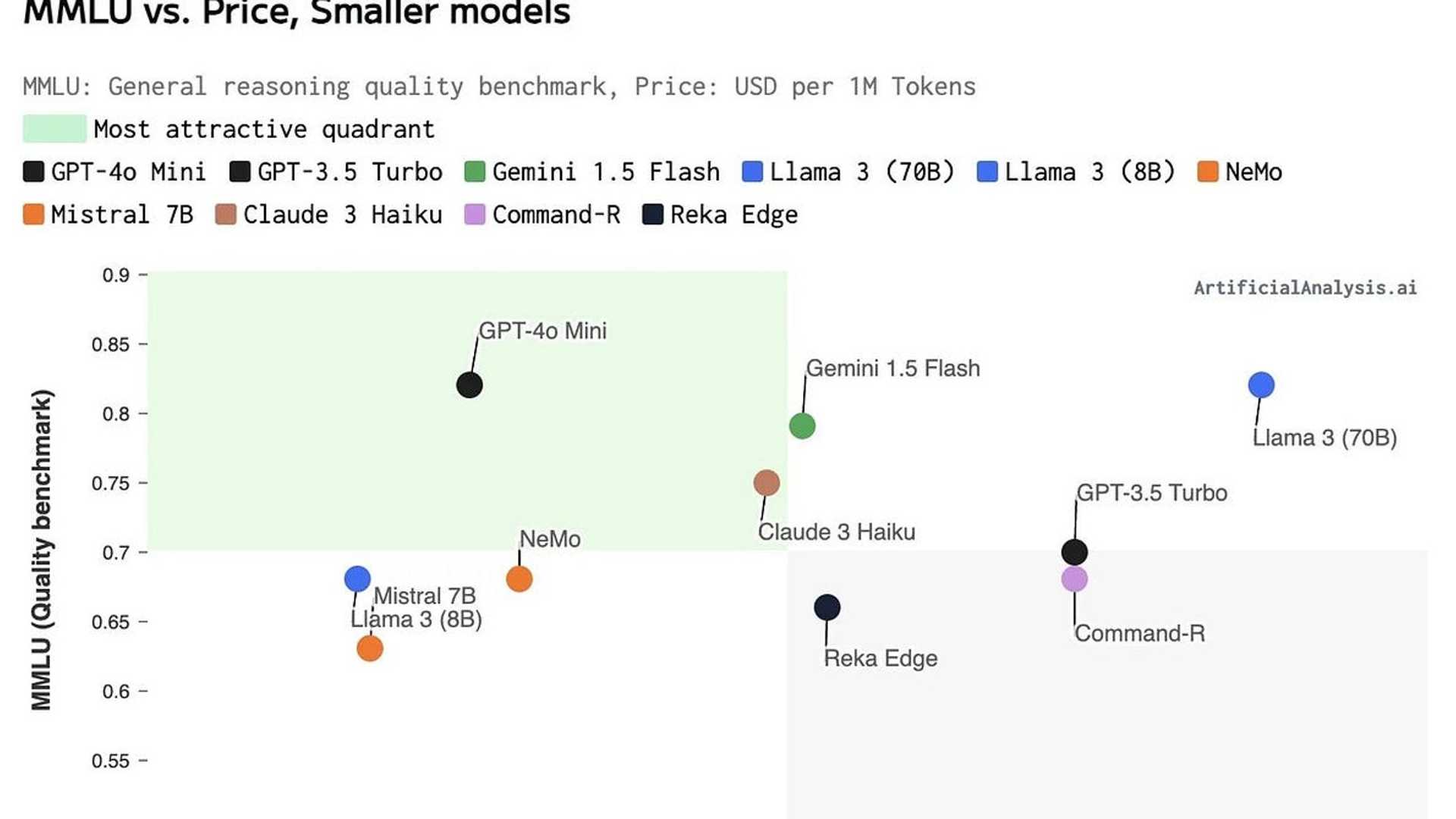

(maadaa AI News Weekly: July 16 ~ July 22) OpenAI has introduced GPT-4o Mini, a smaller version of its GPT-4o model, designed to power ChatGPT. The new model aims to deliver high performance while using fewer computational resources. The introduction of GPT-4o Mini is significant for training datasets: by reducing computational costs and resource requirements, it makes AI technologies more accessible and scalable. This can lead to wider use and integration of advanced AI across applications, which in turn can improve the quality and diversity of training datasets.

NVIDIA and Mistral AI Partnership

NVIDIA and Mistral AI have launched Mistral NeMo 12B, a cutting-edge language model tailored for enterprise use. This model leverages NVIDIA’s advanced hardware and software to deliver high performance and efficiency in various applications:

- Designed for enterprise applications, supporting tasks like multi-turn conversations, math, reasoning, and coding.

- Features a 128K context length for handling extensive information.

- Released under the Apache 2.0 license, promoting innovation.

- Uses the FP8 data format for efficient inference.

- Deployable as an NVIDIA NIM inference microservice.

The release of Mistral NeMo 12B is significant as it enhances training datasets by offering a high-performance, efficient model optimized for enterprise use. Its ability to handle complex tasks and large context lengths improves the quality and diversity of training data, fostering broader AI adoption and innovation across various industries.
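
To make the FP8 point above concrete, here is a rough back-of-the-envelope sketch of how the storage format affects the memory needed just to hold the model's weights. It assumes "12B" means exactly 12 billion parameters (the real count may differ) and ignores activations, the KV cache, and runtime overhead, so treat the numbers as an illustration rather than a deployment figure. The function name `weight_memory_gb` is hypothetical.

```python
# Rough weight-memory estimate for a 12B-parameter model at different precisions.
# Weights only: activations, KV cache, and runtime overhead are not counted.

PARAMS = 12_000_000_000  # assumption: "12B" taken at face value

# Bytes needed to store one parameter in each format.
BYTES_PER_PARAM = {"FP32": 4, "FP16": 2, "FP8": 1}


def weight_memory_gb(num_params: int, bytes_per_param: int) -> float:
    """Return weight storage in gigabytes (1 GB = 10**9 bytes)."""
    return num_params * bytes_per_param / 1e9


if __name__ == "__main__":
    for fmt, size in BYTES_PER_PARAM.items():
        print(f"{fmt}: {weight_memory_gb(PARAMS, size):.0f} GB")
```

Under these assumptions, FP8 halves the weight footprint relative to FP16, which is one reason an 8-bit format is attractive for inference.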

Ethical Concerns in AI Training

An investigation revealed that major AI companies, including Apple, NVIDIA, Anthropic, and Salesforce, used subtitles from over 173,000 YouTube videos to train their AI models without permission. This practice, which violates YouTube’s terms of service, has raised significant ethical and legal concerns among content creators and the broader community:

- Unauthorized Use: Subtitles from 173,536 YouTube videos were used without permission.

- Companies Involved: Apple, NVIDIA, Anthropic, and Salesforce leveraged this data.

- Diverse Sources: Data came from educational channels, news outlets, and popular YouTubers.

- Ethical Concerns: Content creators were not notified or compensated.

- Legal Implications: Raises questions about copyright, consent, and fair use.

This news highlights the ethical and legal challenges of using publicly available data for AI training. The diverse and extensive YouTube dataset enhances AI models by providing a wide range of real-world scenarios, but the lack of consent and compensation for content creators underscores the need for clearer regulations and ethical guidelines in AI development.

Recent Developments in AI

Tech giants including Google, OpenAI, and Microsoft have formed the Coalition for Secure AI (CoSAI) to promote AI safety and security by developing best practices and open-source tools for industry use. Opera has released a new version of its Opera One browser featuring the “Aria” AI assistant, which can summarize web content, translate, and generate images and audio. Fei-Fei Li, the “godmother of AI” known for ImageNet and for advising the White House on AI, founded World Labs, which reached a $1 billion valuation in four months.